by University of Copenhagen

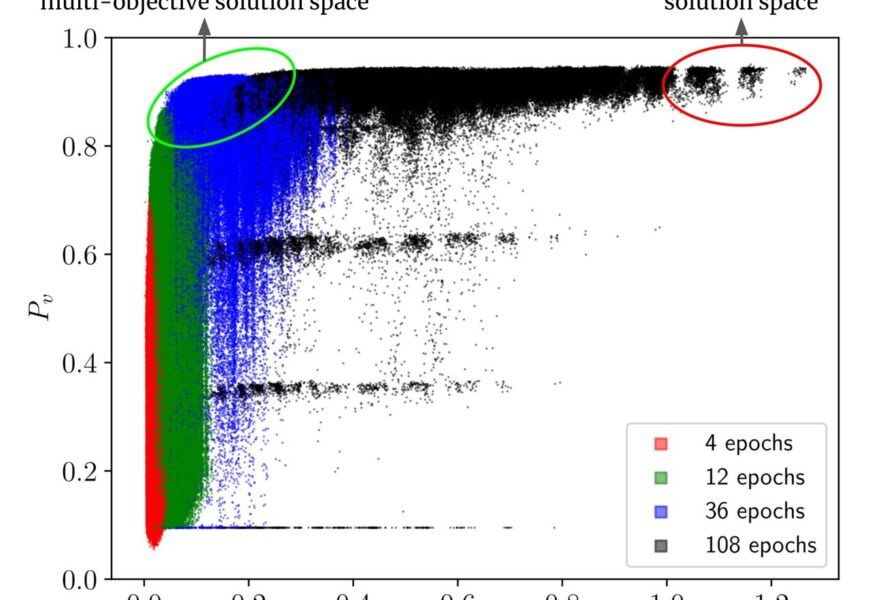

In this illustration, each point represents a convolutional neural network model, with energy consumption plotted on the horizontal axis and performance on the vertical axis. Traditionally, models have been chosen based solely on their performance, neglecting energy efficiency considerations, leading to selections within the red ellipse. This study introduces the concept of selecting models from the green ellipse, striking a balance between effectiveness and efficiency. Image credit: Figure sourced from a scientific article available at ieeexplore.ieee.org/document/10448303

It is widely acknowledged that significant energy resources are required for activities such as conducting searches on Google, interacting with virtual assistants like Siri, requesting tasks from ChatGPT, or utilizing AI technologies in various applications.

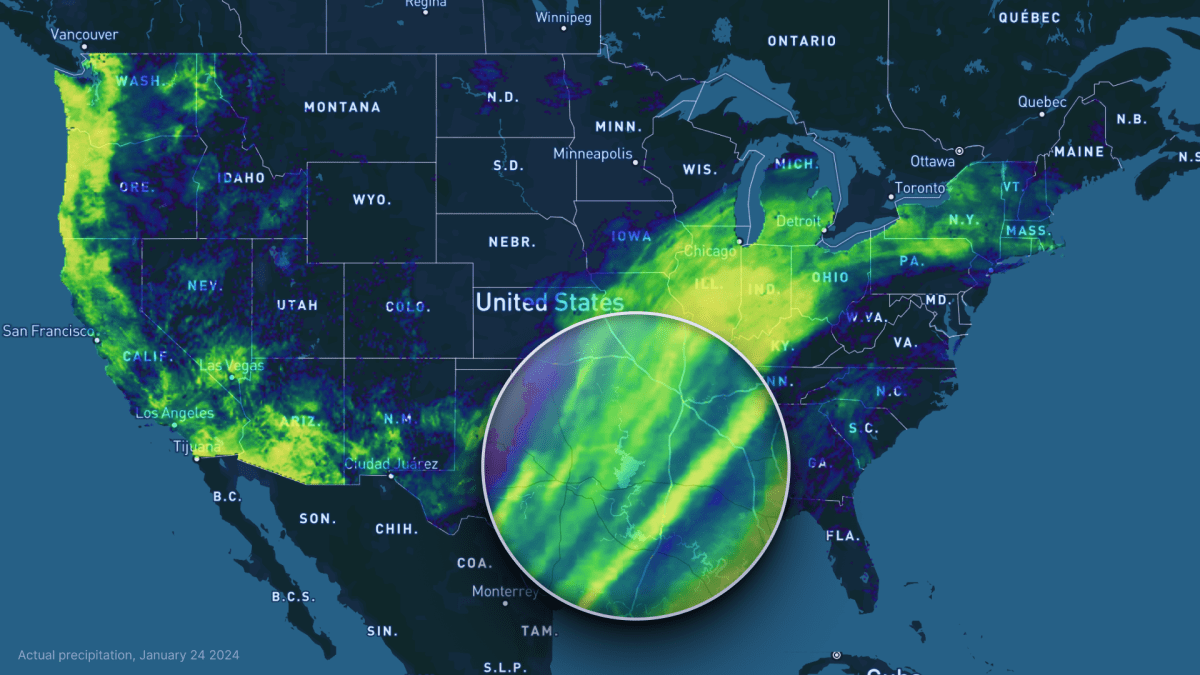

Projections indicate that by 2027, AI servers will consume as much energy as entire countries such as Argentina or Sweden. A single ChatGPT prompt, for instance, is estimated to consume as much energy as forty full mobile phone charges. Despite this, the research community and industry have yet to prioritize the development of energy-efficient AI models, according to computer science researchers at the University of Copenhagen.

Assistant Professor Raghavendra Selvan from the Department of Computer Science emphasizes the current trend of focusing primarily on the effectiveness of AI models in terms of result accuracy, akin to valuing a car solely for its speed without considering fuel efficiency. Consequently, many AI models exhibit inefficiencies in energy consumption. Selvan, whose research delves into reducing the carbon footprint of AI, stresses the importance of considering climate impacts throughout the design and training phases of AI models.

A recent study, co-authored by Selvan and computer science student Pedram Bakhtiarifard, demonstrates the feasibility of significantly reducing CO2 emissions without compromising the precision of AI models. The key lies in integrating climate considerations from the model’s inception, leading to substantial energy savings without sacrificing performance. These findings will be presented at the International Conference on Acoustics, Speech, and Signal Processing (ICASSP-2024).

By proactively designing energy-efficient models, the carbon footprint across all stages of a model’s lifecycle, from training to deployment, can be minimized. The researchers advocate for a paradigm shift where AI professionals prioritize energy efficiency alongside performance metrics, enabling the selection of models based on both criteria without the need for extensive training of each model.

The study estimated the energy required to train more than 400,000 convolutional neural network models, offering insights into energy-efficient alternatives for tasks such as medical image analysis, language translation, and image recognition. By choosing a different model or adjusting an existing one, energy savings of 70–80% during training and deployment can be achieved with minimal loss of performance. The researchers describe this figure as a conservative estimate.
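The idea of choosing a model on both energy and performance can be illustrated with a minimal Python sketch. It is not the researchers' code or dataset: the `Model` tuple, the helper names, and all numbers below are hypothetical and invented purely for illustration. The sketch keeps only models that no other model beats on both criteria (the Pareto front), then picks the cheapest model whose accuracy is within a chosen tolerance of the best.

```python
from typing import NamedTuple


class Model(NamedTuple):
    name: str
    energy_kwh: float  # hypothetical training energy, not from the study
    accuracy: float    # hypothetical accuracy, not from the study


def pareto_front(models):
    """Return the models that are not dominated by any other model.

    A model is dominated when another model needs no more energy and is
    at least as accurate. Exact duplicates are reported only once.
    """
    front = []
    best_accuracy = float("-inf")
    # Sweep from lowest to highest energy; on ties, most accurate first.
    for m in sorted(models, key=lambda m: (m.energy_kwh, -m.accuracy)):
        if m.accuracy > best_accuracy:
            front.append(m)
            best_accuracy = m.accuracy
    return front


def cheapest_within(models, tolerance):
    """Return the lowest-energy model within `tolerance` of the best accuracy."""
    best = max(m.accuracy for m in models)
    candidates = [m for m in models if m.accuracy >= best - tolerance]
    return min(candidates, key=lambda m: m.energy_kwh)


# Made-up candidates standing in for the points in the illustration.
models = [
    Model("A", 1.0, 0.900),
    Model("B", 2.0, 0.930),
    Model("C", 2.5, 0.920),
    Model("D", 8.0, 0.940),
    Model("E", 9.0, 0.935),
]

front = pareto_front(models)
choice = cheapest_within(models, tolerance=0.015)

print("Pareto front:", [m.name for m in front])
print("Chosen model:", choice.name)
```

With these invented numbers, selecting on accuracy alone would pick model D, while accepting a small accuracy tolerance picks model B at a fraction of the energy. The tolerance is a design choice that depends on the application.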

In fields like autonomous vehicles or certain medical applications where precision is paramount for safety, maintaining performance levels is crucial. However, this should not deter efforts to enhance energy efficiency in other domains.

The researchers stress the importance of a holistic approach to AI development that integrates climate considerations alongside performance metrics. They advocate for making energy efficiency a standard criterion in AI model development, akin to existing practices in other industries.

The comprehensive dataset compiled in this study, serving as a “recipe book” for AI professionals, is openly available for other researchers to experiment with. Detailed information on more than 400,000 model architectures can be accessed on GitHub using simple Python scripts.

The analysis estimated that training the 429,000 convolutional neural network models in the dataset would require 263,000 kWh, equivalent to the energy consumption of an average Danish citizen over 46 years. The training process alone would take approximately 100 years for a single computer to complete. Notably, the researchers leveraged AI models for estimation, saving 99% of the energy that would have been expended in actual training.
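As a back-of-the-envelope check, the figures quoted above can be broken down per model. The sketch below uses only the rounded numbers from this article. The derived values are implied averages rather than results reported by the researchers, and the hours-per-year constant and the reading of the 99% saving as "estimation cost about 1% of training" are assumptions.

```python
# Rounded figures quoted in the article.
TOTAL_ENERGY_KWH = 263_000      # estimated energy to train every model
NUM_MODELS = 429_000            # models in the dataset
CITIZEN_YEARS = 46              # "average Danish citizen over 46 years"
SINGLE_MACHINE_YEARS = 100      # training time on a single computer
ESTIMATION_SAVING = 0.99        # share of energy saved by estimating

# Assumption: an average year of 365.25 days.
HOURS_PER_YEAR = 365.25 * 24

# Average energy and time per model implied by the totals.
energy_per_model_kwh = TOTAL_ENERGY_KWH / NUM_MODELS
hours_per_model = SINGLE_MACHINE_YEARS * HOURS_PER_YEAR / NUM_MODELS

# Annual consumption per citizen implied by the comparison.
implied_citizen_kwh_per_year = TOTAL_ENERGY_KWH / CITIZEN_YEARS

# Assumption: estimation used about 1% of the full training energy.
estimation_energy_kwh = TOTAL_ENERGY_KWH * (1 - ESTIMATION_SAVING)

print(f"Energy per model:        {energy_per_model_kwh:.2f} kWh")
print(f"Training time per model: {hours_per_model:.1f} hours")
print(f"Implied citizen usage:   {implied_citizen_kwh_per_year:.0f} kWh/year")
print(f"Energy for estimation:   {estimation_energy_kwh:.0f} kWh")
```

On these rounded inputs, each model averages roughly 0.6 kWh and about two hours of training, which shows how small per-model costs add up across hundreds of thousands of candidates.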

Training AI models is energy-intensive because it involves heavy computation on powerful hardware, and this contributes significantly to their carbon footprint. Large models such as the one underpinning ChatGPT consume substantial energy, and their environmental impact is amplified when they are trained and run in data centers powered by fossil fuels.

More information:

Pedram Bakhtiarifard et al, EC-NAS: Energy Consumption Aware Tabular Benchmarks for Neural Architecture Search, ICASSP 2024 – 2024 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (2024). DOI: 10.1109/ICASSP48485.2024.10448303

Citation:

Computer scientists show the way: AI models need not be so power-hungry (2024, April 3) retrieved 3 April 2024 from https://techxplore.com/news/2024-04-scientists-ai-power-hungry.html
