MLCommons is developing a new desktop AI benchmark suite, the consortium announced earlier today. Working through its newly formed MLPerf Client working group, the consortium plans to create a standardized set of client AI benchmarks for traditional desktop PCs, workstations, and laptops. The first version of the MLPerf Client benchmark suite will be based on Meta’s Llama 2 LLM, with an initial focus on Windows.

MLCommons, whose MLPerf benchmarks are the industry standard for AI training and inference on servers and HPC systems, has in recent years extended its offerings to a wider range of devices, including mobile and low-power devices. With the MLPerf Client benchmark suite for PCs and workstations, the consortium aims to fill the remaining gap in its benchmark portfolio. The initiative is a significant milestone, and it is also the group’s most ambitious project to date.

The primary objective of the MLPerf Client working group is to develop a benchmark suite for client PCs that reflects real-world AI workloads and delivers meaningful, practical results. Because MLCommons develops its standards through a collaborative, consensus-driven process, the effort is being announced while it is still in its initial phases. The group has outlined some technical aspects of the suite, but the benchmark tasks themselves still need to be specified and refined over the coming months.

The working group has already decided to adopt Meta’s Llama 2 model, specifically the 7 billion parameter version (Llama-2-7B), as the foundation for the MLPerf Client benchmark suite. The group considers a model of this size and complexity suitable for client PCs. It is still defining the benchmark’s specifics, particularly the tasks on which the Llama model will be evaluated.
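To give a rough sense of why a 7 billion parameter model is plausible on client hardware, the sketch below estimates the memory needed for the weights alone at a few common storage precisions. The precisions are illustrative assumptions; the working group has not said which formats the benchmark will use. The parameter count is the nominal 7 billion implied by the model’s name, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
# Nominal parameter count implied by the "7B" name (assumption: exactly 7e9).
NOMINAL_PARAMS = 7_000_000_000

# Bytes per weight for some common storage formats. These are illustrative
# choices, not formats confirmed for the MLPerf Client benchmark.
BYTES_PER_WEIGHT = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gib(params: int, bytes_per_weight: float) -> float:
    """Return the memory needed for the weights alone, in GiB."""
    return params * bytes_per_weight / 2**30


if __name__ == "__main__":
    for fmt, size in BYTES_PER_WEIGHT.items():
        gib = weight_footprint_gib(NOMINAL_PARAMS, size)
        print(f"{fmt}: {gib:.1f} GiB")
```

Under these assumptions the weights come to roughly 13 GiB at 16-bit precision and a little over 3 GiB at 4-bit, which is the range where current client GPUs and system memory become relevant.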

The MLPerf Client working group is targeting mass-market adoption by focusing first on Windows, a departure from the Unix-centric benchmarks MLCommons has produced so far. The goal is broad compatibility across devices ranging from laptops to desktops and workstations. There are plans to extend the suite to other platforms in the future, but the initial emphasis is on establishing a strong presence in the dominant Windows PC market.

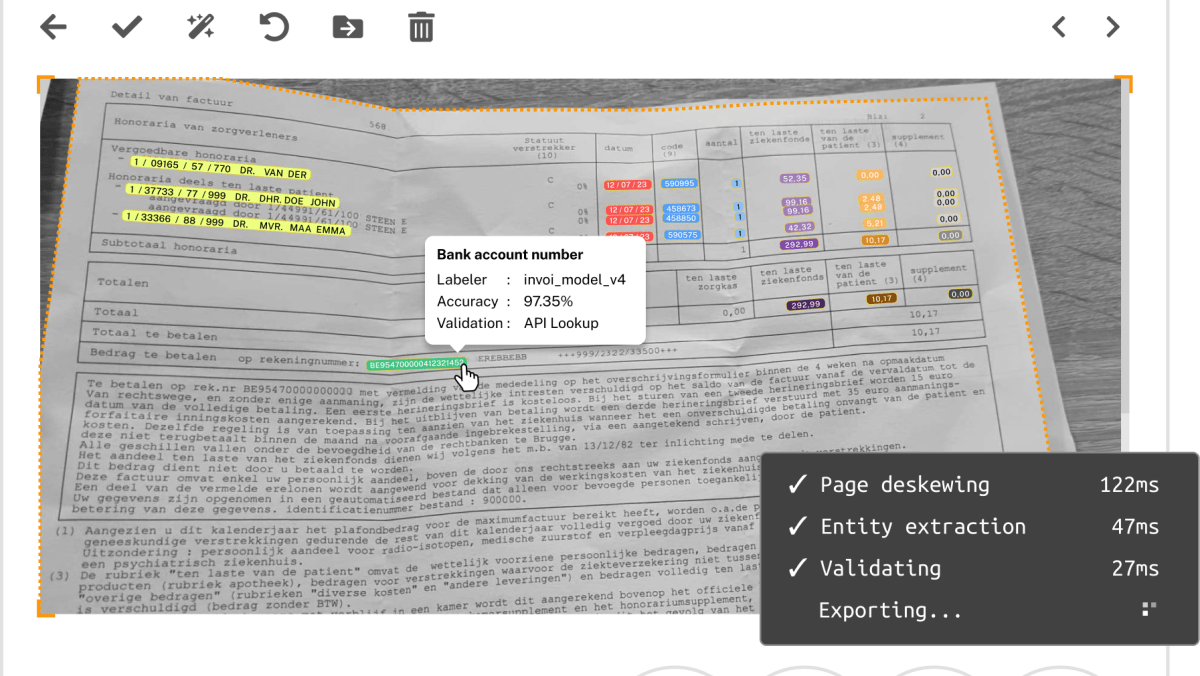

Despite the group’s extensive experience with machine learning workloads, the shift to client computing poses a significant challenge. Unlike previous MLPerf benchmarks, which were designed for hardware manufacturers and data scientists, MLPerf Client is intended to have a user-friendly interface that makes it accessible to end users. That means identifying representative machine learning workloads and building an effective visual front end for them.

In discussions with MLCommons representatives, it is evident that the group has a clear roadmap for the APIs and runtimes the benchmark will run on, even though other details of the suite remain unresolved. MLPerf Client is expected to support a variety of execution backends, including Windows APIs such as WinML and DirectML as well as vendor-specific platforms such as CUDA and OpenVINO. This flexibility is in line with the laissez-faire approach the consortium takes in its other benchmarks.

Furthermore, the benchmark suite is designed to accommodate varied hardware configurations, letting vendors use GPUs, CPUs, and emerging NPUs as they see fit. The working group includes hardware and software industry leaders such as Intel, AMD, NVIDIA, Arm, Qualcomm, Microsoft, and Dell, which gives it balanced representation and the industry-wide buy-in needed for widespread adoption.

While the MLPerf Client standard is still in development, it is poised to join existing Windows client AI benchmarks such as UL’s Procyon AI and Primate Labs’ Geekbench ML. MLCommons aims to differentiate itself through a collaborative approach that involves all partners in decision-making, with the intent of securing consensus and long-term support for the suite. Over time, the goal is for MLPerf Client to expand to a broader range of workloads and models, and to move beyond Windows to other computing environments.