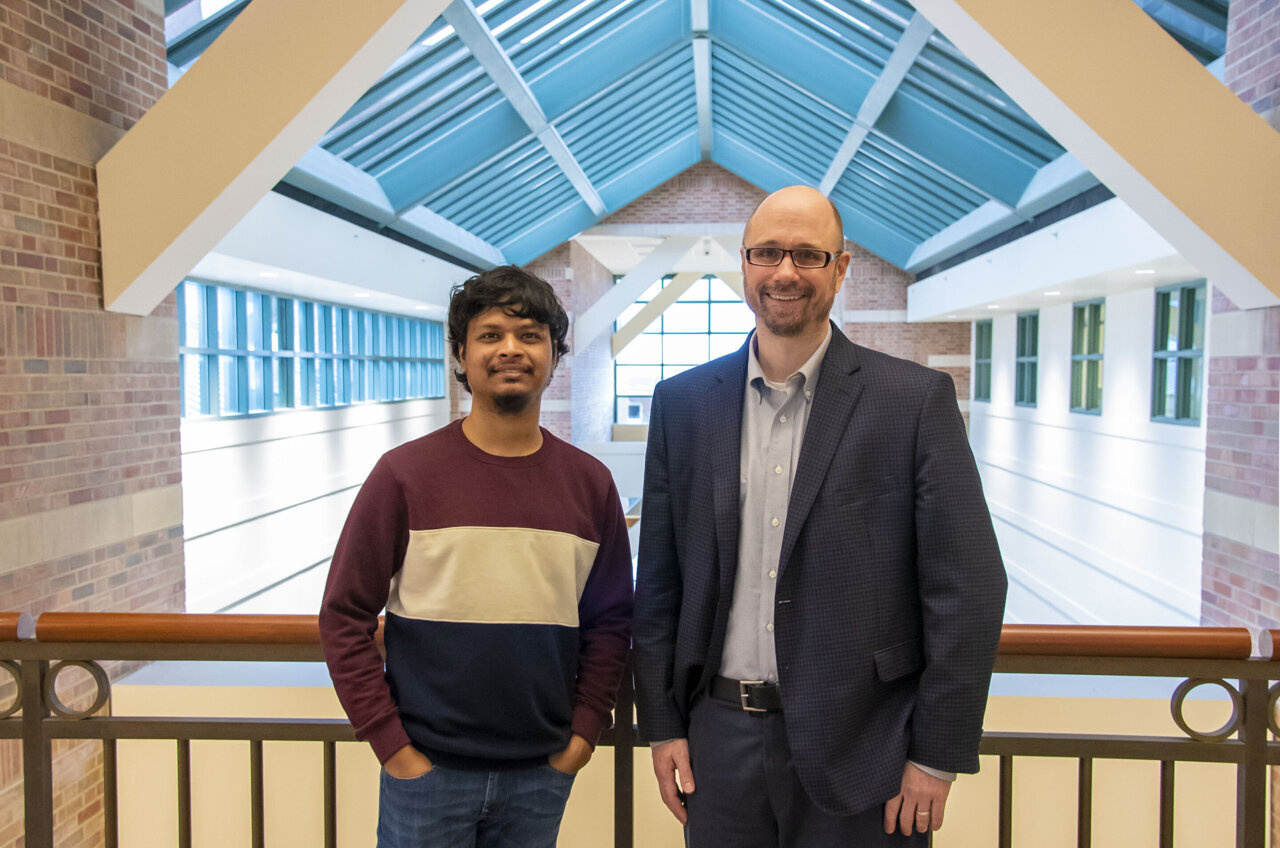

Researchers at the Beckman Institute, led by Mark Anastasio and Sourya Sengupta, have introduced an artificial intelligence (AI) model that accurately detects tumors and diseases in medical images. For each diagnosis, the tool generates an explanatory map that helps healthcare providers understand the reasoning behind the result, verify its accuracy, and communicate it clearly to patients. (Credit: Jenna Kurtzweil, Beckman Institute Communications Office.)

Part medical diagnostics expert, part doctor's assistant, and part cartographer, the model is designed to make the diagnostic process more transparent. Because it explains each diagnosis visually, doctors can follow its decision-making, check its results, and explain them to patients.

Sourya Sengupta, the study's lead author and a graduate research assistant at the Beckman Institute, stressed that the model is meant both to help detect disease early and to make its decisions understandable. The research was recently published in IEEE Transactions on Medical Imaging.

Artificial intelligence has evolved considerably since the 1950s, and machine learning (ML) has become a fundamental method for building intelligent systems. Deep learning, a more advanced form of ML, uses deep neural networks to process vast amounts of data and make sophisticated decisions.

Despite their power, deep neural networks cannot readily explain how they reach their decisions, a limitation known in AI as the "black box problem." This limitation becomes critical in medical imaging, where clinicians must be able to trust and interpret a result, for example when detecting breast cancer at an early stage.

Sengupta's team addressed this problem with an AI model that not only makes accurate diagnoses but also interprets itself through visual maps. These maps, called equivalency maps (E-maps), assign values to different regions of a medical image, showing doctors which regions drove the model's decision.

The researchers trained and tested the model on more than 20,000 images across three diagnostic tasks: detecting tumors, macular degeneration, and heart enlargement. Its accuracy was comparable to that of existing black-box AI systems, indicating that the model gains interpretability without sacrificing performance.

By bridging the gap between AI decision-making and human understanding, the researchers envision broader applications for the model in diagnosing anomalies throughout the body. Beyond improving disease detection, the approach is intended to build trust and transparency between healthcare providers and patients.

The study, titled “A Test Statistic Estimation-based Approach for Establishing Self-interpretable CNN-based Binary Classifiers,” was published in IEEE Transactions on Medical Imaging.