In 2024, there is no shortage of interconnect options for linking tens, hundreds, thousands, or even tens of thousands of accelerators.

Each vendor has its own approach. Nvidia uses NVLink and InfiniBand, while Google uses optical circuit switches (OCS) to stitch together its TPU pods. AMD's Infinity Fabric carries die-to-die and chip-to-chip traffic and will soon extend to node-to-node. Intel, meanwhile, relies on standard Ethernet for Gaudi2 and Gaudi3.

The hard part is not building a large enough mesh network but mitigating the steep performance penalties and bandwidth limits that come with going off-package. On top of that, AI processing depends on HBM, which is packaged right alongside the compute die, so memory capacity is tied to the amount of compute it is attached to.

Celestial AI founder Dave Lazovsky describes the current state of the industry as one in which Nvidia GPUs essentially function as very expensive memory controllers. Backed by a recent, substantial funding round from investors including AMD Ventures, Celestial is pushing ahead with its Photonic Fabric technology.

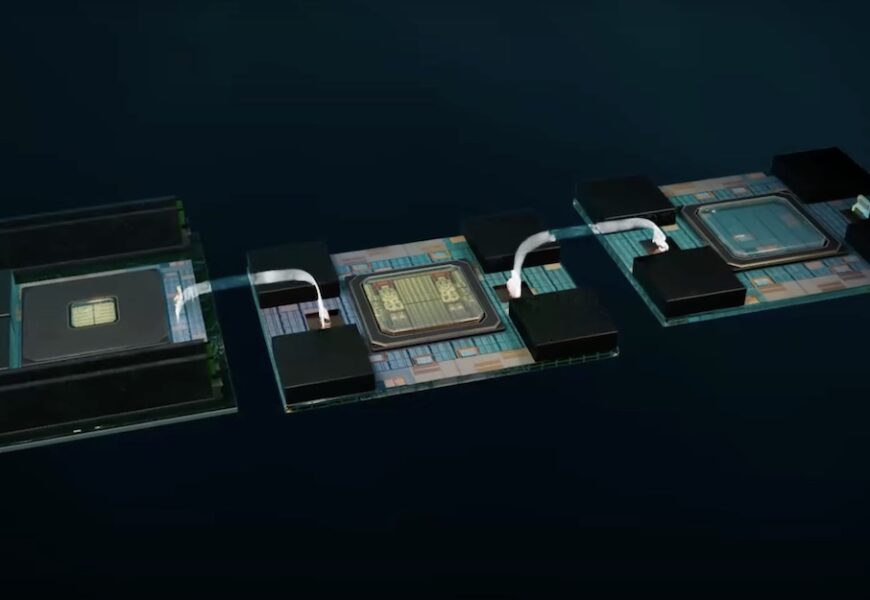

Celestial's Photonic Fabric spans a range of silicon photonics interconnects, interposers, and chiplets designed to decouple AI compute from memory. The company is tight-lipped about its partners, but AMD Ventures' involvement, along with talk of co-packaging its silicon photonic chiplets and integrating the technology with hyperscale customers and processor vendors, hints at significant developments.

The core components of Celestial's technology are chiplets, interposers, and OMIB, an optical take on packaging technologies such as Intel's EMIB or TSMC's CoWoS. The chiplets are the most flexible piece: they can be used either to expand HBM capacity or to provide chip-to-chip links, much like an optical NVLink or Infinity Fabric.

The chiplets, slightly smaller than an HBM stack, combine opto-electrical interconnects that deliver substantial off-chip bandwidth. Celestial says the upcoming second-generation Photonic Fabric will raise that bandwidth further.

Celestial's lineup also includes a memory expansion module that pairs HBM stacks with DDR5 DIMMs to deliver both capacity and bandwidth. Built around a 5-nanometer switch ASIC, the module effectively turns the HBM into a write-through cache for the DDR5: the DDR5 supplies bulk capacity while the HBM serves frequently accessed data at high bandwidth, striking a balance between capacity, bandwidth, and latency.
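To make the write-through idea concrete, here is a toy model of a small, fast tier (standing in for HBM) caching a larger, slower tier (standing in for DDR5). Celestial has not published the module's cache policy beyond "write-through," so the LRU eviction, the write-allocate behavior, and the `WriteThroughCache` class itself are illustrative assumptions, not the company's design.

```python
from collections import OrderedDict


class WriteThroughCache:
    """Hypothetical toy model: a fast tier ("HBM") caching a slow tier ("DDR5").

    Capacity is counted in cache lines. LRU eviction and write-allocate are
    assumptions made for illustration only.
    """

    def __init__(self, capacity, backing):
        if capacity < 1:
            raise ValueError("capacity must be at least one line")
        self.capacity = capacity
        self.backing = backing      # dict: address -> value (the "DDR5" tier)
        self.lines = OrderedDict()  # address -> value, oldest entry first (LRU order)
        self.hits = 0
        self.misses = 0

    def read(self, addr):
        if addr in self.lines:
            self.hits += 1
            self.lines.move_to_end(addr)   # mark as most recently used
            return self.lines[addr]
        self.misses += 1
        value = self.backing.get(addr, 0)  # miss: fetch from the slow tier
        self._fill(addr, value)
        return value

    def write(self, addr, value):
        # Write-through: every write updates the backing tier immediately,
        # so the fast tier never holds dirty data and eviction needs no write-back.
        self.backing[addr] = value
        if addr in self.lines:
            self.lines[addr] = value
            self.lines.move_to_end(addr)
        else:
            self._fill(addr, value)        # write-allocate (assumed)

    def _fill(self, addr, value):
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)  # evict the least recently used line
        self.lines[addr] = value


if __name__ == "__main__":
    ddr5 = {}
    cache = WriteThroughCache(capacity=2, backing=ddr5)
    for addr, value in [(0, 10), (1, 11), (2, 12)]:
        cache.write(addr, value)
    # Address 0 was evicted from the fast tier, but DDR5 still holds it.
    print(ddr5, list(cache.lines))
```

The design trade-off shows up directly in `write`: because the slow tier always has the current copy, evicting a line from the fast tier costs nothing, but every write consumes slow-tier bandwidth.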

Celestial claims its memory solution carries significantly lower energy overhead per bit than conventional approaches. Multiple memory modules can also be combined into a memory switch, which, according to the company, lets machine-learning workloads run efficiently without additional switches.
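Energy per bit matters because it converts directly into interconnect power: power equals energy per bit multiplied by bit rate. The sketch below shows that arithmetic; the `link_power_watts` helper and the 5 pJ/bit, 1 pJ/bit, and 10 Tb/s figures are hypothetical placeholders, not numbers published by Celestial or anyone else.

```python
def link_power_watts(picojoules_per_bit: float, terabits_per_second: float) -> float:
    """Hypothetical helper: power (W) = energy per bit (J/bit) * bit rate (bit/s)."""
    joules_per_bit = picojoules_per_bit * 1e-12
    bits_per_second = terabits_per_second * 1e12
    return joules_per_bit * bits_per_second


if __name__ == "__main__":
    # Illustrative comparison at the same (made-up) 10 Tb/s of memory traffic.
    for pj in (5.0, 1.0):
        print(f"{pj} pJ/bit at 10 Tb/s -> {link_power_watts(pj, 10):.1f} W")
```

At a fixed bit rate, power scales linearly with energy per bit, so a fivefold cut in pJ/bit means a fivefold cut in the power spent moving the same data (50 W versus 10 W in this made-up example).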

Celestial aims to sample its Photonic Fabric chiplets to customers by the second half of 2025, with products expected by 2027. It is not alone: startups such as Ayar Labs and Lightmatter are also making significant strides in silicon photonics, and the industry's shift toward co-packaged optics and silicon photonic interposers looks increasingly like a question of when, not if.