It stands to reason that even the smartest, most cunning artificial intelligence algorithm will have to obey the laws of silicon. Its capabilities will inevitably be constrained by the hardware it runs on.

To reduce the risks posed by AI systems, researchers are exploring ways to exploit that dependence. The idea is to hard-code rules governing how AI is trained and deployed directly into the computer chips needed to run advanced algorithms.

This approach could offer a powerful new way to stop rogue nations or irresponsible companies from secretly developing dangerous AI. The concern is that highly capable AI may be harder to rein in through conventional laws or treaties. The Center for a New American Security (CNAS), an influential US foreign-policy think tank, recently published a report outlining how chips with built-in restrictions could be used to enforce a range of AI policies.

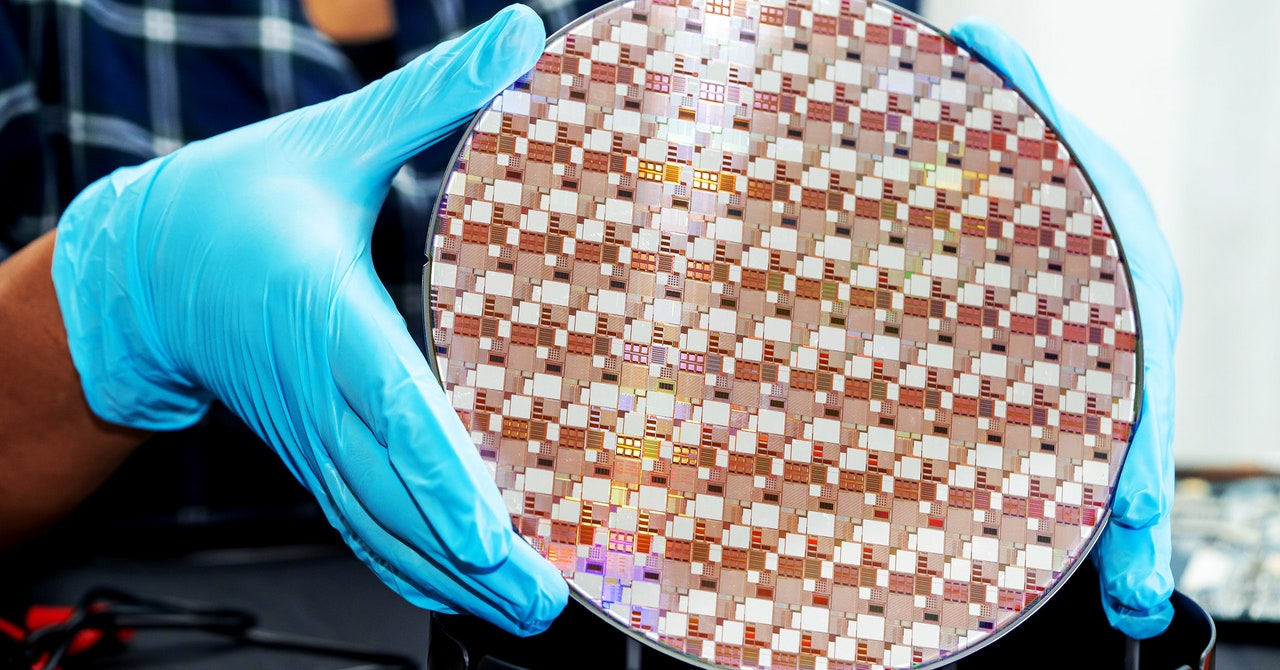

Some chips already include trusted components designed to protect sensitive data and prevent misuse. The latest iPhones, for example, keep a person’s biometric information in a ‘secure enclave’. Similarly, Google uses a custom chip in its cloud servers to verify that nothing has been tampered with.

To stop AI projects from accessing more than a certain amount of computing power without authorization, the report proposes using features already present in some GPUs or building new ones into future chip designs. Training advanced AI models, such as those behind ChatGPT, requires enormous amounts of computation, so controlling access to compute effectively limits who can build the most powerful systems.
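
To give a rough sense of the scale involved, the sketch below estimates training compute with a widely used rule of thumb for large transformer models (compute ≈ 6 × parameters × training tokens) and checks the result against a licensing threshold. The threshold and the model sizes are hypothetical and chosen only for illustration; they are not figures from the CNAS report.

```python
# Hypothetical compute threshold (in FLOPs) above which a license would be
# required. Illustrative only; not a figure from the CNAS report.
LICENSE_THRESHOLD_FLOPS = 1e25


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute with the rule of thumb C ≈ 6 * N * D."""
    return 6.0 * params * tokens


def needs_license(params: float, tokens: float,
                  threshold: float = LICENSE_THRESHOLD_FLOPS) -> bool:
    """Return True if the estimated training run meets or exceeds the threshold."""
    return training_flops(params, tokens) >= threshold


if __name__ == "__main__":
    # Hypothetical training runs: (label, parameters, training tokens)
    runs = [("small model", 1e9, 2e10), ("frontier-scale model", 1e12, 1e13)]
    for label, params, tokens in runs:
        flops = training_flops(params, tokens)
        print(f"{label}: {flops:.1e} FLOPs, license required: {needs_license(params, tokens)}")
```

With these made-up numbers, the small run comes to about 1.2 × 10^20 FLOPs and stays under the threshold, while the frontier-scale run comes to about 6 × 10^25 FLOPs and would need a license.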

According to CNAS, governments or regulators could issue certificates that must be renewed periodically, giving them control over who can run large-scale AI training. Tim Fist, a fellow at CNAS and a coauthor of the report, suggests that protocols could go further, for example by allowing a model to be deployed only after it has been evaluated and has scored above a set safety threshold.
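
The certificate idea can be sketched as a signed, time-limited license that a chip checks before allowing a training job. In this minimal sketch, the field names, compute budget, and validity period are all hypothetical, and an HMAC over a shared key stands in for the public-key signatures a real scheme would more plausibly use, so that the example runs with only the Python standard library. The expiry date forces periodic renewal, and a forged, expired, or exhausted license is rejected.

```python
import hashlib
import hmac
import json
import time
from dataclasses import dataclass
from typing import Optional

# Hypothetical regulator key. A shared HMAC secret is a simplification:
# a real design would more likely have the regulator sign with a private key
# and the chip hold only the matching public key.
REGULATOR_KEY = b"hypothetical-regulator-key"


@dataclass(frozen=True)
class License:
    chip_id: str        # hypothetical identifier of the chip the license covers
    max_flops: float    # total compute budget the license permits
    expires_at: float   # Unix time after which the license must be renewed
    signature: str      # regulator's HMAC-SHA256 tag over the fields above


def _sign(chip_id: str, max_flops: float, expires_at: float) -> str:
    # Canonical JSON encoding so issuer and chip compute the tag over identical bytes.
    payload = json.dumps(
        {"chip_id": chip_id, "max_flops": max_flops, "expires_at": expires_at},
        sort_keys=True,
    ).encode()
    return hmac.new(REGULATOR_KEY, payload, hashlib.sha256).hexdigest()


def issue_license(chip_id: str, max_flops: float, valid_seconds: float,
                  now: Optional[float] = None) -> License:
    """Regulator side: issue a time-limited, signed compute license."""
    now = time.time() if now is None else now
    expires_at = now + valid_seconds
    return License(chip_id, max_flops, expires_at, _sign(chip_id, max_flops, expires_at))


def authorize(lic: License, chip_id: str, flops_used: float,
              flops_requested: float, now: Optional[float] = None) -> bool:
    """Chip side: allow a job only if the license is authentic, current, and has budget left."""
    now = time.time() if now is None else now
    expected = _sign(lic.chip_id, lic.max_flops, lic.expires_at)
    if not hmac.compare_digest(expected, lic.signature):
        return False  # forged or altered license
    if lic.chip_id != chip_id:
        return False  # license was issued for a different chip
    if now >= lic.expires_at:
        return False  # expired; the operator must obtain a renewed license
    return flops_used + flops_requested <= lic.max_flops


if __name__ == "__main__":
    lic = issue_license("chip-001", max_flops=1e24, valid_seconds=30 * 24 * 3600, now=0)
    print(authorize(lic, "chip-001", flops_used=0, flops_requested=5e23, now=10))
    print(authorize(lic, "chip-001", flops_used=8e23, flops_requested=5e23, now=10))
    print(authorize(lic, "chip-001", flops_used=0, flops_requested=5e23, now=31 * 24 * 3600))
```

Run as a script, this prints `True` (the job fits within the budget), `False` (the request would exceed the budget), and `False` (the license has expired and needs renewal).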

Some prominent AI researchers worry that highly intelligent AI systems could one day become uncontrollable. More immediately, officials and experts fear that existing AI models could make it easier to develop chemical or biological weapons or to automate cybercrime. Washington has already imposed strict export controls on AI chips to limit China’s access to the most advanced AI for military use, although smuggling and clever engineering have offered partial ways around them. Nvidia declined to comment, but recent US export restrictions have significantly cut into the company’s sales in China.

Embedding restrictions directly into computer hardware may seem extreme, but Fist points to historical precedents in which infrastructure was built to monitor or control critical technologies and to uphold international agreements. Verification has long been central to enforcing treaties in the nuclear domain: networks of seismometers that detect underground nuclear explosions, for example, help verify nuclear test-ban treaties.

The CNAS proposals are not purely speculative. Nvidia’s high-end AI training chips, which are crucial for building the most powerful models, already include secure cryptographic modules. And in November 2023, researchers from the Future of Life Institute and Mithril Security demonstrated how the security module of an Intel CPU could be used in a cryptographic scheme that prevents unauthorized use of an AI model.

Still, building such controls into AI hardware poses technical and social challenges. Doing it robustly would require new hardware features and cryptographic schemes in future AI chips, and these would need to be effective, affordable, and resistant to tampering by adversaries with advanced chip-manufacturing capabilities. Earlier efforts such as the Clipper chip, an encryption chip designed by the NSA in the 1990s with a built-in backdoor that let the government decrypt communications, show how fraught it can be to weaken computer systems for national security purposes.

Aviya Skowron, head of policy and ethics at EleutherAI, notes that many software developers resist hardware-level interventions imposed by chip manufacturers. The technical advances needed to support governable chips are also substantial.

The idea has caught the attention of the US government: the Commerce Department’s Bureau of Industry and Security has solicited proposals for technical solutions that would let chips restrict AI capabilities. And in congressional testimony last April, Jason Matheny, president and CEO of the RAND Corporation, called for “microelectronic controls embedded in AI chips” to mitigate national security risks.