At Nvidia's most recent GTC event, the company's growing influence on enterprise infrastructure was impossible to miss. GTC, the industry's largest AI-focused gathering, spans nearly the entire AI ecosystem.

Applications and foundation models may ultimately drive business value and investment, but today's AI landscape is still defined by the specialized hardware it demands. Nvidia leads the way in enabling both cloud service providers and on-premises solution providers.

Nvidia’s Role as a Comprehensive Solution Provider

The headline announcement from Nvidia was its new Blackwell accelerators, designed to speed up AI training and deliver high-performance inference for generative AI. Nvidia's new B200 accelerators…

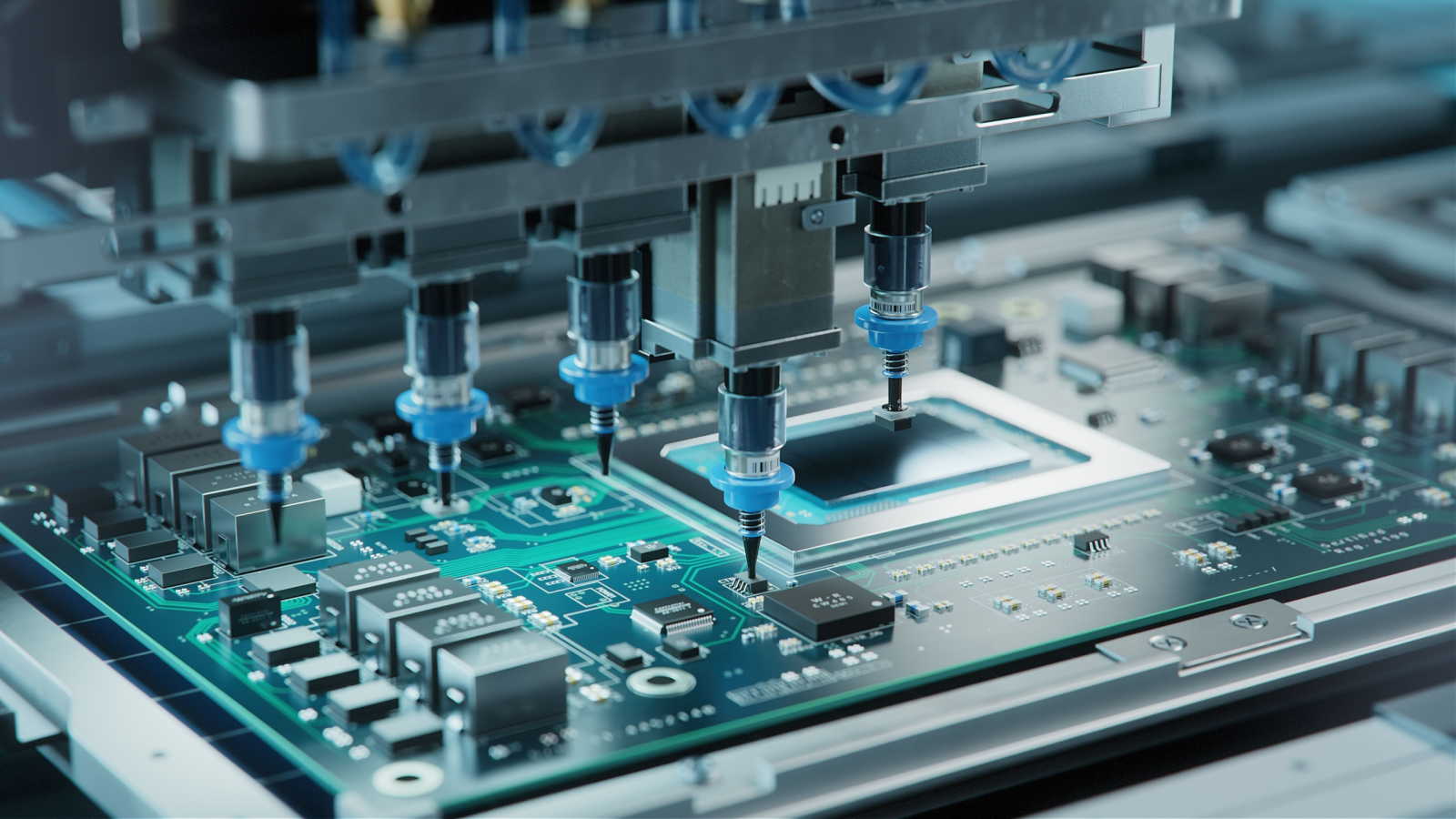

While customers will have access to the new GPUs on their own, Nvidia positions its accelerators as system-level solutions, offering an efficient, end-to-end platform for enterprise AI. That effort starts with the Nvidia GB200 NVL72, a rack-scale AI system built for large-scale AI and HPC workloads.

Combining Nvidia GPUs and CPUs linked by a 900 GB/s NVLink-C2C interconnect, the configuration delivers 80 petaflops of AI performance and 1.7 TB of fast memory, and supports up to 72 GPUs.

Scaling further, Nvidia unveiled a DGX SuperPOD built from DGX GB200 systems. Based on Nvidia GB200 Grace Blackwell Superchips, the SuperPOD is designed for trillion-parameter models and can scale to tens of thousands of GPUs.

The new system is designed for constant uptime through full-stack resilience. It uses an efficient liquid-cooled design and integrates Nvidia Base Command and Nvidia AI Enterprise software to streamline AI development and deployment, improving both developer productivity and system reliability.

AI Evolution in the Cloud Environment

Nvidia is shifting from a GPU-centric business to offering complete system-level solutions. This strategy has reportedly caused some unease among cloud service providers (CSPs), which prefer to build their own systems, although that tension appears to be easing.

Nvidia and Amazon's AWS, the last major CSP to adopt Nvidia's DGX Cloud, announced an expanded strategic partnership that includes a new AI system built as part of their revamped Project Ceiba.

Oracle Cloud, another key Nvidia partner in the DGX ecosystem, announced broad support for the GPU leader's latest technologies. Oracle may also integrate Nvidia's BlueField-3 DPUs into its offerings, giving customers a compelling new option for offloading data center tasks from CPUs.

Microsoft Azure announced support for Nvidia's Grace Blackwell GB200 and Nvidia Quantum-X800 InfiniBand technologies. Google Cloud, meanwhile, will adopt Nvidia's GB200 NVL72 systems, which combine 72 Blackwell GPUs and 36 Grace CPUs interconnected via fifth-generation NVLink.

OEMs Embrace the AI Revolution

AI is not confined to the cloud. OEMs such as Dell Technologies, HPE, Supermicro, and Lenovo all have substantial AI businesses. In their most recent earnings reports, Dell and HPE each disclosed a server backlog of roughly $2 billion.

Nvidia and Dell jointly announced the Dell AI Factory, a collaboration aimed at strengthening on-premises AI capabilities. The Dell AI Factory combines Dell's portfolio of compute, storage, networking, and workstations with Nvidia's AI Enterprise software suite and the Nvidia Spectrum-X networking fabric to deliver an integrated AI infrastructure.

Dell also updated its PowerEdge server lineup to support Nvidia's latest accelerator generation, including a new high-performance, liquid-cooled eight-GPU server.

Lenovo introduced new AI-centric ThinkEdge servers. Its liquid-cooled, eight-GPU ThinkSystem SR780a V3 server achieves a low power usage effectiveness (PUE). The air-cooled Lenovo ThinkSystem SR680a V3 server pairs Nvidia GPUs with Intel processors to accelerate AI workloads. Finally, the Lenovo PG8A0N is a 1U node with open-loop liquid cooling for its accelerators and support for the new Nvidia GB200 Grace Blackwell Superchip.

Rather than launching new servers, Hewlett Packard Enterprise expanded the capabilities of its generative AI solutions. Working with Nvidia, HPE introduced HPE Machine Learning Inference Software, which simplifies deploying ML models at scale. The new software integrates with Nvidia NIM to deliver foundation models in pre-built containers optimized for Nvidia platforms.

Evolution of Storage in the AI Landscape

AI training requires storage infrastructure that differs from traditional enterprise storage, because AI workloads place unique demands on throughput, latency, and scalability. Large training clusters need highly scalable parallel file systems, while conventional storage architectures are sufficient for smaller AI deployments. Both approaches were on display at GTC.

Weka and VAST Data are racing to supply data infrastructure to AI service providers, and their rivalry was hard to miss at GTC. Weka unveiled a new system, and its software earned Nvidia DGX SuperPOD certification. VAST Data showcased its recently launched solution built on Nvidia BlueField-3 DPUs, which targets scalable storage for large AI clusters.

Hammerspace also drew attention by announcing that Meta's newly unveiled 48K-GPU cluster incorporates Hammerspace technology.

On-premises AI deployments continue to rely on traditional enterprise storage. Pure Storage announced expanded support for AI workloads, including a RAG pipeline, an Nvidia OVX server storage reference architecture, new industry-specific RAG models developed with Nvidia, and a broader partner ecosystem that includes ISVs such as Run:ai and Weights & Biases.

Likewise, NetApp introduced new RAG-focused services built on Nvidia NeMo Retriever microservices.

Analyst’s Perspective

GTC showcased several key trends, including the increasing adoption of liquid-cooled solutions, the emphasis on inference infrastructure, the proliferation of edge AI, and the integration of AI in cybersecurity. These developments build upon the foundation laid by Nvidia through its collaborations with cloud providers and OEM partners.

AI remains at the forefront of technology, and its reach keeps broadening. Cloud providers are adopting more complete solution stacks, while on-premises AI adoption is growing. Inference is gaining prominence, driving demand for robust AI infrastructure both on-premises and at the edge.

Across this broad landscape, Nvidia stands out as the pivotal player defining the required infrastructure. By taking a platform-centric approach, Nvidia moves beyond standalone GPUs to provide integrated, system-level AI solutions. Beyond its new Blackwell accelerators, the Nvidia GB200 NVL72 systems and the corresponding SuperPOD offerings exemplify this strategy.

Nvidia’s strategic planning and execution have solidified its dominance in the AI market. The company is fostering ecosystems that enable businesses to navigate the AI landscape effectively, moving beyond chip sales to offer comprehensive solutions.

Disclosure: Steve McDowell, an industry analyst at NAND Research, provides research, analysis, and advisory services to various technology companies, including those mentioned in this article. Mr. McDowell does not hold equity positions in the businesses referenced here.