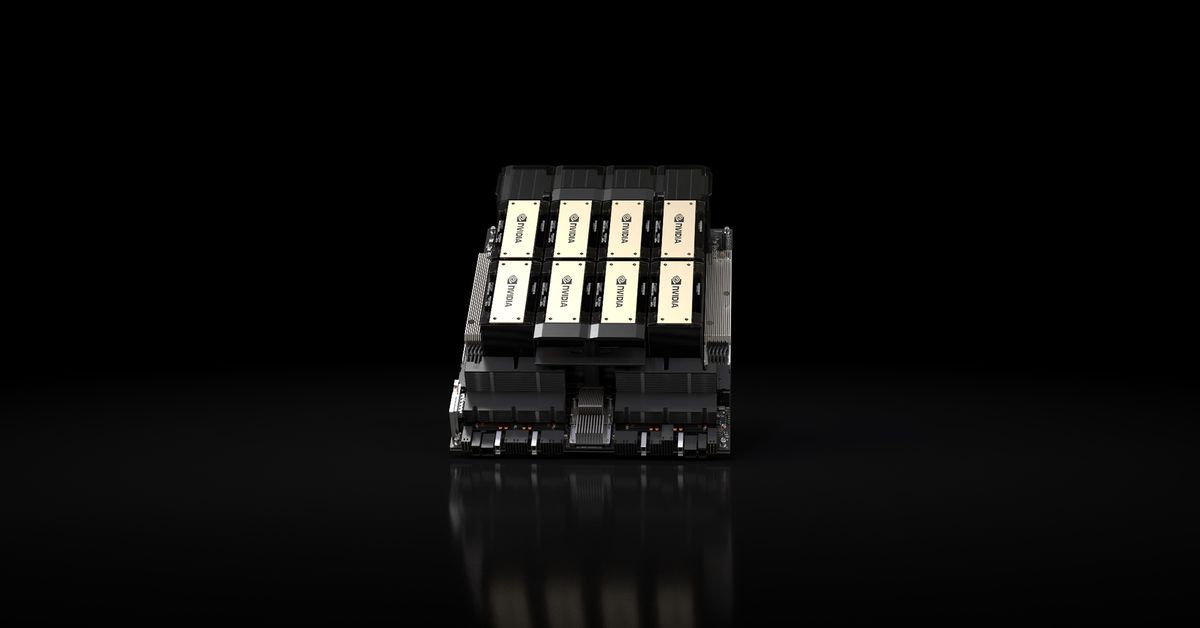

Nvidia is set to unveil its latest high-end chip for AI work, the HGX H200, as an upgrade to the previous H100. The new GPU offers 1.4 times the memory bandwidth and 1.8 times the memory capacity of its predecessor, improving its ability to handle demanding AI tasks.

The key question is whether buyers will actually be able to get the new chips. Nvidia has not said whether the supply constraints that plagued the H100 will persist with the H200. The first H200 chips are scheduled for release in the second quarter of 2024, and Nvidia says it is working with global enterprises and cloud service providers to make them available.

While the H200 shares many similarities with its predecessor, the significant change is in its memory. It is the first GPU to use the faster HBM3e memory standard, which raises memory bandwidth to 4.8 terabytes per second and total memory capacity to 141GB, a notable increase from the H100’s 80GB.
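The "1.4 times" and "1.8 times" figures can be checked with a quick calculation. The sketch below is only illustrative: the H200 figures and the H100 capacity come from the article, while the H100 bandwidth of 3.35 TB/s is an assumption taken from Nvidia's published H100 SXM specification and does not appear in the text above.

```python
# Rough check of the H200-versus-H100 memory ratios.
# H200 figures and H100 capacity are quoted in the article.
# ASSUMPTION: H100 bandwidth of 3.35 TB/s (Nvidia's H100 SXM spec sheet),
# which the article itself does not state.
H200_BANDWIDTH_TBPS = 4.8
H100_BANDWIDTH_TBPS = 3.35  # assumed, not from the article
H200_CAPACITY_GB = 141
H100_CAPACITY_GB = 80


def ratio(new: float, old: float) -> float:
    """Return how many times larger `new` is than `old`."""
    return new / old


bandwidth_ratio = ratio(H200_BANDWIDTH_TBPS, H100_BANDWIDTH_TBPS)
capacity_ratio = ratio(H200_CAPACITY_GB, H100_CAPACITY_GB)

print(f"Memory bandwidth: {bandwidth_ratio:.1f}x the H100")
print(f"Memory capacity:  {capacity_ratio:.1f}x the H100")
```

Under that assumption, both ratios round to the headline figures of 1.4 and 1.8.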

Ian Buck, Nvidia’s VP of high-performance computing products, said the faster, larger HBM memory improves performance on complex AI models and high-performance computing tasks while making more efficient use of the GPU.

The H200 is also designed to be compatible with systems that already support the H100, so cloud providers can adopt it without major changes. Amazon, Google, Microsoft, and Oracle are expected to be among the first cloud platforms to offer the new GPUs in 2024.

Nvidia has not disclosed pricing for the H200, but reports suggest that the preceding H100 sold for between $25,000 and $40,000 each, with thousands of them needed for top-tier operations. The industry is still waiting for further information on pricing and availability from Nvidia.

As demand for AI hardware intensifies, Nvidia’s chips remain highly sought after for their efficiency in processing the massive volumes of data needed to train advanced language and image models. H100 cards have become scarce enough that some businesses are using them as collateral for loans, and companies are forming partnerships to secure access to them.

Looking ahead, Nvidia reportedly aims to at least triple its H100 production in 2024, with plans to manufacture up to 2 million units, up from around 500,000 in 2023. However, the relentless demand for generative AI may still outpace supply growth, especially with the impending launch of the new H200 chip.