University of Chicago researchers recently released Nightshade 1.0, a tool designed to deter unscrupulous developers of machine learning models who train their systems on data without permission. Nightshade is an offensive data poisoning tool and a companion to the defensive tool Glaze, which The Register covered in February last year.

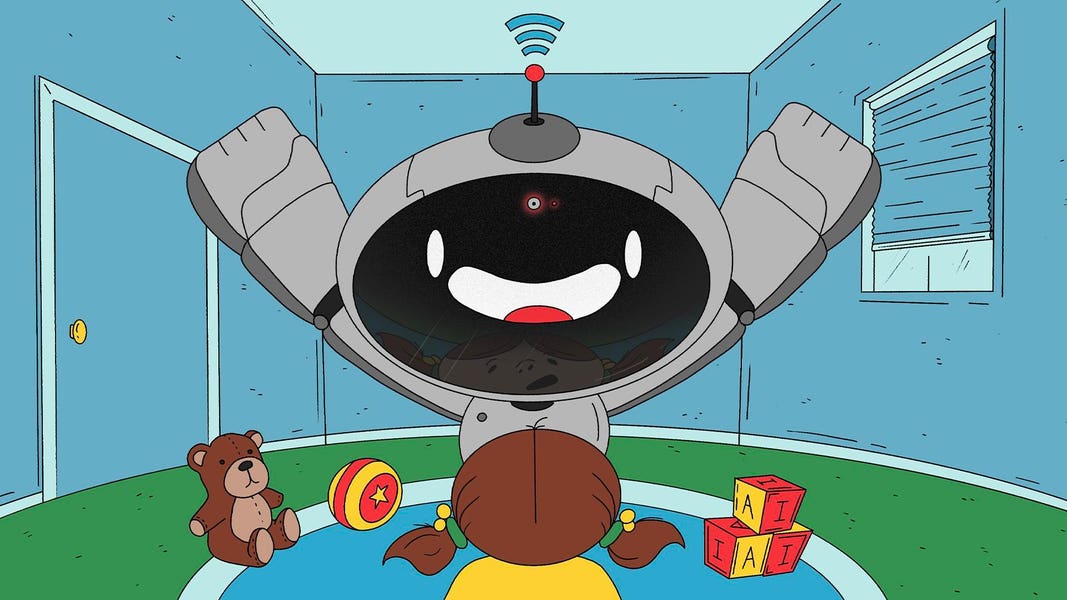

Nightshade poisons image files so that models which ingest them without consent are thrown off. The aim is to push those who train image-oriented models to respect content creators' wishes about how their work is used.

The team behind Nightshade says the tool is computed as a multi-objective optimization that minimizes visible changes to the original image. A human viewer may see an image that looks largely unchanged, while an AI model may interpret the modified image quite differently and misidentify what it depicts.
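The trade-off described here, changing what a model sees while limiting what a person sees, can be illustrated with a toy example. The sketch below is not Nightshade's implementation: it assumes a linear feature extractor (a plain matrix, `extractor`) and a per-pixel bound (`budget`) as stand-ins for a real model and a perceptual constraint, and the function name `poison` is made up for illustration. It uses projected gradient descent to pull an image's features toward those of an unrelated "anchor" image while keeping every pixel close to its original value.

```python
import numpy as np


def poison(image, anchor, extractor, budget=0.05, steps=200, lr=0.1):
    """Toy bounded perturbation (hypothetical helper, not Nightshade's code).

    Moves `image` toward `anchor` in the feature space defined by the
    matrix `extractor`, while keeping each pixel within `budget` of its
    original value and inside the valid range [0, 1].
    """
    delta = np.zeros_like(image)
    target = extractor @ anchor
    for _ in range(steps):
        # Feature-space gap between the perturbed image and the anchor.
        residual = extractor @ (image + delta) - target
        # Gradient of 0.5 * ||residual||^2 with respect to delta.
        grad = extractor.T @ residual
        delta = delta - lr * grad
        # Objective 2: keep the change small (per-pixel budget).
        delta = np.clip(delta, -budget, budget)
        # Keep the perturbed image a valid image.
        delta = np.clip(image + delta, 0.0, 1.0) - image
    return image + delta


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    extractor = rng.normal(size=(8, 64)) / 8.0  # assumed stand-in "model"
    image = rng.uniform(0.2, 0.8, size=64)      # flattened toy image
    anchor = rng.uniform(0.2, 0.8, size=64)     # image of another concept
    poisoned = poison(image, anchor, extractor)
    print("max pixel change:", np.max(np.abs(poisoned - image)))
    print("feature gap before:", np.linalg.norm(extractor @ (image - anchor)))
    print("feature gap after: ", np.linalg.norm(extractor @ (poisoned - anchor)))
```

In this toy setting the pixel change never exceeds the budget, yet the feature gap to the anchor shrinks. A real tool would have to work against a far more complex feature extractor and a perceptual rather than per-pixel measure of visibility.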

Nightshade was developed by University of Chicago doctoral students Shawn Shan, Wenxin Ding, and Josephine Passananti, together with professors Heather Zheng and Ben Zhao, some of whom also worked on Glaze.

First described in a research paper in October 2023, Nightshade is a prompt-specific poisoning attack. It targets the association between a particular label and the images that depict it, so that a model trained on poisoned samples learns a distorted idea of what that label looks like.

People who use a model trained on Nightshade-poisoned images may get unexpected results, such as an image of a different object than the one they asked for, which makes text-to-image models less reliable. That unpredictability gives model developers an incentive to train only on data that has been freely offered.
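A deliberately simplified sketch can show why poisoned training data leads to the wrong object coming back. Everything here is an assumption for illustration: the "model" is just the average feature vector per caption, the two-dimensional `PROTOTYPES` stand in for what a dog and a cat look like, and the helper names are invented. The proportions of poison used in this toy say nothing about how many samples a real attack needs.

```python
import numpy as np

# Assumed stand-ins for what each concept "really" looks like.
PROTOTYPES = {"dog": np.array([1.0, 0.0]), "cat": np.array([0.0, 1.0])}


def build_dataset(n_clean, n_poison):
    """Clean samples plus poison: captioned 'dog' but looking like a cat."""
    clean = [("dog", PROTOTYPES["dog"])] * n_clean
    clean += [("cat", PROTOTYPES["cat"])] * n_clean
    poison = [("dog", PROTOTYPES["cat"])] * n_poison
    return clean + poison


def train_concepts(samples):
    """Toy 'model': the mean feature vector of all samples per caption."""
    grouped = {}
    for caption, feature in samples:
        grouped.setdefault(caption, []).append(feature)
    return {caption: np.mean(feats, axis=0) for caption, feats in grouped.items()}


def looks_like(feature):
    """Name of the clean prototype closest to a generated feature."""
    return min(PROTOTYPES, key=lambda name: np.linalg.norm(feature - PROTOTYPES[name]))


if __name__ == "__main__":
    clean_model = train_concepts(build_dataset(n_clean=10, n_poison=0))
    poisoned_model = train_concepts(build_dataset(n_clean=10, n_poison=30))
    print("clean model, prompt 'dog' ->", looks_like(clean_model["dog"]))
    print("poisoned model, prompt 'dog' ->", looks_like(poisoned_model["dog"]))
    print("poisoned model, prompt 'cat' ->", looks_like(poisoned_model["cat"]))
```

Only the poisoned caption is affected in this toy: asking for a cat still yields a cat, which mirrors the prompt-specific nature of the attack described above.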

The authors present Nightshade as a way for content creators to protect their intellectual property against model trainers who disregard copyright notices and opt-out directives. Respecting the wishes of the people who made the artwork, they note, would also help model makers avoid legal disputes such as the ongoing lawsuit against AI firms over the unauthorized use of artists' work.

The authors acknowledge that Nightshade has limitations, particularly on images with flat colors and smooth backgrounds, where its changes may be more visible. They nonetheless say they are confident the tool can be adapted to counter potential mitigation strategies.

The researchers also recommend using Nightshade and Glaze together to counter unauthorized data use. A unified version of the two tools is in the works to make them simpler to apply.