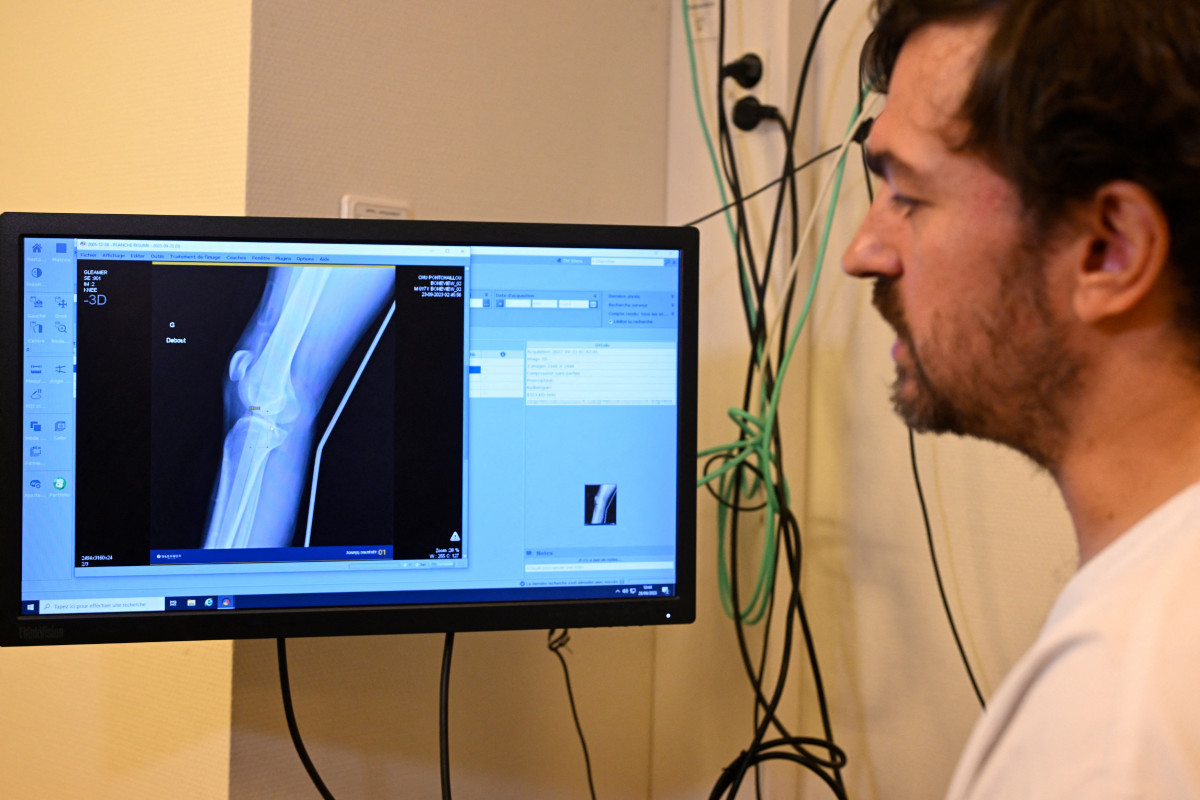

A physician uses artificial intelligence to analyze an X-ray. | Image Source: Damien Meyer/AFP via Getty Images

Doctors are already using unregulated artificial intelligence tools, such as note-taking virtual assistants and predictive software, to help diagnose and treat illnesses.

Government regulators such as the Food and Drug Administration have struggled to keep pace with this fast-moving technology, held back by serious funding and staffing shortfalls. They are unlikely to catch up soon. That turns the adoption of AI in healthcare into a high-stakes experiment: can the private sector drive medical innovation safely without rigorous government oversight?

John Ayers, an associate professor at the University of California San Diego, worries that the technology has raced ahead of the rules meant to govern it. The open question, he suggests, is how to rein it in without catastrophic consequences.

Unlike traditional medical devices or drugs, AI software keeps changing after it is released. The FDA aims to shift gradually from a one-time approval model to ongoing monitoring of AI products, a first for the agency.

Although President Joe Biden has promised swift, coordinated action to make AI safe and effective, regulators such as the FDA lack the resources to oversee a technology that is constantly changing.

Assessing AI is difficult for the FDA because the software adapts over time and can produce different results depending on where and how it is used. That is a sharp departure from the agency's usual approach, under which approved drugs and devices do not need their performance re-evaluated on an ongoing basis.

Beyond rethinking its regulatory approach and adding staff, the FDA is seeking new authority to obtain performance data on AI and to set specific rules for algorithms that go beyond its existing risk-assessment framework for medical products.

The ongoing debate over AI regulation in Congress further muddies the picture, leaving the FDA uncertain about the limits of its jurisdiction and about how regulatory power will be divided among agencies within the Department of Health and Human Services.

To address these gaps, some have proposed public-private "assurance labs," possibly housed at leading universities or health systems, that would validate and monitor AI tools used in healthcare. The goal is to confirm that these tools work effectively and safely across different healthcare settings.

Smaller companies in the industry, however, worry about conflicts of interest if oversight is carried out by organizations that also develop AI systems. Some experts instead argue for rigorous validation within the FDA itself, both to improve patient outcomes and to ensure AI is regulated effectively.

Given past cases in which AI failures went unnoticed, there is growing agreement that regulators must strengthen their oversight to protect patients and preserve trust in healthcare AI.