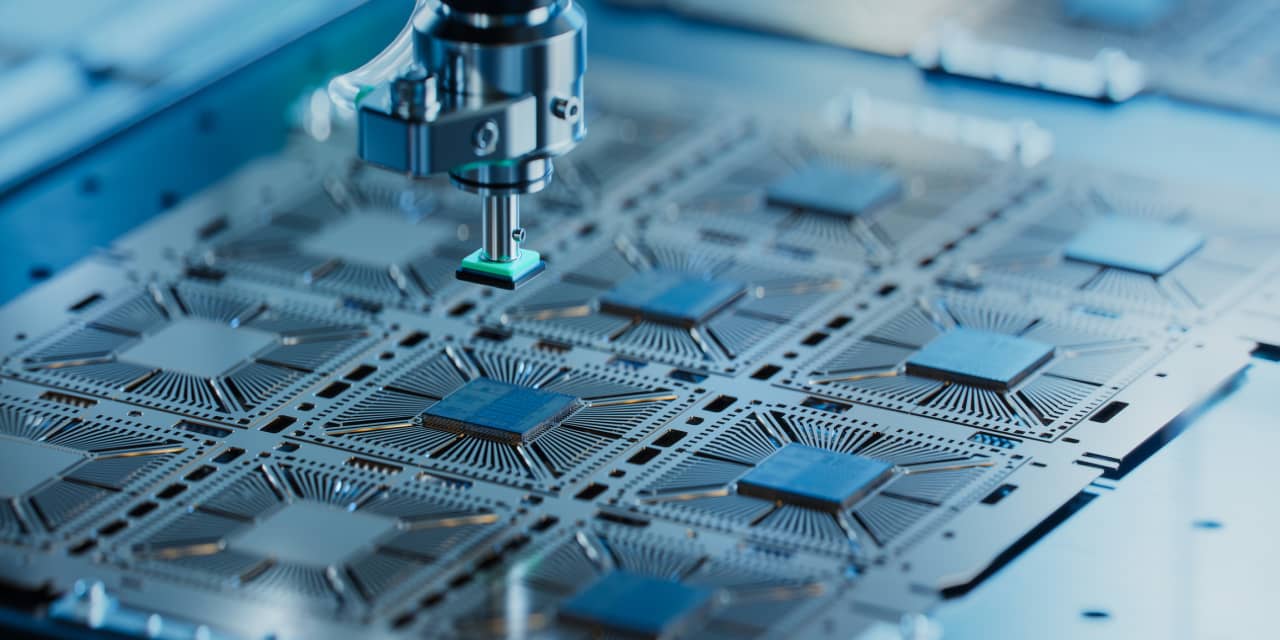

Visitors can see how each model performs on individual benchmarks, along with its overall average score. No model has yet achieved a perfect score of 100 on any benchmark. Recently, Smaug-72B, a new model from the San Francisco-based startup Abacus.AI, set a new milestone by surpassing an average score of 80.
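The text does not say exactly how the overall average is formed. The minimal sketch below assumes it is an unweighted mean of per-benchmark scores on a 0-100 scale. The benchmark names and scores are hypothetical placeholders, not real leaderboard data.

```python
from statistics import mean

# Hypothetical per-benchmark scores on a 0-100 scale (placeholders, not real results).
scores = {
    "benchmark_a": 76.0,
    "benchmark_b": 89.0,
    "benchmark_c": 77.0,
    "benchmark_d": 80.0,
}

def average_score(per_benchmark: dict[str, float]) -> float:
    """Unweighted mean across benchmarks (an assumption about how the average is formed)."""
    return mean(per_benchmark.values())

def has_perfect_score(per_benchmark: dict[str, float]) -> bool:
    """True if any single benchmark reaches the 100-point ceiling."""
    return any(score >= 100.0 for score in per_benchmark.values())

avg = average_score(scores)
print(f"average = {avg:.2f}, above 80: {avg > 80}, perfect on any: {has_perfect_score(scores)}")
```

Under this assumption, a model can cross an average of 80 without reaching 100 on any single benchmark, which matches the situation described above.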

Several large language models (LLMs) now exceed the human baseline on various tests, a phenomenon known as “saturation.” Saturation occurs when models improve to the point where they outgrow a particular benchmark, much as a student outgrows middle-school tests after moving on to high school. It can also occur when models memorize the answers to specific test questions, a problem known as “overfitting.”

Thomas Wolf, co-founder and chief science officer of Hugging Face, explained that saturation does not mean models are better than humans overall. Rather, it signals that models have outgrown the current benchmarks, so new evaluations are needed to measure their capabilities accurately.

Some benchmarks have been around for years, and developers of new LLMs often train their models on these well-known test sets to ensure high scores at launch. To counter this trend, Chatbot Arena, an initiative of the Large Model Systems Organization, uses human judgment to evaluate AI models.

According to Parli, this approach lets researchers move beyond single metrics toward more holistic evaluations of language models.

Chatbot Arena lets visitors pose a question to two anonymous AI models and vote for the better response. Drawing on more than 300,000 human votes, its leaderboard ranks about 60 models. Traffic has surged since the platform launched less than a year ago, and it now receives thousands of votes a day. Demand to add new models is so high, however, that the platform cannot accommodate every request.
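Chatbot Arena has published its rankings as Elo-style ratings computed from these pairwise votes. The sketch below shows a basic online Elo update to illustrate how individual “A beat B” votes can become a ranked leaderboard. It is not LMSYS's exact pipeline: the K-factor of 32, the starting rating of 1000, the model names, and the votes are all hypothetical assumptions.

```python
from collections import defaultdict

K = 32          # assumed K-factor: how far a single vote can move a rating
SCALE = 400     # standard Elo scale: a 400-point gap implies roughly 10:1 expected odds
INITIAL = 1000  # assumed starting rating for every model

def expected_score(r_a: float, r_b: float) -> float:
    """Probability that model A beats model B under the Elo model."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / SCALE))

def update(ratings, model_a, model_b, outcome):
    """Apply one vote. outcome: 1.0 if A won, 0.0 if B won, 0.5 for a tie."""
    e_a = expected_score(ratings[model_a], ratings[model_b])
    delta = K * (outcome - e_a)
    ratings[model_a] += delta  # winner gains what the loser gives up
    ratings[model_b] -= delta

def rate(votes):
    """Process votes in order, starting every model at INITIAL."""
    ratings = defaultdict(lambda: float(INITIAL))
    for model_a, model_b, outcome in votes:
        update(ratings, model_a, model_b, outcome)
    return dict(ratings)

# Hypothetical votes: (model shown as A, model shown as B, outcome for A)
votes = [
    ("model-x", "model-y", 1.0),
    ("model-y", "model-z", 0.5),
    ("model-x", "model-z", 1.0),
    ("model-z", "model-y", 0.0),
]

leaderboard = sorted(rate(votes).items(), key=lambda kv: kv[1], reverse=True)
for name, rating in leaderboard:
    print(f"{name}: {rating:.1f}")
```

Because each update adds to one model exactly what it subtracts from the other, the total of all ratings stays constant. Online Elo also depends on the order in which votes arrive; fitting a Bradley-Terry model over all votes at once is one way to obtain order-independent rankings.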

Wei-Lin Chiang, a doctoral student in computer science at the University of California, Berkeley, and co-creator of Chatbot Arena, said that crowdsourced votes produce results of comparable quality to those from human experts. The team is also developing algorithms to detect and mitigate malicious behavior by anonymous voters.

Benchmarks are important, but researchers acknowledge that they cannot provide a complete evaluation. A model that excels at reasoning benchmarks may still struggle with specific tasks such as analyzing legal documents. That is why researchers stress the value of informal “vibe checks”: trying models in diverse contexts to see how well they engage users, maintain a consistent personality, and retain information.

Despite these shortcomings, researchers say that benchmarks and leaderboards spur innovation, pushing AI developers to keep improving their models to meet ever-rising evaluation standards.