Happy Wednesday! Are you prepared for the season of stomping on lanternflies? Feel free to share squashed bugs and news tips with [email protected].

Next up: The Treasury Department’s crackdown on commercial spyware. But first:

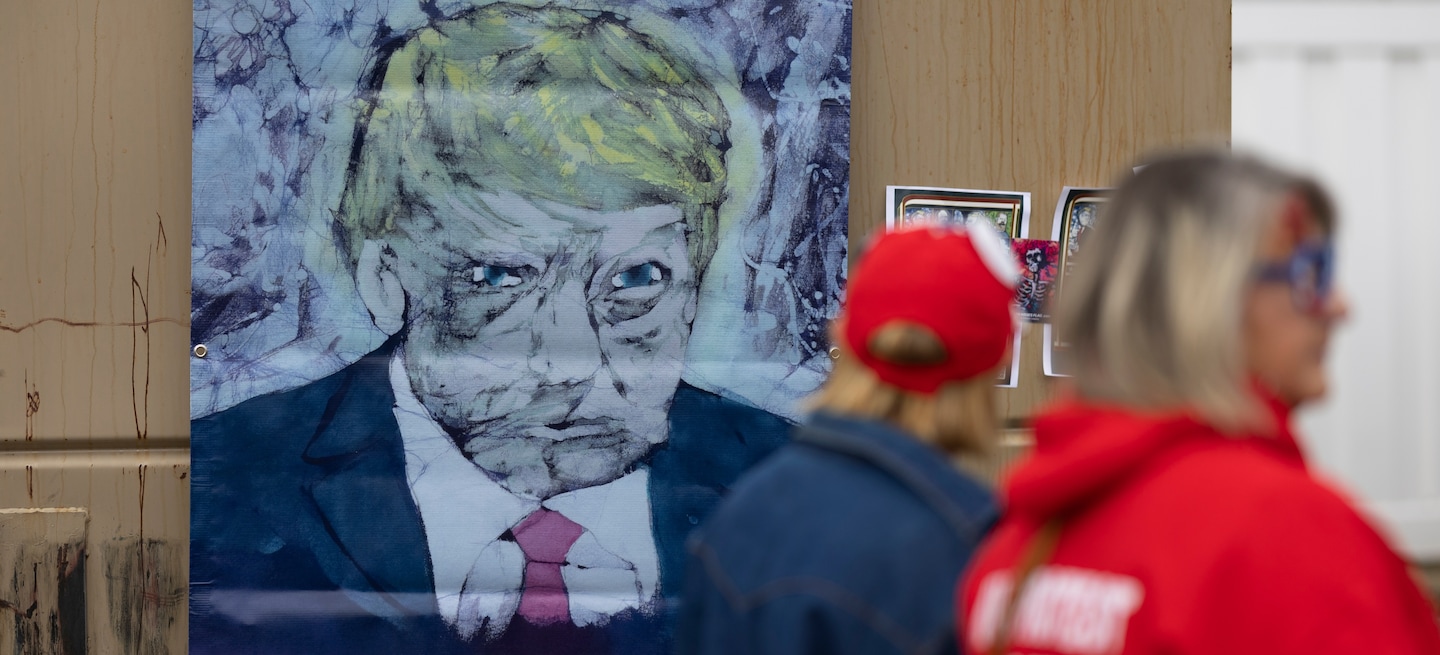

Exploring the Impact of AI and Disinformation Through Fake Images of Trump with Black Voters

A recent BBC investigation shed light on a troubling trend in the 2024 U.S. presidential campaign: fake, AI-generated images showing Donald Trump with Black people. The images circulated on platforms including X and Facebook, allegedly to persuade Black voters to support Trump. However, there is no concrete evidence linking them to the Trump campaign. Some of the images originated from parody accounts and were later reshared without any indication that they were fabricated. How far the images spread, and how many people they fooled, remains unclear.

Mark Kaye, a conservative media personality from Florida, used the AI tool Midjourney to create one such image, showing Trump surrounded by smiling Black women. Kaye's aim was to boost engagement on social media, since visual content is effective at grabbing an audience's attention. Although Kaye has a large Facebook following, the image gained real traction only after the BBC spotlighted it. Independent fact-checkers have since flagged Kaye's Facebook post as misinformation.

The story highlights the complicated interplay among AI image tools, social media platforms, and traditional media during an election cycle. According to Joan Donovan, a media studies professor at Boston University, such campaigns use AI-generated content to attract media coverage even when the subject matter itself isn't newsworthy. Unlike conventional deepfakes, these images are a low-key but potent form of propaganda: they can shape public perception without prompting immediate scrutiny.

The rise of what Donovan calls “cheapfakes” poses a new challenge in the fight against disinformation. Despite their built-in restrictions, AI image tools like Midjourney can still be used to generate misleading content, as a report by the Center for Countering Digital Hate showed. Because these tools make deceptive visuals so easy to produce, they have sharply lowered the barrier to spreading fake information, increasing the pressure on tech platforms to respond effectively to the threat.

The spread of AI-enabled election disinformation has also prompted calls for stricter regulation. Nina Jankowicz of the Centre for Information Resilience suggests that platforms should screen political content for deepfake elements to limit its amplification. While acknowledging the risks posed by deepfakes, Jankowicz stresses the importance of critical thinking in distinguishing fabricated content from reality.

Separately, the Treasury Department’s recent sanctions against commercial spyware, aimed in particular at Intellexa, mark a significant escalation in the effort to combat surveillance abuses. Intellexa, founded by Tal Dilian, was sanctioned over spyware used to target U.S. officials and journalists, a pivotal step in curbing the spread of spyware. The sanctions’ repercussions extend beyond U.S. borders, prompting speculation about how European governments might respond to such abuses.

As AI, disinformation, and cybersecurity become ever more intertwined, the need for robust safeguards and regulation is increasingly clear. Stay informed and stay vigilant as digital threats and misinformation keep evolving.

For more tech updates and insights, subscribe to The Technology 202, and reach out to Cristiano and Will with feedback, tips, or just to say hello. Thank you for being part of our community!