Summary: Researchers have developed an AI-driven robotic sensor that reads braille at 315 words per minute with 87% accuracy, roughly double the speed of most human readers. The sensor, which relies on machine learning algorithms, interprets braille with a sensitivity comparable to that of human touch.

While not primarily intended for assistive purposes, this breakthrough holds promise for enhancing robotic hands and prosthetics. By excelling in braille reading, the sensor underscores the potential of robotics in emulating intricate human tactile abilities.

Key Details:

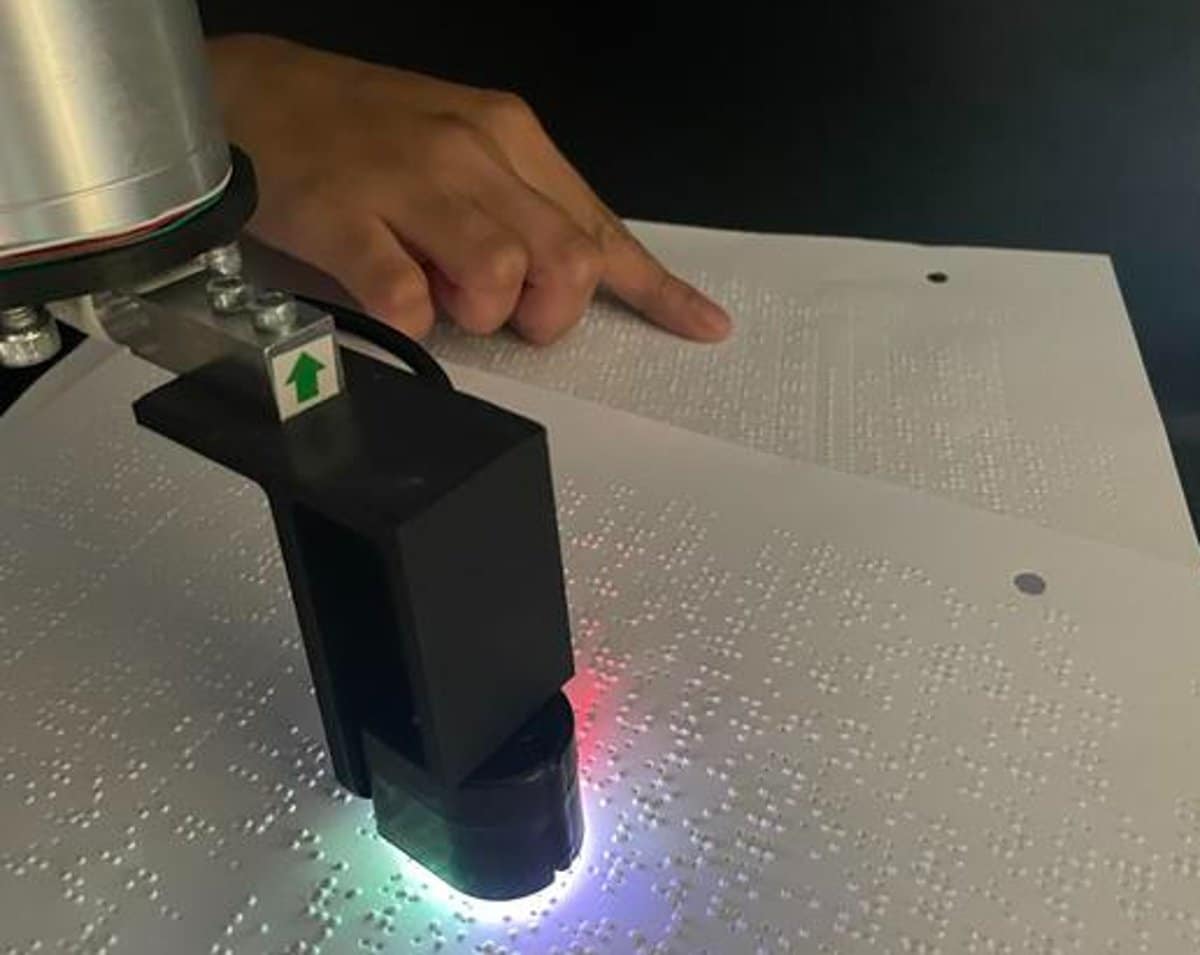

- The robotic braille reader combines AI with a camera-equipped ‘fingertip’ sensor to read at double the speed of most human readers.

- Its exceptional sensitivity positions it as a model for advancing robotic hands and prosthetics.

- The work takes on the engineering challenge of replicating human fingertip sensitivity in robots, opening the way to applications beyond braille reading.

A team of researchers at the University of Cambridge has built a robotic sensor that uses machine learning algorithms to slide quickly along lines of braille text. The robotic reader can decipher braille at 315 words per minute with close to 90% accuracy.

Although the reader was not designed as an assistive technology, the high sensitivity that braille reading demands makes it a good testbed for developing robotic hands or prosthetics with human-like tactile acuity.

The results are reported in the journal IEEE Robotics and Automation Letters.

Human fingertips possess remarkable sensitivity crucial for tactile exploration. They enable us to discern minute textural variations or gauge the appropriate grip strength when handling objects, such as delicately lifting an egg or securely grasping a bowling ball.

Replicating this sensitivity in a robotic hand while keeping it energy efficient is a significant engineering challenge. In Professor Fumiya Iida’s lab at Cambridge’s Department of Engineering, researchers are working on this problem and on other skills that come easily to humans but are difficult for robots.

Parth Potdar, from Cambridge’s Department of Engineering and the paper’s first author, said, “The pliancy of human fingertips contributes to our adeptness in applying precise pressure. Softness is advantageous for robotics, but integrating extensive sensor data while dealing with flexible surfaces poses a complexity.”

Braille is an ideal test for a robotic ‘fingertip’ because reading it demands high sensitivity: the dots in each letter pattern sit very close together. Using an off-the-shelf sensor, the researchers developed a robotic braille reader that more closely mimics human reading behavior.

David Hardman, a co-author also from the Department of Engineering, said, “Existing robotic braille readers operate in a static manner, examining one letter pattern at a time, unlike human reading behavior. Our aim is to devise a more realistic and efficient approach.”

The robotic sensor incorporates a camera within its ‘fingertip’ and employs a fusion of camera data and sensor inputs for reading. Potdar explained, “Addressing motion blur through extensive image processing poses a significant challenge for roboticists in this context.”

To mitigate this challenge, the team devised machine learning algorithms to ‘deblur’ images before character recognition. By training the algorithm on sharp braille images with simulated blur, they enabled the robotic reader to deblur letters and subsequently detect and classify each character using a computer vision model.
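The idea of training on sharp images with simulated blur can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code: the functions `render_braille_cell`, `motion_blur_horizontal`, and `make_training_pair`, the image sizes, and the box-filter blur model are all hypothetical. It renders a tiny synthetic braille cell, smears it along the sliding direction, and returns a (blurred, sharp) pair of the kind a deblurring model could be trained on.

```python
# Hypothetical sketch: build (blurred, sharp) training pairs by simulating
# horizontal motion blur. Sizes and the blur model are illustrative only.

def render_braille_cell(dots, dot_size=2, gap=2):
    """Render a 3x2 braille cell as a grayscale image (list of rows).

    `dots` is a set of dot numbers 1-6 in standard braille numbering:
    1-3 run down the left column, 4-6 down the right column.
    """
    pitch = dot_size + gap
    height = 3 * pitch + gap
    width = 2 * pitch + gap
    img = [[0.0] * width for _ in range(height)]
    for dot in dots:
        col, row = divmod(dot - 1, 3)  # dot 1 -> (0, 0), dot 4 -> (1, 0)
        top = gap + row * pitch
        left = gap + col * pitch
        for y in range(top, top + dot_size):
            for x in range(left, left + dot_size):
                img[y][x] = 1.0
    return img


def motion_blur_horizontal(img, length):
    """Average each pixel with its neighbours along the sliding direction."""
    half = length // 2
    width = len(img[0])
    blurred = []
    for row in img:
        new_row = []
        for x in range(width):
            window = row[max(0, x - half):min(width, x + half + 1)]
            new_row.append(sum(window) / len(window))
        blurred.append(new_row)
    return blurred


def make_training_pair(dots, blur_length=5):
    """Return (blurred input, sharp target) for one braille cell."""
    sharp = render_braille_cell(dots)
    return motion_blur_horizontal(sharp, blur_length), sharp


blurred, sharp = make_training_pair({1, 2, 5})
```

In a real setup the sharp images would come from the sensor's camera rather than a renderer, and the blur length would be varied to cover the range of sliding speeds.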

Once the algorithms were incorporated, the researchers tested the reader by sliding it quickly along rows of braille characters. It read at 315 words per minute with 87% accuracy, about twice as fast as a human braille reader and with comparable accuracy.

Hardman expressed surprise at the algorithm’s accuracy in braille reading despite being trained on artificially blurred images. He emphasized the achieved balance between speed and accuracy, akin to proficient human readers.

Potdar emphasized that braille reading speed is a useful measure of the dynamic performance of tactile sensing systems, and that the findings could apply beyond braille, for example to detecting surface textures or slippage in robotic interactions.

In the future, the researchers aim to scale this technology to the dimensions of a humanoid hand or skin.

Funding: The research received partial support from the Samsung Global Research Outreach Program.

Abstract:

High-Speed Tactile Braille Reading via Biomimetic Sliding Interactions

While conventional braille-reading robotic sensors adopt a discrete letter-by-letter reading strategy, a biomimetic sliding approach offers higher speed potential.

Our proposed pipeline for continuous braille reading involves dynamic frame collection using a vision-based tactile sensor, motion-blur removal by an autoencoder, braille character classification by a lightweight YOLO v8 model, and error minimization in the predicted string through data-driven consolidation.

We demonstrate a state-of-the-art speed of 315 words per minute at 87.5% accuracy, more than twice human braille reading speed.

Although demonstrated on braille, this biomimetic sliding technique holds promise for richer dynamic spatial and temporal detection of surface textures, though challenges remain for its future development.
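The abstract does not say how the final consolidation step works, so the following is only one plausible way to picture it, not the paper's method. While the sensor slides, the same character is detected in several overlapping frames, sometimes with conflicting labels. The hypothetical `consolidate` function below assumes each detection carries a position along the line, bins detections by the nearest cell, and keeps the label with the highest summed confidence. The 6.0 mm cell pitch is an illustrative assumption.

```python
from collections import defaultdict


def consolidate(detections, cell_pitch=6.0):
    """Merge per-frame detections into one string (hypothetical scheme).

    detections: iterable of (position_mm, char, confidence) tuples, where
    position_mm is the detected character's position along the line.
    cell_pitch: assumed spacing between braille cells in millimetres.
    """
    votes = defaultdict(lambda: defaultdict(float))
    for position, char, confidence in detections:
        cell_index = round(position / cell_pitch)  # nearest cell slot
        votes[cell_index][char] += confidence      # confidence-weighted vote
    return "".join(
        max(votes[i], key=votes[i].get) for i in sorted(votes)
    )


# Overlapping, partly conflicting detections from consecutive frames.
frames = [
    (0.1, "h", 0.9), (0.3, "h", 0.8),
    (5.8, "e", 0.7), (6.2, "c", 0.4), (6.1, "e", 0.9),
    (12.0, "l", 0.9),
    (18.1, "l", 0.8), (17.9, "l", 0.6),
    (24.2, "o", 0.9),
]
text = consolidate(frames)
```

Binning by position rather than simply collapsing repeated labels keeps doubled letters, such as the two l's here, as separate characters.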