The anonymous producer Ghostwriter, seen here in February at a Grammy party at the Waldorf Astoria Beverly Hills, created a stir in 2023 with the single “Heart on My Sleeve,” which used deepfake technology to recreate the voices and styles of Drake and The Weeknd without either artist’s involvement or permission.

For as long as people have been making money from music, there have been disagreements to hash out — over who gets to claim credit for what, where music can be used and shared, how revenue should be split and, occasionally, what ownership even means. Each time the industry settles on a set of rules to account for those ambiguities, nothing disrupts the status quo all over again quite like new advancements in technology. When radio signals sprang up around the nation, competitors proliferated just across the border. When hip-hop’s early architects created an entirely new sonic culture, their building blocks were samples of older music, sourced without permission. When peer-to-peer file-sharing ran rampant through college dorm rooms, it united an internet-connected youth audience even as its legality remained an open question. “This industry always seems to be the canary in the coal mine,” observed Mitch Glazier, the head of the Recording Industry Association of America, in an interview. “Both in adapting to new technology, but also when it comes to abuse and people taking what artists do.”

In the music world as in other creative industries, generative AI — a class of digital tools that can create new content based on what they “learn” from existing media — is the latest tech revolution to rock the boat. Though it’s resurfacing some familiar issues, plenty about it is genuinely new. People are already using AI models to analyze artists’ signature songwriting styles, vocal sounds or production aesthetics, and create new work that mirrors their old stuff without their say. For performers who assiduously curate their online images and understand branding as the coin of the realm, the threat of these tools proliferating unchecked is something akin to identity theft. And for those anxious that the inherent value of music has already sunk too far in the public eye, it’s doubly worrying that the underlying message of AI’s powers of mimicry is, “It’s pretty easy to do what you do, sound how you sound, make what you make,” as Ezra Klein put it in a recent podcast episode. With no comprehensive system in place to dictate how these tools can and can’t be used, the regulatory arm of the music industry is finding itself in an extended game of Whac-a-Mole.

Last month, the nation’s first law aimed at tamping down abuses of AI in music was added to the books in Tennessee. Announced by Governor Bill Lee in January at a historic studio on Nashville’s Music Row, the ELVIS Act — which stands for Ensuring Likeness Voice and Image Security, and nods to an earlier legal reckoning with entities other than the Elvis Presley estate slapping the King’s name and face on things — made its way through the Tennessee General Assembly with bipartisan support. Along the way, there were speeches from the bill’s co-sponsors and performers and songwriters who call the state home, many of them representing the country and contemporary Christian music industries, insisting on the importance of protecting artists from their voices being cloned and words being put in their mouths. On a Thursday in March, Governor Lee signed the measure at a downtown Nashville honky-tonk and fist-bumped Luke Bryan, who was among the supportive music celebrities on hand.

To understand the stakes of this issue and the legal tangle that surrounds it, NPR Music sought the expertise of a few people who have observed the passage of the ELVIS Act, and the evolving discourse around generative AI, from different vantage points. They include the RIAA’s Glazier, a seasoned advocate for the recording industry; Joseph Fishman, a professor at Vanderbilt Law School who instructs future attorneys in the nuances of intellectual property law; Mary Bragg, an independent singer-songwriter and producer with a longtime ethos of self-sufficiency; and the three knowledgeable founders of ViNIL, a Nashville-based tech startup directing problem-solving efforts at the uncertainty stoked by AI. Drawing on their insights, we’ve unpacked four pressing questions about what’s happening with music and machine learning.

1) What exactly does generative AI do, and how has it intersected with music so far?

Talk of generative AI can easily slip into dystopian territory, and it’s no wonder: For more than half a century, we’ve been watching artificial intelligence take over and wipe out any humans standing in its way in sci-fi epics like 2001: A Space Odyssey, The Matrix and the Terminator franchise.

The reality is a little more pedestrian: The machine learning models with us now are taking on tasks that we’re accustomed to seeing performed by humans. They aren’t actually autonomous, or creative, for that matter. Their developers input vast quantities of human-made intellectual and artistic output — books, articles, photos, graphic designs, musical compositions, audio and video recordings — so that the AI models can taxonomize all of that existing material, then recognize and replicate its patterns.

AI is already being put to use by music-makers in plenty of ways, many of them generally viewed as benign to neutral. The living Beatles employed it to salvage John Lennon’s vocals from a muddy, lo-fi 1970s recording, so that they could add their own parts and complete the song “Now and Then.” Nashville singer-songwriter Mary Bragg reported that some of her professional peers treat ChatGPT like a tool for overcoming writer’s block. “Of course, it sort of became a larger topic around town,” she told me, specifying that she hasn’t yet employed it that way herself.

What she does do, though, is let her recording software show her shortcuts to evening out audio levels on her song demos. “You press one single button and it listens to the information that you feed it,” she explained at her home studio in a Nashville suburb, “and then it thinks for you about what it thinks you should do. Oftentimes it’s a pretty darn good suggestion. It doesn’t mean you should always take that suggestion, but it’s a starting point.” Those demos are meant for pitching her songs, not for public consumption, and Bragg made clear that she still enlists human mastering engineers to finalize music she’s going to release out into the world.

Early experiments with the musical potential of generative AI were received largely as harmless and fascinating geekery. Take “Daddy’s Car,” a Beatles-esque bop from 2016 whose music (though not its lyrics) was composed by the AI program Flow Machines. For more variety, try the minute-long, garbled genre exercises dubbed “Jukebox Samples” that OpenAI churned out four years later. These all felt like facsimiles made from a great distance, absurdly generalized and subtly distorted surveys of the oeuvres of Céline Dion, Frank Sinatra, Ella Fitzgerald or Simon & Garfunkel. In case it wasn’t clear whose music influenced each of those OpenAI tracks, they were titled, for instance, “Country, in the style of Alan Jackson.” The explicit citing of those artists as source material forecast copyright issues soon to come.

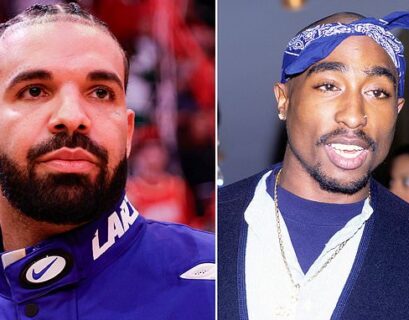

The specter of AI-generated music has grown more ominous, however, as the attempts have landed nearer their soundalike targets — especially when it comes to the mimicry or cloning of famous voices. Deepfake technology got a boost in visibility after someone posted audio on YouTube that sounded very nearly like Jay-Z — down to his smooth, imperious flow — reciting Shakespeare; ditto when the brooding, petulant banger “Heart on My Sleeve” surfaced on TikTok, sounding like a collab between Drake and The Weeknd, though it was quickly confirmed that neither was involved. Things got real enough that the record labels behind all three of those stars demanded the stuff be taken down. (In an ironic twist, Drake recently dropped his own AI stunt, harnessing the deepfaked voices of 2Pac and Snoop Dogg for a diss track in his ongoing feud with Kendrick Lamar.)

2) Who are the people calling for protections against AI in music, and what are they worried about?

From the outside, it can seem like the debate over AI comes down to choosing sides; you’re either for or against it. But it’s not that simple. Even those working on generative AI are saying that its power will inevitably increase exponentially. Simply avoiding it probably isn’t an option in a field as reliant on technology as music.

There is, however, a divide between enthusiastic early adopters and those inclined to proceed with caution. Grimes, famously a tech nonconformist, went all in on permitting fans to use and manipulate her voice in AI-aided music and split any profits. Ghostwriter, the anonymous writer-producer behind “Heart on My Sleeve,” and his manager say they envisioned that deepfake as a demonstration of potential opportunity for music-makers who work behind the scenes.

Perhaps among the better indicators of the current ambivalence in the industry are the moves made so far by the world’s biggest record label, Universal Music Group. Its CEO, Lucian Grainge, optimistically and proactively endorsed the Music AI Incubator, a collaboration with YouTube to explore what music-makers on its own roster could create with the assistance of machine learning. At the same time, Grainge and Universal have made a strong appeal for regulation. They’re not alone in their concern: Joining the cause in various ways are record labels and publishing companies great and small, individual performers, producers and songwriters operating on every conceivable scale, and the trade organizations that represent them. Put more simply, it’s the people and groups who benefit from protecting the copyrights they hold and from ensuring that the distinctness of the voices they’re invested in doesn’t get diluted. “I was born with an instrument that I love to use,” was how Bragg put it to me. “And then I went and trained. My voice is the thing that makes me special.”

Lawmakers and supporters pose at the signing of the ELVIS Act at Robert’s Western World in Nashville on March 21, 2024. Left to right: Representative William Lamberth, musician Luke Bryan, Governor Bill Lee, musician Chris Janson, RIAA head Mitch Glazier and Senator Jack Johnson. Jason Kempin/Getty Images for Human Artistry

The RIAA has played a leading advocacy role thus far, one that the organization’s chairman and CEO Mitch Glazier told me accelerated when “Heart on My Sleeve” dropped last spring and calls and emails poured in from industry execs, urgently inquiring about legal provisions against deepfakes. “That’s when we decided that we had to get together with the rest of the industry,” he explained. The RIAA helped launch a special coalition, the Human Artistry Campaign, with partners from across the entertainment industry, and got down to lobbying.

Growing awareness of the fact that many AI models are trained by scraping the internet and ingesting copyrighted works to use as templates for new content prompted litigation. A pile of lawsuits were filed against OpenAI by fiction and nonfiction authors and media companies, including The New York Times. A group of visual artists sued companies behind AI image generators. And in the music realm, three publishing companies, Universal, Concord and ABKCO, filed against Anthropic, the company behind the AI model Claude, last fall for copyright infringement. One widely cited piece of evidence that Claude might be utilizing their catalogs of compositions: When prompted to write a song “about the death of Buddy Holly,” the AI ripped entire lines from “American Pie,” the folk-rock classic famously inspired by Holly’s death and controlled by Universal.

“I think no matter what kind of content you produce, there’s a strong belief that you’re not allowed to copy it, create an AI model from it, and then produce new output that competes with the original that you trained on,” Glazier says.

That AI is enabling the cloning of an artist’s — or anyone’s — voice and likeness is a separate issue. It’s on this other front that the RIAA and other industry stakeholders are pursuing legislation on state and federal levels.

3) Why is AI music so hard to regulate?

When Roc Nation demanded the Shakespearean clip of Jay-Z be taken down, arguing that it “unlawfully uses an AI to impersonate our client’s voice,” the wording of its argument got some attention of its own: It was a novel approach to a practice that wasn’t already clearly covered under U.S. law. The closest thing to it is the body of law around “publicity rights.”

“Every state has some version of it,” says law professor Joseph Fishman, seated behind the desk of his on-campus office at Vanderbilt. “There are differences around the margins state to state. But it is basically a way for individuals to control how their identity is used, usually in commercial contexts. So, think: advertisers trying to use your identity, particularly if you’re a celebrity, to sell cars or potato chips. If you have not given authorization to a company to plaster your face in an ad saying ‘this person approves’ of whatever the product is, publicity rights give you an ability to prevent that from happening.”

Two states with a sizable entertainment industry presence, New York and California, added voice protections to their statutes after Bette Midler and Tom Waits went to court to fight advertising campaigns whose singers were meant to sound like them (both had declined to do the ads themselves). But Tennessee’s ELVIS Act is the first measure in the nation aimed not at commercial or promotional uses, but at protecting performers’ voices and likenesses specifically from abuses enabled by AI. Or as Glazier described it, “protecting an artist’s soul.”

Tennessee lawmakers didn’t have templates in any other states to look to. “Writing legislation is hard,” Fishman says, “especially when you don’t have others’ mistakes to learn from. The goal should be not only to write the language in a way that covers all the stuff you want to cover, but also to exclude all the stuff that you don’t want to cover.”

What’s more, new efforts to prevent the exploitation of performers’ voices could inadvertently affect more accepted forms of imitation, such as cover bands and biopics. As the ELVIS Act made its way through the Tennessee General Assembly, the Motion Picture Association raised a concern that its language was too broad. “What the [MPA] was pointing out, I think absolutely correctly,” Fishman says, “was this would also include any kind of film that tries to depict real people in physically accurate ways, where somebody really does sound like the person they’re trying to sound like or really does look like the person they are trying to look like. That seems to be swept under this as well.”

The founders of ViNIL (from left: Sada Garba, Jeremy Brook and Charles Alexander) created the Nashville startup to monitor and offer resources around the escalating use of AI and deepfake technology. Jewly Hight

And of course, getting a law on the books in one state, even a state that’s home to a major industry hub, isn’t a complete solution: The music business crosses state lines and national borders. “It’s very difficult to have a patchwork of state laws applicable to a global, borderless system,” Glazier says. Right now, there’s a great amount of interest in getting federal legislation — like the NO AI FRAUD Act, effectively a nationalized version of publicity rights law — in place.

Some industry innovators also see a need for thinking beyond regulation. “Even when laws pass and even when the laws are strong,” notes Jeremy Brook, an entertainment industry attorney who helped launch the startup ViNIL, “that doesn’t mean they’re easy to enforce.”

Brook and his co-founders, computer coder Sada Garba and digital strategist Charles Alexander, have set out to offer content creators — those who use AI and those who don’t — as well as companies seeking to license their work, “a legal way” to handle their transactions. At South by Southwest last month, they rolled out an interface that allows individuals to license their image or voice to companies, then marks the agreed-upon end product with a trackable digital stamp certifying its legitimacy.

4) New technology has upended the music industry before. Can we take any lessons from history?

The music industry has forever found itself in catch-up mode when new innovations disrupt its business models. Both the rise of sampling incubated by hip-hop and the illegal downloading spree powered by Napster were met with lawsuits, the latter of which accomplished the aim of getting the file-sharing platform shut down. Eventually came specific guidance for how samples should be cleared, agreements with platforms designed to monetize digital music (like the iTunes store and Spotify), and updates to the regulation of payments in the streaming era — but it all took time and plenty of compromise. After hashing all of that out, Glazier says, music executives wanted to ensure “that we didn’t have a repeat of the past.”

The tone of the advocacy for restricting AI abuses feels a little more circumspect than the complaints against Napster. For one thing, people in the industry aren’t talking like machine learning can, or should, be shut down. “You do want to be able to use generative AI and AI technology for good purposes,” Glazier says. “You don’t want to limit the potential of what this technology can do for any industry. You want to encourage responsibility.”

There’s also a need for a nuanced understanding of ownership. Popular music has been the scene for endless cases of cultural theft, from the great whitewashing of rock and roll up to the virtual star FN Meka, a Black-presenting “AI-powered robot rapper” conceived by white creators, signed by a label and met with extreme backlash in 2022. Just a few weeks ago, a nearly real-sounding Delta blues track caused its own controversy: A simple prompt had gotten an AI music generator to spit out a song that simulated the grief and grit of a Black bluesman in the Jim Crow South. Coming on the heels of “Heart on My Sleeve,” the most notorious musical deepfake to date (which paired the vocal likenesses of two Black men, Drake and The Weeknd), it was a reminder that the ethical questions circling the use of AI are many, some of them all too familiar.

The online world is a place where music-makers carve out vanishingly small profit margins from the streaming of their own music. As an example of the lack of agency many artists at her level feel, Bragg pointed out a particularly vexing kind of streaming fraud that’s cropped up recently, in which scammers reupload another artist’s work under new names and titles and collect the royalties for themselves. Other types of fraud have been prosecuted or met with crackdowns that, in certain cases, inadvertently penalize artists who aren’t even aware that their streaming numbers have been artificially inflated by bots. Just as it’s hard to imagine musicians pulling their music from streaming platforms in order to protect it from these schemes, the immediate options can feel few and bleak for artists newly entered in a surreal competition with themselves, through software that can clone their sounds and styles without permission. All of this is playing out in a reality without precedent. “There is a problem that has never existed in the world before,” says ViNIL’s Brook, “which is that we can no longer be sure that the face we’re seeing and the voice we’re hearing is actually authorized by the person it belongs to.”

For Bragg, the most startling use of AI she’s witnessed wasn’t about stealing someone’s voice, but giving it back. A friend sent her the audio of a speech on climate change that scientist Bill Weihl was preparing to deliver at a conference. Weihl had lost the ability to speak due to ALS — and yet, he was able to address an audience sounding like his old self with the aid of ElevenLabs, one of many companies testing AI as a means of helping people with similar disabilities communicate. Weihl and a collaborator fed three hours of old recordings of him into the AI model, then refined the clone by choosing what inflections and phrasing sounded just right.

“When I heard that speech, I was both inspired and also pretty freaked out,” Bragg recalled. “That’s, like, my biggest fear in life, either losing my hearing or losing the ability to sing.”

That is, in a nutshell, the profoundly destabilizing experience of encountering machine learning’s rapidly expanding potential, its promise of options the music business — and the rest of the world — have never had. It’s there to do things we can’t or don’t want to have to do for ourselves. The effects could be empowering, catastrophic or, more likely, both. And attempting to ignore the presence of generative AI won’t insulate us from its powers. More than ever before, those who make, market and engage with music will need to continuously and conscientiously adapt.