As technology becomes more intuitive and responsive, Emotional AI (Artificial Intelligence) APIs are opening a new path. These tools are more than software interfaces: they aim to interpret human emotions and behaviours in ways that were once the stuff of science fiction. Companies such as Hume AI, Entropik Tech and Affectiva are at the forefront of Emotional AI, combining psychology with machine learning to infer people's emotional states. Entropik, for example, analyses consumers' cognitive and emotional responses to give brands deeper insight into consumer behaviour. Applications of Emotional AI extend beyond marketing into healthcare and other sectors: NuraLogix, for instance, uses a camera-based technique that analyses facial blood flow for emotional assessment, which could have significant implications for mental health and diagnostics. At the same time, the technology raises important ethical and privacy questions, particularly around consent and data security, which underline the need to use Emotional AI responsibly.

The Complex Ethos of Emotional AI APIs: Navigating Innovation and Responsibility

Any look at Emotional AI APIs must also consider recent industry shifts that show how this landscape is changing. Notably, Microsoft, along with companies such as HireVue and Nielsen, has decided to discontinue facial coding APIs. This move underscores the complex ethical and privacy considerations surrounding emotional AI.

Microsoft’s decision, announced in June 2022, to retire the emotion recognition capability of its Face API was rooted in several ethical and practical considerations. It reflects a broader shift in how the tech industry approaches sensitive artificial intelligence (AI) technologies. In a blog post, Microsoft outlined the measures it would take to ensure that its Face API is developed and used responsibly.

“A core priority for the Cognitive Services team is to ensure its AI technology, including facial recognition, is developed and used responsibly. While we have adopted six essential principles to guide our work in AI more broadly, we recognized early on that the unique risks and opportunities posed by facial recognition technology necessitate its own set of guiding principles. To strengthen our commitment to these principles and set up a stronger foundation for the future, Microsoft is announcing meaningful updates to its Responsible AI Standard, the internal playbook that guides our AI product development and deployment. As part of aligning our products to this new Standard, we have updated our approach to facial recognition including adding a new Limited Access policy, removing AI classifiers of sensitive attributes, and bolstering our investments in fairness and transparency.

Facial detection capabilities (including detecting blur, exposure, glasses, head pose, landmarks, noise, occlusion, and facial bounding box) will remain generally available and do not require an application. In another change, we will retire facial analysis capabilities that purport to infer emotional states and identity attributes such as gender, age, smile, facial hair, hair, and makeup.

We collaborated with internal and external researchers to understand the limitations and potential benefits of this technology and navigate the tradeoffs. In the case of emotion classification specifically, these efforts raised important questions about privacy, the lack of consensus on a definition of “emotions,” and the inability to generalize the linkage between facial expression and emotional state across use cases, regions, and demographics. API access to capabilities that predict sensitive attributes also opens up a wide range of ways they can be misused—including subjecting people to stereotyping, discrimination, or unfair denial of services.” said Sarah Bird, the product manager in charge at Microsoft.

The Implications of Discontinuing Facial Coding APIs

These discontinuations signal growing awareness of, and concern about, the implications of emotion recognition technology. They raise crucial questions about the accuracy, ethical use, and potential biases of AI systems designed to interpret human emotions. The decision by these firms to step back from facial coding APIs could mark a turning point in how the tech industry approaches the development and deployment of emotional AI.

Balancing Innovation with Ethical Responsibility

As the sector continues to evolve, it becomes increasingly important for companies involved in Emotional AI to balance innovation with ethical responsibility. This involves ensuring privacy, addressing biases in AI models, and securing informed consent from users. The steps taken by Microsoft, HireVue, and Nielsen highlight the need for a more cautious and considered approach towards the development of technologies that interact closely with human emotions.

Looking Ahead: Emotional AI APIs

Despite these challenges, the potential of Emotional AI remains vast. As the technology matures, it could lead to advancements in personalizing customer experiences, enhancing mental health treatments, and creating more empathetic human-computer interactions. However, the path forward must be navigated with an emphasis on ethical practices, transparency, and respect for user privacy.

The journey of Emotional AI APIs is as much about technological advancement as it is about ethical introspection and responsibility. As the industry responds to these challenges, the future of Emotional AI continues to be a compelling narrative of innovation intertwined with the human element.

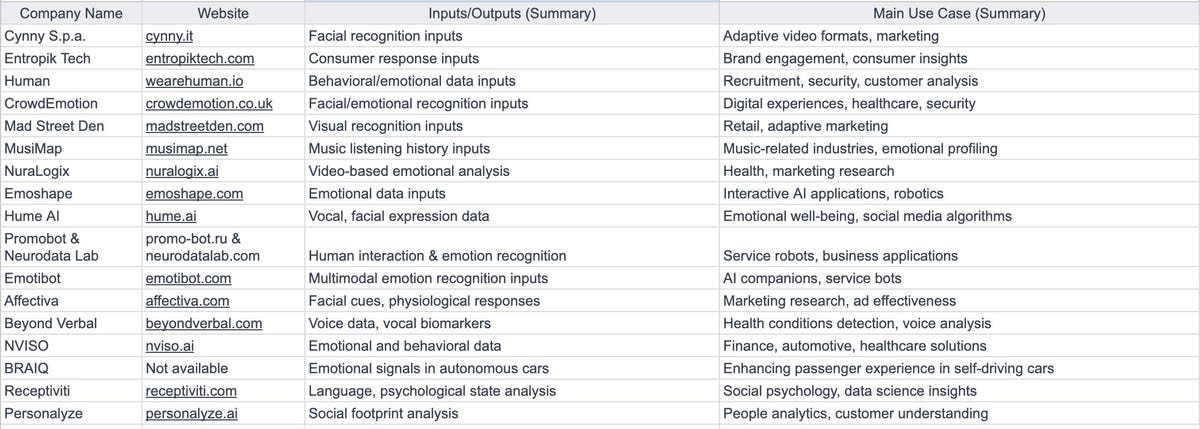

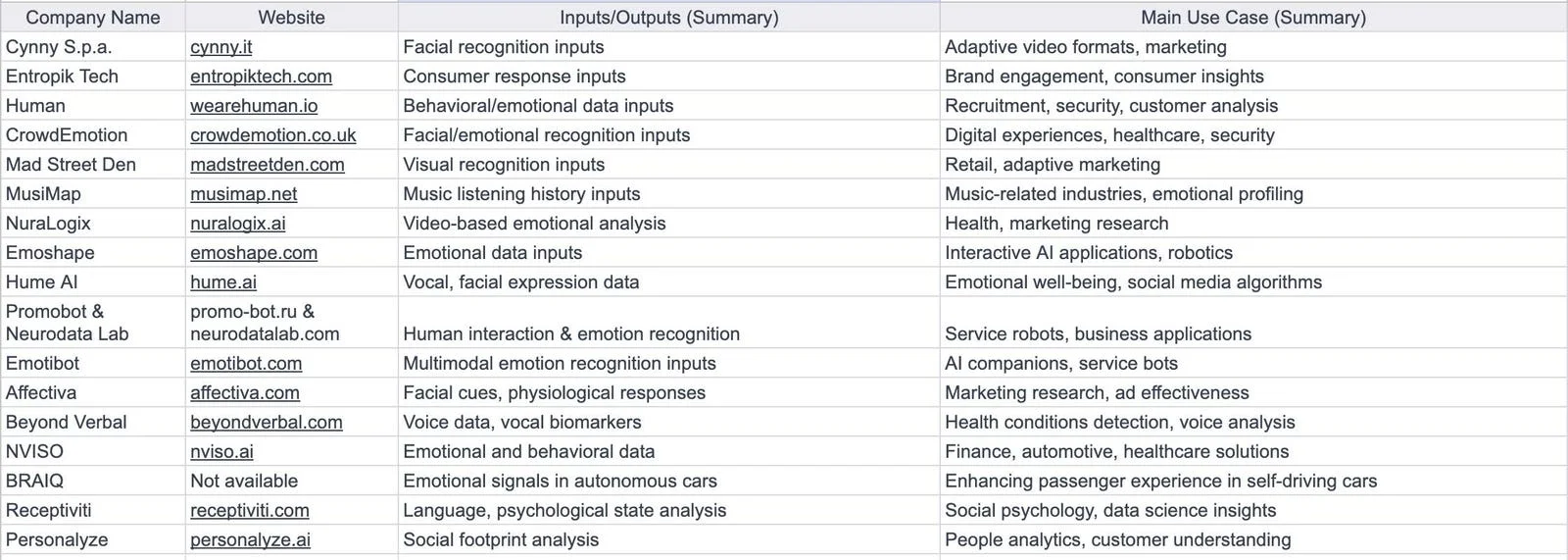

List of Emotional AI API companies

Emotional AI for optimising investment returns

Emotional AI in investment is an emerging field that integrates the analysis of human emotions and behaviors to enhance financial decision-making. Although few tools are specifically branded as “Emotional AI” for investment purposes, several approaches and methodologies apply emotional intelligence to improve investment outcomes.

One approach to incorporating emotional intelligence in investments is Financial Emotional Intelligence (FEI). FEI involves adding emotional details to trade records, allowing investors to reflect on how their emotions correlate with their decisions. The method emphasizes being aware of one’s emotional state while making investment decisions and learning from past emotional influences to improve future ones. Investors can begin by documenting their emotional state at the time of each trade and cross-referencing it with patterns in their decisions, with the aim of minimizing the impact of negative emotional biases on their investment strategies.
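To make the trade-journal idea concrete, here is a minimal Python sketch of an emotion-annotated trade log that groups trades by the emotion recorded at entry and compares their average returns. The `JournalEntry` structure, the emotion labels and the returns are all hypothetical, invented purely for illustration; this is not a standard FEI format.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass
class JournalEntry:
    """One trade in a hypothetical emotion-annotated trade journal."""
    ticker: str
    emotion: str       # self-reported label recorded at decision time, e.g. "calm"
    return_pct: float  # realised return of the trade, in percent


def returns_by_emotion(journal):
    """Group trades by the emotion logged at entry; return {emotion: (count, mean return)}."""
    groups = defaultdict(list)
    for entry in journal:
        groups[entry.emotion].append(entry.return_pct)
    return {emotion: (len(r), mean(r)) for emotion, r in groups.items()}


# Made-up example entries, for illustration only.
journal = [
    JournalEntry("AAA", "calm", 2.0),
    JournalEntry("BBB", "fearful", -1.5),
    JournalEntry("CCC", "calm", 1.0),
    JournalEntry("DDD", "euphoric", -3.0),
    JournalEntry("EEE", "fearful", -0.5),
]

for emotion, (count, avg) in sorted(returns_by_emotion(journal).items()):
    print(f"{emotion:<9} trades={count} mean_return={avg:+.2f}%")
```

With real records, a consistently worse average for one emotional state, backed by enough trades to be meaningful, is the kind of pattern FEI asks investors to notice.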

Traditional financial AI tools focus on quantitative data, but there is growing interest in integrating emotional data for more nuanced insights. For instance, AI tools can be trained to recognize and interpret emotional cues in voice or text, adding another layer of analysis to financial decision-making. Applied to customer interactions in call centers, such tools can identify emotional cues that may indicate broader market sentiment or individual customer preferences.
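As a deliberately simple illustration of detecting emotional cues in text, the Python sketch below counts words from a small hand-made emotion lexicon in a call transcript. The lexicon and the transcript are hypothetical; production systems typically rely on trained language models rather than fixed word lists, so treat this only as a sketch of the idea.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny lexicon mapping words to emotion labels.
EMOTION_LEXICON = {
    "worried": "fear", "nervous": "fear", "panic": "fear",
    "angry": "anger", "frustrated": "anger", "unacceptable": "anger",
    "great": "joy", "happy": "joy", "excited": "joy",
}


def emotion_counts(text):
    """Count lexicon hits per emotion label in a piece of text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    return Counter(EMOTION_LEXICON[t] for t in tokens if t in EMOTION_LEXICON)


transcript = "I'm really worried about my portfolio, and frankly frustrated. Panic everywhere."
print(emotion_counts(transcript).most_common())
```

A word-count approach like this misses negation and context ("not worried"), which is one reason real emotion-detection tools use learned models.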

There is also growing interest in using AI to analyze Environmental, Social, and Governance (ESG) performance. Tools like IFC’s MALENA (Machine Learning ESG Analyst) use natural language processing to analyze large volumes of text for ESG insights, which can be a critical component of investment strategies. Such tools can rapidly filter and analyze massive datasets, producing insights that support more accurate risk assessments and investment decisions.

Emotional AI for assessing traders

An interesting 2012 study published in the Journal of Neuroscience, Psychology, and Economics shows how biometric tools can be used to assess traders. The study examined how market conditions, trader experience, and emotion regulation (measured through heart rate variability, HRV) are related. It found that when the market was more unpredictable, traders’ emotion regulation declined, but more experienced traders regulated their emotions better. This suggests that emotion regulation may be a key trading skill, and that part of what traders learn as they gain experience is how to regulate their emotions. The study also suggests that it may be worth exploring how emotion regulation affects other kinds of financial decisions.
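HRV is usually derived from the intervals between successive heartbeats (RR intervals). The description above does not say which HRV metric the study used, so as an assumption the Python sketch below computes RMSSD (the root mean square of successive differences), a common time-domain measure in which lower values are often associated with stress. The RR values are made up for illustration and are not study data.

```python
import math


def rmssd(rr_intervals_ms):
    """Root mean square of successive differences between RR intervals (in ms)."""
    if len(rr_intervals_ms) < 2:
        raise ValueError("need at least two RR intervals")
    diffs = [b - a for a, b in zip(rr_intervals_ms, rr_intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Illustrative RR intervals in milliseconds (made-up values, not study data).
calm_window = [800, 850, 790, 860, 810]
stressed_window = [700, 705, 698, 702, 699]
print(f"calm RMSSD:     {rmssd(calm_window):.1f} ms")
print(f"stressed RMSSD: {rmssd(stressed_window):.1f} ms")
```

RMSSD is a short-window measure, which is why it is often chosen for real-time monitoring; a study could equally rely on frequency-domain HRV metrics instead.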

In a more recent study, published in 2022, researchers explored how professional traders’ physiological and emotional responses (such as heart rate and stress levels) relate to their trading decisions and to market changes. They observed 55 professional traders at a major financial institution, tracking their physiological responses with wearable technology during trading hours for a week. The device measured heart rate, skin temperature, and other bodily signals, capturing how traders reacted physically in real time without disrupting their routine. The main goal was to see whether these physical reactions, called “psychophysiological (PP) activation,” are linked to the traders’ decisions or to market events. PP activation, which reflects emotional states such as excitement or stress, was analysed to find out when it changed in relation to trading activities or market changes.

The study was based on two ideas: first, that making financial decisions, especially under uncertainty, triggers these physical and emotional responses in traders; and second, that these responses are influenced by factors such as market movements, the type of financial products being traded, and the trader’s experience. The researchers tested four hypotheses derived from these ideas, examining how market changes, trading experience, the kind of financial products traded, and the specifics of trading activities affect traders’ PP activation.

The findings showed clear connections between the traders’ PP activation levels and market movements, with the type of market data they monitored impacting their physical responses. More experienced traders showed lower levels of PP activation, suggesting they might be better at managing their stress and emotions when trading. The type of financial products traded also affected their PP activation, with those involved in more volatile markets showing higher levels of stress and excitement. Additionally, the study found that traders were most physically and emotionally aroused shortly after executing a trade.
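One simple way to check whether arousal peaks shortly after trades is an event-locked (peri-event) average: align a physiological signal around each trade time and average across trades. The Python sketch below does this for a per-minute heart-rate series used as a stand-in for PP activation. It is not the study’s actual method, and the signal, trade times, and window sizes are hypothetical values constructed for illustration.

```python
from statistics import mean


def event_locked_average(signal, event_indices, before, after):
    """Average a sampled signal in a window around each event.

    Returns a dict mapping offset (in samples; negative = before the event)
    to the mean signal value at that offset, using only events whose full
    window fits inside the recording.
    """
    offsets = range(-before, after + 1)
    windows = [
        [signal[i + k] for k in offsets]
        for i in event_indices
        if i - before >= 0 and i + after < len(signal)
    ]
    if not windows:
        raise ValueError("no event has a complete window")
    return {k: mean(w[j] for w in windows) for j, k in enumerate(offsets)}


# Hypothetical per-minute heart rate (bpm) and minutes at which trades were executed.
heart_rate = [70, 71, 70, 72, 80, 78, 74, 71, 70, 73, 82, 79, 75, 72]
trade_minutes = [3, 9]
profile = event_locked_average(heart_rate, trade_minutes, before=2, after=3)
for offset, value in profile.items():
    print(f"{offset:+d} min: {value:.1f} bpm")
```

Here the averaged profile peaks one minute after execution only because the toy data was built that way; with real recordings, the location and size of the peak are what the analysis would reveal.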

To deepen their understanding, the researchers also interviewed some of the traders, gaining insights into how work demands, social interactions, and managerial duties might influence their stress and excitement levels. Busy days and social events, for example, tended to increase traders’ PP activation. This comprehensive approach provides a nuanced view of how traders’ physiological states are intertwined with their professional activities and the market environment.

In summary, while the direct application of emotional AI in investment is still developing, the integration of emotional intelligence and AI in financial decision-making processes is a promising area. It offers an opportunity to blend quantitative analysis with a deeper understanding of human emotions and behaviors, potentially leading to more informed and effective investment strategies.

The Future of Emotional AI

The potential of Emotional AI is vast and still largely untapped. With continuous advancements, we could see a future where AI not only understands our emotional states but also responds in a way that enhances our daily lives, from smarter personal assistants to empathetic healthcare robots.

In conclusion, Emotional AI APIs represent a significant leap in how we interact with technology, offering a more personalised and human-centric approach. As these technologies evolve, they promise to reshape various industries, making our interactions with machines more natural and emotionally aware.