I’ve authored over 100 AI prompts ready for deployment with my team. Our stringent standards demand consistent performance and reliability across various applications.

This task is far from simple. While a prompt may succeed in nine scenarios, it may falter in the tenth, necessitating the development of robust solutions through extensive research and trial and error.

To aid in prompt creation, we have curated a set of proven prompt engineering strategies. By explaining the rationale behind each strategy, we aim to help you tailor them to your own specific challenges.

Getting the Settings Right Before Writing Prompts

Working with large language models (LLMs) is akin to conducting an orchestra. Your prompts act as the sheet music guiding the performance, while settings like Temperature and Top P let you fine-tune the AI ensemble’s output while the model generates text.

These settings play a pivotal role in enhancing creativity or reining it in, shaping how the model selects and connects words.

Understanding how these settings influence AI output is paramount for content creators seeking to master AI-driven content creation.

Deconstructing the AI Process: A Closer Look

Let’s dissect the stages of a transformer, progressing from the initial prompt to the final output at the softmax layer, to gain a comprehensive understanding of the process.

Consider a GPT prompt: “The most important SEO factor is…”

- Step 1: Tokenization

- The model converts each word (or word piece) into a numerical token ID. For example, “The” might be token 1, “most” token 2098, “important” token 4322, “SEO” token 4, “factor” token 697, and “is” token 6.

- LLMs process these numerical representations rather than the words themselves.

- Step 2: Word Embeddings

- Each token is transformed into a word embedding: a multi-dimensional vector that captures the word’s meaning and its relationships to other words.

- These vectors, though seemingly complex, represent various features or attributes of a word.

- Step 3: Neural Processing

- Mathematical operations using word embeddings help the model comprehend word contexts and relationships.

- The neural network’s learned weights help it determine the contextual significance of words like “important” and “SEO” in the prompt.

- Step 4: Generation of Likely Words

- The model generates a list of candidate next words based on the input context.

- These words could include typical SEO terms like “content,” “backlinks,” or “user experience.”

- Step 5: Softmax Stage

- Probabilities of potential words are calculated using the softmax function.

- The model assigns probabilities to words like “content,” “backlinks,” or “user experience” based on training data.

- Step 6: Word Selection

- Based on these probabilities, the model selects the next word. Higher-probability words are more likely to be chosen, which keeps the output coherent and relevant.

By understanding this process, you gain insight into how adjustments to Temperature and Top P can influence AI-generated content.
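The six steps above can be sketched as a toy pipeline. This is only an illustration under stated assumptions: the vocabulary reuses the example token IDs from Step 1, the candidate words and their scores are invented, and the neural network of Steps 2 to 4 is replaced by a hard-coded list of scores. A real LLM uses subword tokenizers and billions of learned weights.

```python
import math
import random

# Hypothetical toy vocabulary using the example token IDs from Step 1.
VOCAB = {"The": 1, "most": 2098, "important": 4322, "SEO": 4, "factor": 697, "is": 6}

# Stand-in for Steps 2-4: invented raw scores (logits) for three candidate words.
CANDIDATES = ["content", "backlinks", "user experience"]
LOGITS = [2.0, 1.0, 0.5]


def tokenize(text):
    """Step 1: map each whitespace-separated word to its token ID."""
    return [VOCAB[word] for word in text.split()]


def softmax(logits):
    """Step 5: convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pick_next_word(rng):
    """Step 6: sample one candidate, weighted by its probability."""
    probs = softmax(LOGITS)
    return rng.choices(CANDIDATES, weights=probs, k=1)[0]


token_ids = tokenize("The most important SEO factor is")
probs = softmax(LOGITS)
next_word = pick_next_word(random.Random(0))  # seeded for repeatability
print(token_ids, probs, next_word)
```

The word with the highest score is the most likely pick, but not the only possible one. That leftover randomness is what Temperature and Top P control.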

Unpacking Temperature and Top P Adjustments

Temperature and Top P are adjustable sampling settings that you set alongside your prompt. They affect the diversity and focus of the generated content.

Impact of Temperature Adjustment

- High Temperature (e.g., 0.9):

- Leads to a broader range of possible words with more even distribution of probabilities.

- Encourages creative and imaginative outputs but may risk veering off course.

- Low Temperature (e.g., 0.3):

- Favors high-probability words, resulting in more traditional and focused outputs.
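A minimal sketch of how temperature reshapes the probabilities, assuming the common formulation in which raw scores are divided by the temperature before softmax is applied. The scores are invented for illustration, and the function name is hypothetical.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Divide scores by the temperature, then apply softmax."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Invented scores for "content", "backlinks", "user experience".
logits = [2.0, 1.0, 0.5]

high = softmax_with_temperature(logits, 0.9)  # flatter distribution
low = softmax_with_temperature(logits, 0.3)   # sharper distribution
print("T=0.9:", high)
print("T=0.3:", low)
```

At the lower temperature, the top word takes a much larger share of the probability, so the less likely words are rarely sampled.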

Effects of Top P Adjustment

- High Top P (e.g., 0.9):

- Considers a wider pool of words: the most likely words are kept until their combined probability reaches 0.9, which preserves variety while still excluding the least likely options.

- Low Top P (e.g., 0.5):

- Focuses on top words until their combined probability reaches a set threshold, leading to more focused outputs.
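The Top P cutoff can be sketched as follows. This is a simplified illustration with invented probabilities and a hypothetical function name: words are ranked by probability, kept until their combined probability reaches the threshold, and the survivors are renormalized so they sum to 1.

```python
def top_p_filter(probs, top_p):
    """Keep the most likely words until their cumulative probability reaches top_p."""
    if not 0 < top_p <= 1:
        raise ValueError("top_p must be in (0, 1]")
    ranked = sorted(probs.items(), key=lambda item: item[1], reverse=True)
    kept = []
    cumulative = 0.0
    for word, p in ranked:
        kept.append((word, p))
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(p for _, p in kept)
    # Renormalize so the remaining probabilities sum to 1.
    return {word: p / total for word, p in kept}


# Invented next-word probabilities for illustration.
probs = {"content": 0.5, "backlinks": 0.3, "user experience": 0.15, "keywords": 0.05}

narrow = top_p_filter(probs, 0.5)  # only the single most likely word survives
wide = top_p_filter(probs, 0.9)    # three words survive; the least likely is dropped
print(narrow)
print(wide)
```

The model then samples only from the words that survive the cutoff.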

Application in SEO Context

Adapting Temperature and Top P settings allows for tailored content generation, from exploring unique SEO aspects to focusing on established factors like “content” and “backlinks.”

Understanding and adjusting these settings enable SEO professionals to optimize AI content for various objectives, from technical discussions to creative brainstorming for SEO strategies.

Leveraging Prompt Engineering Techniques

Mastering prompt engineering is crucial for maximizing LLM potential. Adding detailed instructions to your prompts gives you more precise control over AI outputs.

By employing strategies like persona assignment, example incorporation, adherence to prompt patterns, problem complexity enhancement, and iterative prompt refinement, content creators can enhance the accuracy and relevance of AI-generated content.

These techniques empower content creators to guide AI effectively, ensuring that the generated content aligns with their intended goals and requirements.
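As a rough sketch of two of these strategies, the helper below combines persona assignment with worked examples in a single prompt string. The function name, the Q/A layout, and the sample text are all illustrative assumptions, not a required format.

```python
def build_prompt(persona, examples, task):
    """Assemble a prompt from a persona line, worked examples, and the new task."""
    lines = [f"You are {persona}.", ""]
    for question, answer in examples:
        lines += [f"Q: {question}", f"A: {answer}", ""]
    # End with the real question and an open answer slot for the model to fill.
    lines += [f"Q: {task}", "A:"]
    return "\n".join(lines)


prompt = build_prompt(
    persona="an experienced SEO consultant",
    examples=[
        ("What does a title tag do?",
         "It tells search engines and users what a page is about."),
    ],
    task="Why do backlinks matter?",
)
print(prompt)
```

Keeping the persona, examples, and task as separate arguments makes it easy to vary one while holding the others fixed when you test a prompt.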

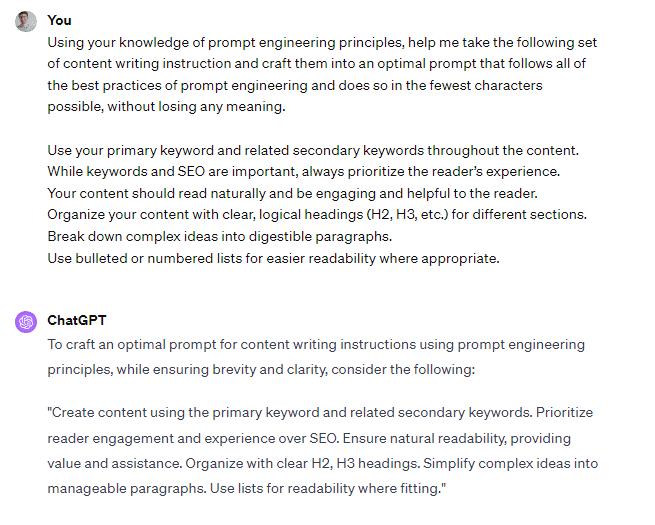

Enhancing Prompt Precision Through Iterative Refinement

Feeding an initial prompt back into the model and asking it to improve the wording, then repeating the process, helps you craft more refined and effective instructions.

This iterative process, akin to a feedback loop, fosters collaboration between content creators and AI systems, resulting in clearer, more concise prompts that align closely with the desired outcomes.
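The feedback loop might look like the sketch below. The `llm` argument is a placeholder for whatever function sends text to your model and returns its reply. `fake_llm` is a hypothetical stand-in that only tidies whitespace, so the example runs without any API access.

```python
def refine_prompt(prompt, llm, rounds=2):
    """Ask the model to rewrite the prompt, feeding each result back in."""
    history = [prompt]
    for _ in range(rounds):
        request = (
            "Rewrite the following prompt so it is clearer and more concise. "
            "Return only the rewritten prompt.\n\n" + history[-1]
        )
        history.append(llm(request).strip())
    return history


def fake_llm(request):
    """Stand-in for a real model call: collapses extra whitespace in the prompt."""
    original = request.split("\n\n", 1)[1]
    return " ".join(original.split())


versions = refine_prompt("Write   a  blog post   about SEO ", fake_llm, rounds=2)
for number, version in enumerate(versions):
    print(number, repr(version))
```

Keeping every version in `history` lets you compare rounds and stop once the rewrites no longer change meaningfully.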

Crafting Productive AI Prompts

AI-assisted content creation offers a range of prompting strategies, such as “chain of thought,” “cognitive verifier,” “template,” and “tree of thoughts,” to address complex challenges and improve question-answering accuracy.

By exploring these advanced techniques and refining prompt engineering practices, content creators can harness the full potential of AI for diverse applications.

Stay tuned for further insights on prompt separation, distinguishing system input from user input, and advanced prompt engineering methodologies in upcoming discussions.