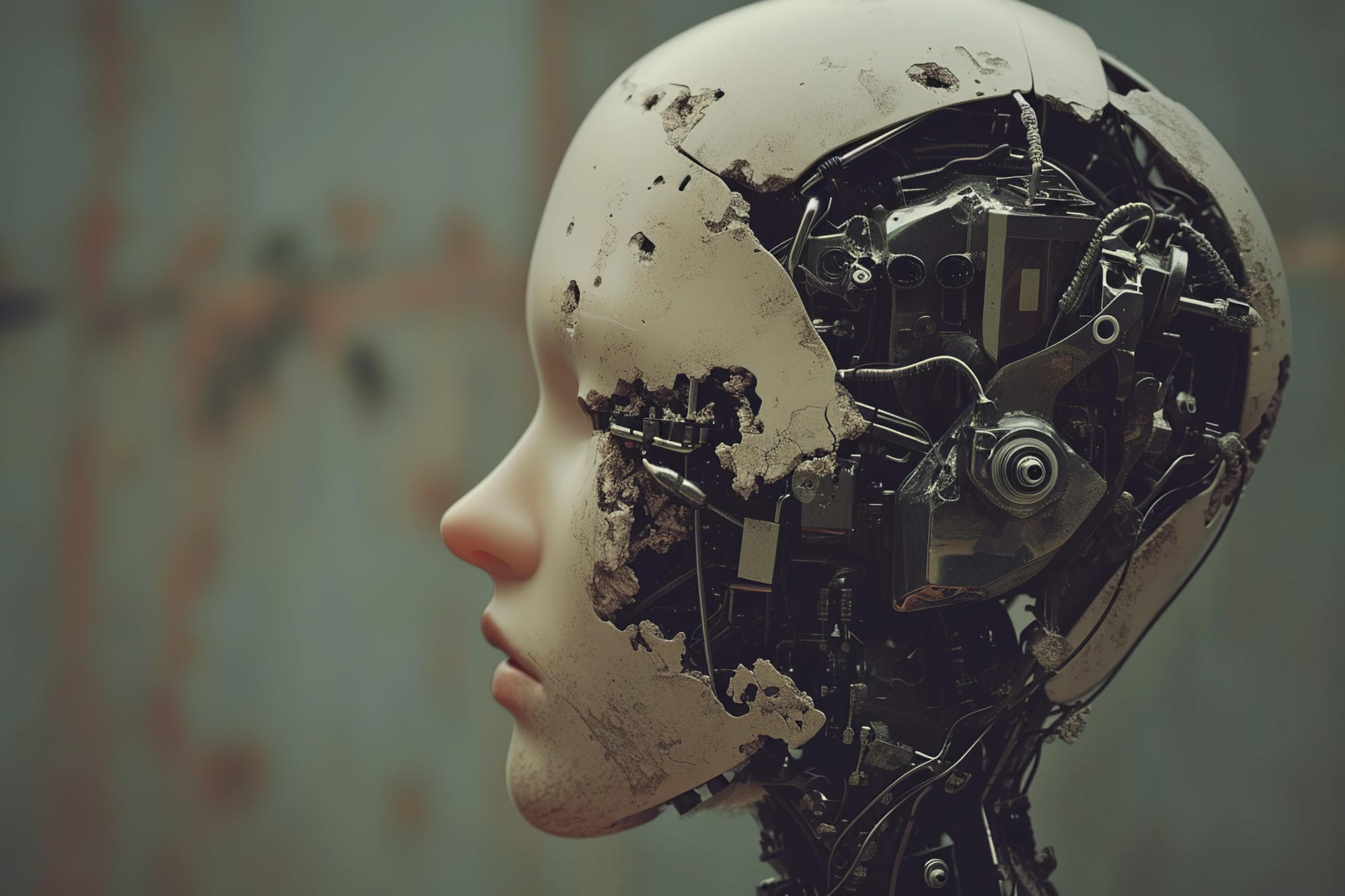

Researchers from the University of Copenhagen have shown that fully stable machine learning algorithms are unattainable for complex problems, underscoring the critical need for thorough testing and for awareness of AI's limitations. Credit: SciTechDaily.com

Researchers from the University of Copenhagen are the first in the world to prove mathematically that, beyond simple problems, it is impossible to create AI algorithms that are always stable.

Machine Learning Programs like ChatGPT

Machine learning, a subset of artificial intelligence (AI), focuses on developing algorithms and statistical models that enable computers to learn from data and to make predictions or decisions without being explicitly programmed. It involves recognizing patterns, categorizing data, and forecasting future events, and it encompasses supervised, unsupervised, and reinforcement learning.
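To make "learning from data" concrete, here is a minimal Python sketch of supervised learning. It is an illustration only, not taken from the study: the hypothetical function `fit_threshold` picks, from labeled examples, the cut-off that best separates the two classes, and `predict` applies that learned rule to new inputs.

```python
# Illustrative supervised-learning sketch (not from the Copenhagen study):
# learn a 1-D threshold rule "label 1 if x >= t else 0" from labeled examples.

def fit_threshold(xs, ys):
    """Return the threshold t with the fewest training errors.

    Candidate thresholds are the observed data points plus one value
    above the maximum (which labels everything 0)."""
    candidates = sorted(set(xs)) + [max(xs) + 1.0]
    best_t, best_err = None, None
    for t in candidates:
        # Count training examples the rule would label incorrectly.
        err = sum((1 if x >= t else 0) != y for x, y in zip(xs, ys))
        if best_err is None or err < best_err:
            best_t, best_err = t, err
    return best_t


def predict(t, x):
    """Apply the learned threshold rule to a new input."""
    return 1 if x >= t else 0


if __name__ == "__main__":
    xs = [0.5, 1.0, 1.5, 3.0, 3.5, 4.0]
    ys = [0, 0, 0, 1, 1, 1]
    t = fit_threshold(xs, ys)
    print(t)                                 # 3.0
    print(predict(t, 2.0), predict(t, 3.2))  # 0 1
```

The program never receives the rule explicitly; it infers the cut-off of 3.0 from the six labeled points and then generalizes it to inputs it has not seen.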

Machine learning solutions are becoming increasingly common, yet even the most advanced systems have limitations. Researchers at the University of Copenhagen have now proven mathematically that, apart from simple problems, it is impossible to create AI algorithms that are always stable. The result sheds light on fundamental differences between how machines operate and human intelligence, and it could pave the way for better protocols for testing algorithms.

The scientific article describing the result has been accepted for publication at one of the leading international conferences on theoretical computer science.

Machines may soon drive cars more safely than humans, translate languages, or interpret medical images more accurately than people do, but even the best algorithms are not flawless. A research team at the University of Copenhagen's Department of Computer Science is investigating these imperfections.

Consider an autonomous vehicle that reads road signs. A human driver simply ignores a sticker on a sign, but a machine may fail to recognize the sign because it no longer looks like the examples the machine was trained on.

“We aim for algorithm stability, ensuring consistent outcomes even with slight variations,” said Professor Amir Yehudayoff, who heads the research group. He points out that real life is full of noise that humans effortlessly ignore but that can confuse machines.
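The road-sign example can be mimicked with a toy model. The sketch below assumes a hypothetical linear "sign reader" with made-up weights (`WEIGHTS`, `BIAS`) over four pixel values; it is not how real sign-recognition systems, or the Copenhagen study, work. The hypothetical helper `is_stable_at` checks whether adding a small "sticker" perturbation changes the predicted label.

```python
# Hypothetical toy "sign reader": a fixed linear rule over four pixel values.
# Illustrates (in)stability only; real sign recognition is far more complex.

WEIGHTS = [1.0, -1.0, 0.5, 0.5]  # made-up weights, one per pixel
BIAS = -0.1                      # made-up bias term


def classify(pixels):
    """Label the input 'stop' if the linear score is non-negative."""
    score = sum(w * p for w, p in zip(WEIGHTS, pixels)) + BIAS
    return "stop" if score >= 0 else "other"


def is_stable_at(pixels, perturbation):
    """True if adding the perturbation leaves the predicted label unchanged."""
    perturbed = [p + d for p, d in zip(pixels, perturbation)]
    return classify(pixels) == classify(perturbed)


if __name__ == "__main__":
    sticker = [0.0, 0.2, 0.0, 0.0]  # hypothetical sticker altering one pixel
    clear_sign = [0.9, 0.1, 0.5, 0.5]
    borderline_sign = [0.55, 0.5, 0.1, 0.1]
    print(is_stable_at(clear_sign, sticker))       # True
    print(is_stable_at(borderline_sign, sticker))  # False
```

The same sticker leaves a clearly recognized sign unchanged but flips a borderline one from "stop" to "other", which is exactly the kind of inconsistency that stability is meant to rule out.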

Addressing Algorithmic Imperfections

The group's result is a mathematical proof rather than an empirical observation, and the paper presenting it has been accepted at the prestigious Foundations of Computer Science (FOCS) conference.

Although the team has not worked directly on autonomous vehicles, Amir Yehudayoff notes that guaranteeing algorithmic stability is a complex problem, which could have consequences for the development of automated vehicles:

“While occasional errors in rare circumstances may be acceptable, widespread inconsistencies pose significant concerns.”
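Yehudayoff's distinction between rare and widespread errors suggests measuring how often small perturbations change an output. The Monte Carlo sketch below assumes a toy one-dimensional classifier and uniformly distributed noise of size at most `epsilon`; both the model and the noise distribution are illustrative assumptions, and `instability_rate` is a hypothetical helper, not a method from the study.

```python
import random


def classify(x, threshold=0.0):
    """Toy 1-D classifier: label 1 if x is at or above the threshold."""
    return 1 if x >= threshold else 0


def instability_rate(x, epsilon, trials=10_000, seed=0):
    """Estimate the fraction of uniform random perturbations in
    [-epsilon, epsilon] that change the classifier's output at input x."""
    rng = random.Random(seed)  # fixed seed makes the estimate reproducible
    base = classify(x)
    flips = sum(
        classify(x + rng.uniform(-epsilon, epsilon)) != base
        for _ in range(trials)
    )
    return flips / trials


if __name__ == "__main__":
    print(instability_rate(1.0, 0.1))   # far from the boundary: 0.0
    print(instability_rate(0.05, 0.1))  # near the boundary: roughly 0.25
```

Far from the decision boundary no perturbation flips the label; close to it, about a quarter of them do (any noise below -0.05 out of a range of width 0.2). Whether such a rate is acceptable depends on how often real inputs land near the boundary.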

The main goal of the research is not to pinpoint flaws in existing systems. Instead, as Professor Yehudayoff explains, the group wants to establish a common language for discussing the imperfections of machine learning algorithms. This could lead to better testing methods and, ultimately, to more robust and reliable techniques.

Transitioning from Intuition to Formalism

One potential application of this research lies in enhancing privacy protection testing methodologies.

“Some businesses claim to have foolproof privacy protection solutions. Our approach can help verify whether such claims hold up and pinpoint vulnerabilities,” explains Amir Yehudayoff.

First and foremost, however, the research contributes to theory. Professor Yehudayoff elaborates: “We intuitively grasp that a robust algorithm should perform consistently even in the presence of minor input perturbations.” A road sign with a sticker on it is one such perturbation. Stating the problem in mathematical terms lets the researchers define precisely how much noise an algorithm must withstand, and how close its output must stay to the original output, for the algorithm to count as stable.
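One common way to write down this kind of requirement is sketched below as an illustration; it is not necessarily the definition used in the paper. It assumes a distance between inputs (d_in) and a distance between outputs (d_out). An algorithm A is then stable at input x, with noise tolerance ε and output tolerance δ, if every input within ε of x yields an output within δ of A(x):

```latex
\forall x' :\quad d_{\text{in}}(x, x') \le \varepsilon
\;\Longrightarrow\;
d_{\text{out}}\big(A(x),\, A(x')\big) \le \delta
```

Here ε answers "how much noise must the algorithm withstand?" and δ answers "how close to the original should the output be?". A sign reader would be stable in this sense if no sticker small enough to lie within ε of the clean sign could change its reading by more than δ.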

Embracing Cognitive Boundaries

The research has been received with keen interest in the theoretical computer science community, but the tech industry has yet to take notice.

“Some theoretical developments will remain unnoticed forever,” Amir Yehudayoff remarks with a smile, pointing to the usual delay between theoretical breakthroughs and their adoption by industry.

Nonetheless, he expects things to go differently in this case. Machine learning is advancing rapidly, and it is important to remember that even solutions that are highly successful in the real world have inherent limitations. Machines may sometimes appear to think, but they do not possess human intelligence, and that is important to keep in mind.

The study “Replicability and Stability in Learning” by Zachary Chase, Shay Moran, and Amir Yehudayoff was presented at the Foundations of Computer Science (FOCS) conference in 2023.