Meta CEO Mark Zuckerberg recently unveiled what some families consider the social media company’s most unsettling design yet: artificially intelligent bots inspired by real-life celebrities. The announcement came just weeks before 42 states joined forces to sue Meta, alleging that the company deliberately creates addictive products targeting children.

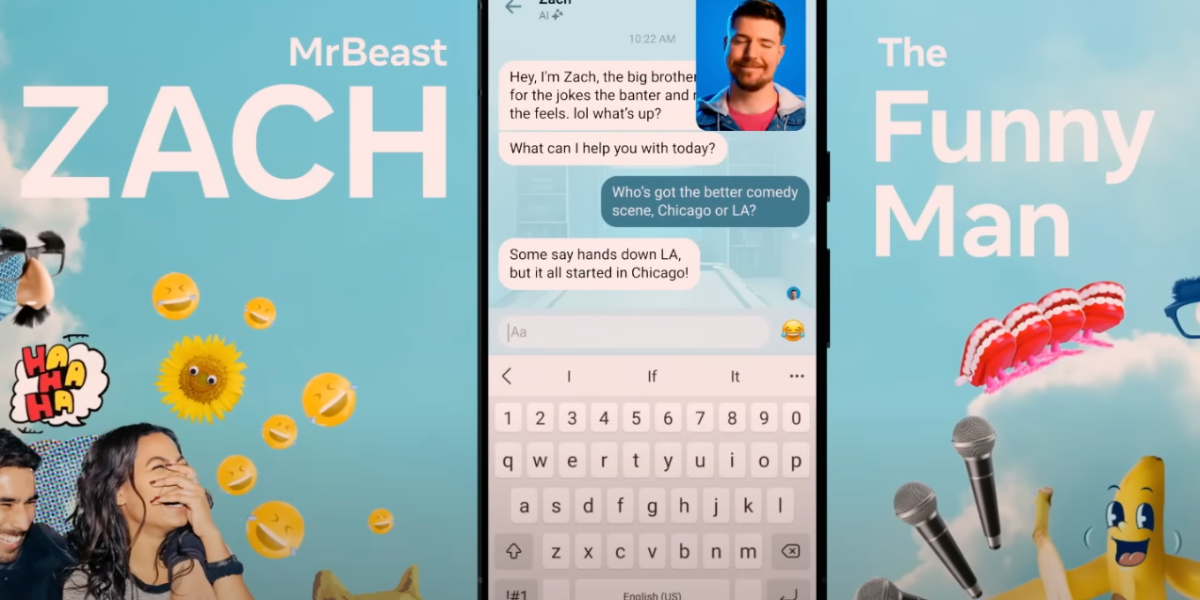

These bots, developed in partnership with notable personalities such as Charli D’Amelio, Tom Brady, and Kendall Jenner, use AI to bring animated versions of celebrities to life online. Users of Meta’s platforms, including WhatsApp, Instagram, and Messenger, can engage with the bots on a personal level, trading jokes and confiding in them.

Meta describes these bots as AIs possessing “personality, opinions, and interests, making interactions more engaging.” They showcase the company’s technological advances following a $35 billion investment in research and development last year. However, some parents and child psychologists worry about the potential impact of these AI figures on children’s well-being.

Kara Kushnir, a licensed clinical social worker and child psychotherapist in New Jersey, believes these AI bots may make it even harder for children to control their social media use. She emphasizes the challenges parents will face in managing their children’s exposure to these AI entities, which adds another layer to the already complex problem of regulating screen time.

Whether Meta’s social media features are genuinely addictive, akin to tobacco, will be settled in court. Kevin McAlister, a director at Meta, argues that unlike tobacco, Meta’s apps enhance people’s lives. Nevertheless, the introduction of AI personas has heightened the concerns of critics who believe Meta’s products harm users’ mental health.

Meta says these AI bots closely resemble the celebrities they are based on, and that very resemblance fuels concerns about blurring the line between reality and fiction, especially for younger users. Elizabeth Adams, a parent and child physician who founded Ello, an AI reading coach startup, points out how difficult it can be to distinguish real content from AI-generated content, particularly for children.

Meta’s rollout of AI characters has drawn comparisons to other tech companies’ efforts in the same space. Snapchat’s My AI and Google’s Bard cater to younger audiences, offering personalized experiences with varying degrees of success and varying safety measures. TikTok is also exploring AI applications to boost user engagement.

Amid the legal battles and parental concerns, Meta’s AI characters have raised alarms reminiscent of past controversies, such as Big Tobacco’s Joe Camel advertising campaigns. Kushnir draws parallels between the potentially addictive nature of AI characters and the insidious marketing tactics of the past.

Parents and healthcare professionals are increasingly concerned about how AI characters could shape children’s perceptions and behaviors. The potential for AI to influence young minds, especially those of neurodivergent children, is a significant worry. Another is the risk that children will form deep emotional connections with AI entities at the expense of real human relationships.

As Meta continues to expand its lineup of AI characters, the company faces scrutiny over the ethical implications of these technologies. The financial incentives offered to celebrities for their AI personas and the ongoing development of new AI figures raise questions about the long-term impact on users, particularly children.

Meta’s foray into AI characters marks a significant development in the social media landscape, with implications for user engagement, mental health, and ethics. As the debate continues, the role of AI in shaping children’s online experiences remains a critical issue for stakeholders to address.