If you are looking for an alternative to Nvidia GPUs for AI inference in the generative AI era, Groq is a compelling option. The company designs the GroqChip, which it calls a Language Processing Unit (LPU), and is ramping up production to meet the growing demand for large language model inference.

Today, several compute engines are gaining traction as alternatives to Nvidia and AMD GPUs. These include the CS-2 wafer-scale processor from Cerebras Systems, the SN40L Reconfigurable Dataflow Unit from SambaNova Systems, and Intel’s Gaudi 2 and upcoming Gaudi 3. The shift is driven largely by shortages of high-bandwidth memory (HBM) and advanced packaging capacity, both of which constrain the supply of high-end GPUs.

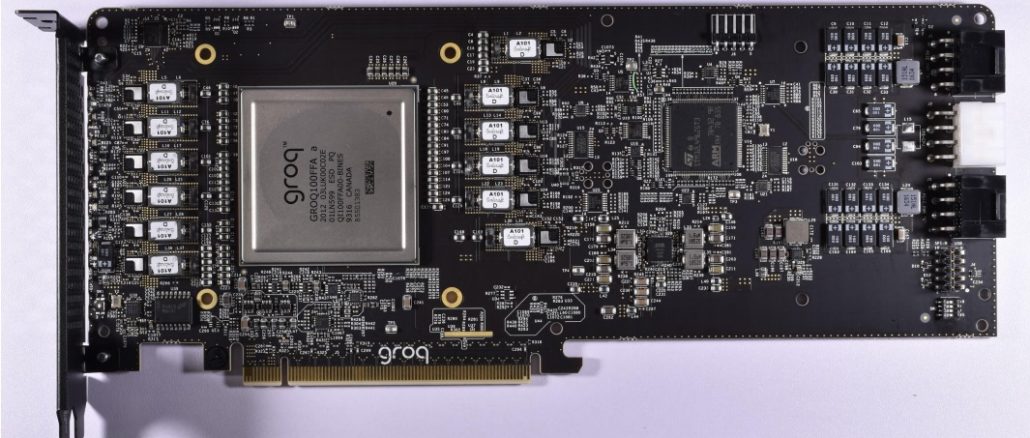

Not every capable architecture depends on leading-edge processes and packaging. The “OceanLight” supercomputer in China, built on domestically developed SW26010-Pro processors, handles significant HPC and AI workloads efficiently. Groq’s GroqChip LPUs are likewise unusual in that they rely on neither external HBM nor CoWoS packaging, which sets them apart from the competition.

Jonathan Ross, Groq’s co-founder and CEO, argues that Groq LPUs deliver higher throughput, lower latency, and lower cost than Nvidia GPUs for large language model (LLM) inference. Growing demand for faster model execution has put Groq in the spotlight, and the company has seen a sharp rise in customer interest and hardware allocations.

On scalability, Groq expects future GroqChip clusters to scale much further, helped by an improved interconnect fabric and a refined chip design. In benchmarks running Meta Platforms’ LLaMA 2 model, Groq reports significantly lower energy consumption and faster inference than Nvidia GPUs.

Looking ahead, Groq’s roadmap includes next-generation GroqChips built on Samsung’s 4 nanometer process, which promise better scalability and efficiency. Ross expects a substantial gain in power efficiency, which would allow more matrix compute and more on-chip memory within the same power envelope.

By focusing on power efficiency and performance, Groq aims to deliver cost-effective AI inference and to establish itself as a key player in the evolving market for AI hardware acceleration.