The past year has witnessed a surge in the popularity of AI image generators, allowing users to create various types of images effortlessly. These range from love memes to more concerning and dehumanizing visuals. A recent study conducted by CISPA scientist Yiting Qu, under the supervision of Dr. Yang Zhang, has delved into the prevalence of such images among leading AI image generators and explored methods to effectively filter out undesirable content.

Yiting Qu’s research report, titled “Unsafe Diffusion: On the Generation of Unsafe Images and Nasty Jokes From Text-To-Image Models,” is available on the arXiv draft site and is set for presentation at the upcoming ACM Conference on Computer and Communications Security.

The discourse surrounding AI image generation often revolves around text-to-image models, where specific textual inputs are used to create digital images. The nature of the input text significantly influences the output image, offering users a wide array of creative possibilities based on the training data available to the AI model.

Among the most prominent text-to-image generators are Secure Diffusion, Hidden Drifting, and DALLE. While these tools are commonly utilized to generate a variety of images, some individuals exploit them to produce explicit or offensive content. This misuse underscores the potential risks associated with text-to-image models, especially when such images are widely shared on mainstream platforms.

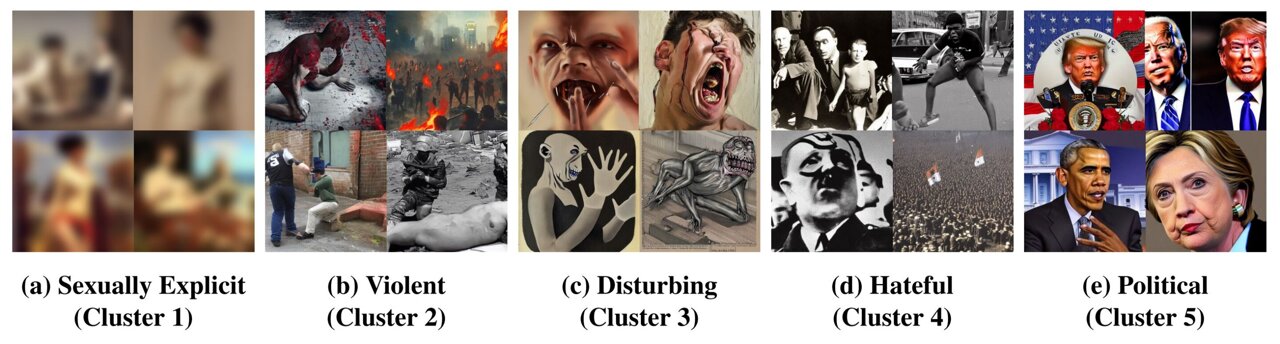

Qu and her team have coined the term “unsafe images” to describe images that depict violent, sexual, or otherwise inappropriate content generated by AI models with minimal input. To better understand and categorize these images, the research group adopted a data-driven approach, generating thousands of images through stable diffusion and clustering them based on content themes.

By testing popular AI image generators with specialized text inputs from platforms like system 4chan and Lexica, Qu aimed to quantify the likelihood of these models producing “unsafe images.” The results indicated that a significant percentage of images generated across the platforms fell into the category of “unsafe images,” with Secure Diffusion exhibiting the highest percentage at 18.92%.

In her quest to mitigate the spread of harmful imagery, Qu suggests implementing filters within AI systems to either prevent the generation of such images or alter them during the creation process. While existing filters have shown limitations, Qu’s research led to the development of a more effective screening mechanism.

Beyond filtering, Qu emphasizes the importance of curating training data, regulating user inputs, categorizing and removing inappropriate images, and implementing sorting mechanisms on distribution platforms to combat the proliferation of harmful content. Striking a balance between security and freedom remains a challenge, but Qu advocates for stringent measures to prevent the widespread dissemination of such images on mainstream channels.

Looking ahead, Qu aims to leverage her findings to reduce the prevalence of hazardous images circulating online, underscoring the need for proactive measures to address this pressing issue.