When Gary Schildhorn answered the phone on his commute to work in 2020, he was startled to hear the distressed voice of his son, Brett, who said he had been in a car accident and needed $9,000 for bail. Brett said he had crashed into a car driven by a pregnant woman and broken his nose, and he urged his father to contact the public defender assigned to his case.

Following Brett’s instructions, Mr. Schildhorn called the supposed public defender, who told him to send the money through a Bitcoin kiosk. That request made Mr. Schildhorn suspicious. When he later reached Brett directly, he learned the truth: his son was safe, and Mr. Schildhorn had narrowly avoided falling for what the Federal Trade Commission calls the “family emergency scam.”

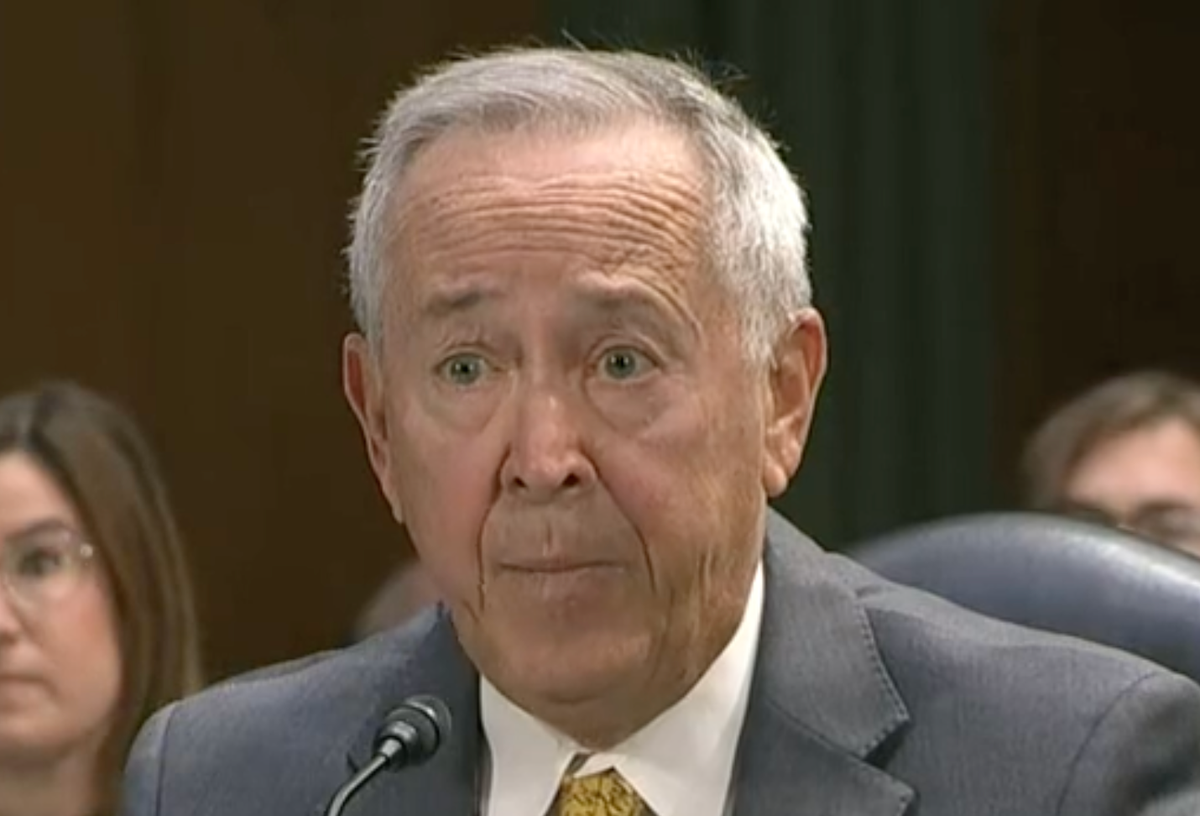

At a recent congressional hearing, Mr. Schildhorn, a corporate attorney based in Philadelphia, described the emotional turmoil he felt on realizing he had been the target of a scam. In schemes like this one, scammers use artificial intelligence to convincingly replicate a person’s voice and manipulate that person’s loved ones into sending money under false pretenses. When Mr. Schildhorn reported the call, local authorities referred him to the FBI, which acknowledged the scam but said it had limited jurisdiction unless the money had been sent abroad.

The Federal Trade Commission issued a warning in March about how scams are evolving with advanced technologies such as AI voice cloning. Voice-cloning tools can replicate a person’s voice with alarming accuracy from short audio samples, often taken from publicly available content such as social media posts. Because lifelike voice replicas are so easy to produce, they pose a significant threat, especially when scammers pair them with emotional manipulation during a phone call.

Lawmakers are grappling with how to regulate AI voice-cloning scams, a challenge underscored by Jennifer DeStefano’s testimony at a Senate hearing. She described a harrowing call in which a scammer threatened to harm her daughter, using AI-generated cries for help to frighten her. Without robust legal frameworks aimed at such scams, victims are left vulnerable: existing laws focus mainly on privacy rights rather than on protecting people from fraudulent voice cloning.

Despite the gaps in current legislation, efforts to combat voice-cloning fraud are underway. The FTC has issued a call to action seeking innovative solutions to reduce the risks posed by AI voice cloning, and it urges victims to report voice-cloning fraud on its website.