At Computex 2023, during the Supermicro keynote presentation, Nvidia’s CEO Jensen Huang showcased the Grace Hopper superchip CPU, designed for generative AI applications.

Nvidia recently introduced the H200, a powerful graphics processing unit tailored for training and deploying advanced artificial intelligence models, crucial for driving the current generative AI trend.

The H200 is an upgrade to the H100, the chip OpenAI used to train its cutting-edge language model, GPT-4. Demand for these chips is fierce among major corporations, startups, and government entities.

Estimates from Raymond James suggest that the H100 chips are priced between $25,000 and $40,000 each, with organizations needing thousands of them to build and train massive models effectively.
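To put that price range in perspective, the arithmetic can be sketched as below. This is only a rough illustration: the per-chip prices are the Raymond James estimates quoted above, while the cluster sizes and the function name `cluster_cost_range` are hypothetical and chosen purely for the example. Networking, servers, power, and other costs are ignored.

```python
# Per-chip price range in USD, from the Raymond James estimate cited above.
PRICE_LOW = 25_000
PRICE_HIGH = 40_000


def cluster_cost_range(num_gpus: int) -> tuple[int, int]:
    """Return the (low, high) GPU-only cost in USD for num_gpus chips."""
    if num_gpus < 0:
        raise ValueError("num_gpus must be non-negative")
    return num_gpus * PRICE_LOW, num_gpus * PRICE_HIGH


# Hypothetical cluster sizes, for illustration only.
for n in (1_000, 5_000, 10_000):
    low, high = cluster_cost_range(n)
    print(f"{n:>6,} GPUs: ${low / 1e6:,.0f}M to ${high / 1e6:,.0f}M")
```

Even at the low end of the estimate, a cluster of a few thousand chips implies a GPU bill in the tens of millions of dollars.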

Driven by the excitement surrounding its AI GPUs, Nvidia’s stock has surged by over 230% in 2023. The company anticipates approximately $16 billion in revenue for the fiscal third quarter, marking a significant increase from the previous year.

A notable feature of the H200 is its 141GB of next-generation HBM3e memory, which helps the chip perform “inference”: using a trained model to generate text, images, or predictions. Nvidia claims that the H200 generates output almost twice as fast as its predecessor, the H100, based on tests using Meta’s Llama 2 LLM.

Scheduled for release in the second quarter of 2024, the H200 will compete with AMD’s MI300X GPU. Like the H200, AMD’s chip offers more memory than its predecessors, which helps fit large models on the hardware for inference.
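Why memory capacity matters for inference can be illustrated with a back-of-the-envelope estimate. The sketch below assumes 16-bit weights (2 bytes per parameter), counts only the weights themselves, and ignores the extra memory a real deployment needs for activations and caches. The 80GB figure for the H100 and the Llama 2 parameter counts are assumptions brought in for the comparison, and the function names are hypothetical.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Approximate memory for model weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def weights_fit(num_params: float, capacity_gb: float,
                bytes_per_param: int = 2) -> bool:
    """True if the weights alone fit within capacity_gb on a single GPU."""
    return weight_memory_gb(num_params, bytes_per_param) <= capacity_gb


# Assumed GPU memory capacities in GB (the H100 figure is not from the article).
gpus = {"H100": 80, "H200": 141}

# Assumed Llama 2 model sizes, in parameters.
models = {"Llama 2 7B": 7e9, "Llama 2 13B": 13e9, "Llama 2 70B": 70e9}

for model_name, params in models.items():
    need = weight_memory_gb(params)
    for gpu_name, capacity in gpus.items():
        verdict = "fits" if weights_fit(params, capacity) else "does not fit"
        print(f"{model_name}: ~{need:.0f} GB of weights {verdict} "
              f"on one {gpu_name} ({capacity} GB)")
```

Under these assumptions the largest model's weights only just fit in 141GB, so in practice the headroom needed for everything else would still call for multiple GPUs or lower-precision weights.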

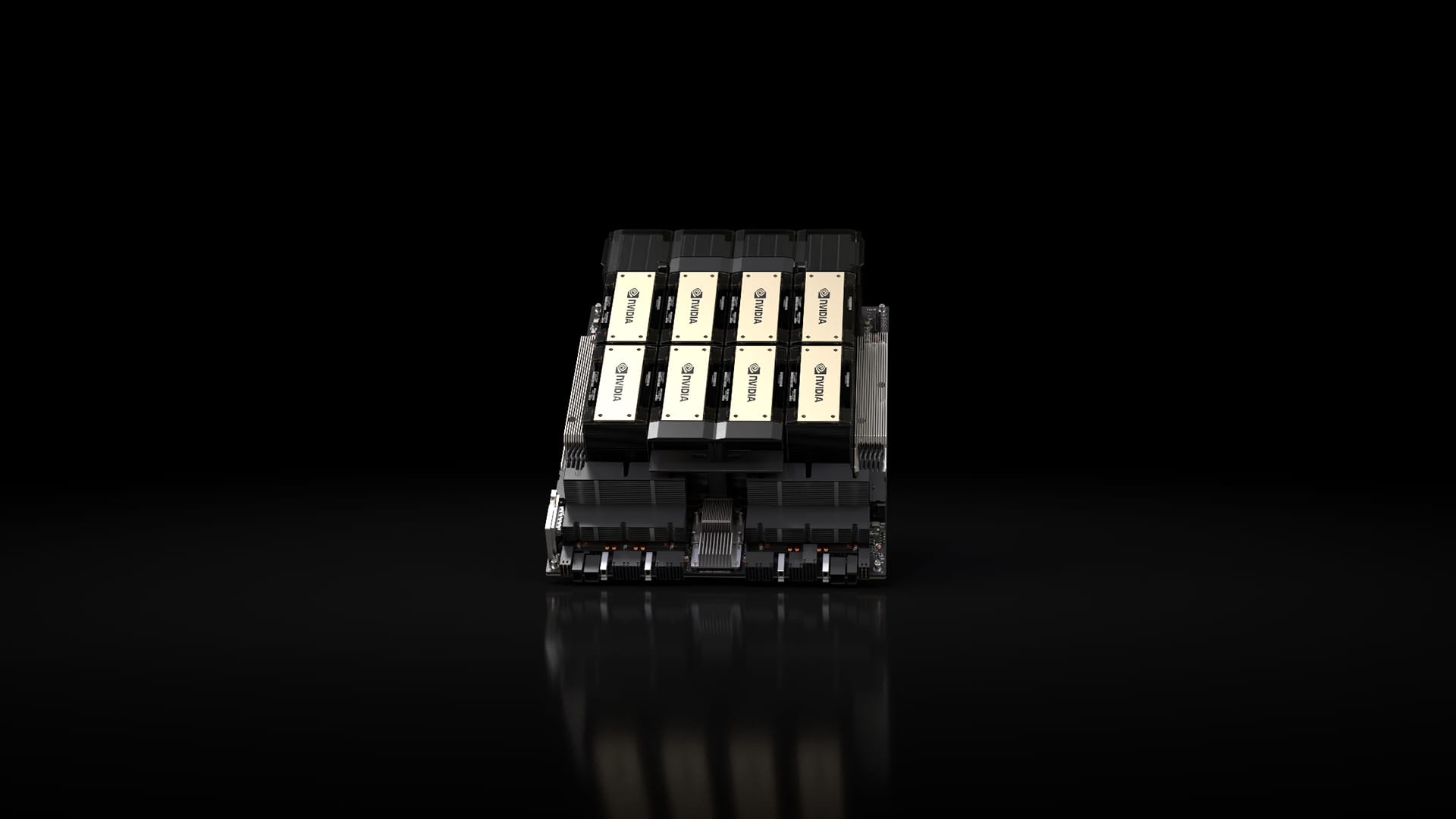

The Nvidia HGX platform incorporates Nvidia H200 chips in an eight-GPU configuration. Nvidia says the H200 is compatible with the H100, so companies already training on the previous model can move to the H200 without changing their server systems or software.

Nvidia plans to offer the H200 in four- or eight-GPU server configurations on its HGX complete systems, as well as in the GH200 chip, which pairs the H200 GPU with an Arm-based processor.

Despite its current prowess, the H200 may not remain Nvidia’s fastest AI chip for long. Semiconductors typically take a large step forward about every two years, when a move to a new architecture unlocks substantial efficiency gains. Nvidia’s shift from a biennial to an annual release cycle reflects the industry’s rapid evolution, with the upcoming B100 chip, based on the Blackwell architecture, slated for a 2024 debut.