Have you ever noticed that ChatGPT does not remember your earlier messages when you open a new conversation? Or that your self-driving car repeats the same mistake every time it drives the same route?

This happens because today's AI systems do not keep learning and adapting once they are deployed. To update one, engineers must gather fresh data, retrain the model, and carefully evaluate its performance before putting it back into service. Retraining is a manual process that requires human oversight.

Modern artificial neural networks suffer from “catastrophic forgetting”: when they are trained on new information, they tend to overwrite what they learned before. They are also weak in common sense and motor skills, which further limits what they can do.
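
To make catastrophic forgetting concrete, here is a minimal toy sketch in plain Python. It is not how any production system is trained. The two "tasks", the tiny linear model, and every name in it (`train`, `mse`, `task_a`, `task_b`) are assumptions chosen for illustration. The model learns task A, is then trained only on task B, and its error on task A is measured again.

```python
def train(w, b, data, lr=0.05, epochs=200):
    """Per-sample gradient descent on squared error for y ~ w*x + b."""
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return w, b


def mse(w, b, data):
    """Mean squared error of the model on a dataset."""
    return sum(((w * x + b) - y) ** 2 for x, y in data) / len(data)


xs = [-1.0, -0.5, 0.0, 0.5, 1.0]
task_a = [(x, 2.0 * x + 1.0) for x in xs]   # hypothetical task A: y = 2x + 1
task_b = [(x, -1.0 * x - 1.0) for x in xs]  # hypothetical task B: y = -x - 1

# Learn task A from scratch, then measure how well it was learned.
w, b = train(0.0, 0.0, task_a)
loss_a_before = mse(w, b, task_a)

# Continue training on task B only; no task A data is shown again.
w, b = train(w, b, task_b)
loss_a_after = mse(w, b, task_a)
loss_b_after = mse(w, b, task_b)

print("task A error after learning A:", loss_a_before)
print("task A error after learning B:", loss_a_after)
print("task B error after learning B:", loss_b_after)
```

Because both tasks share the same two parameters, fitting task B pulls them away from the values that solved task A, so the task A error climbs from near zero to a large value. Real networks have far more parameters, but the same overwriting happens when new training data is not mixed with the old.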

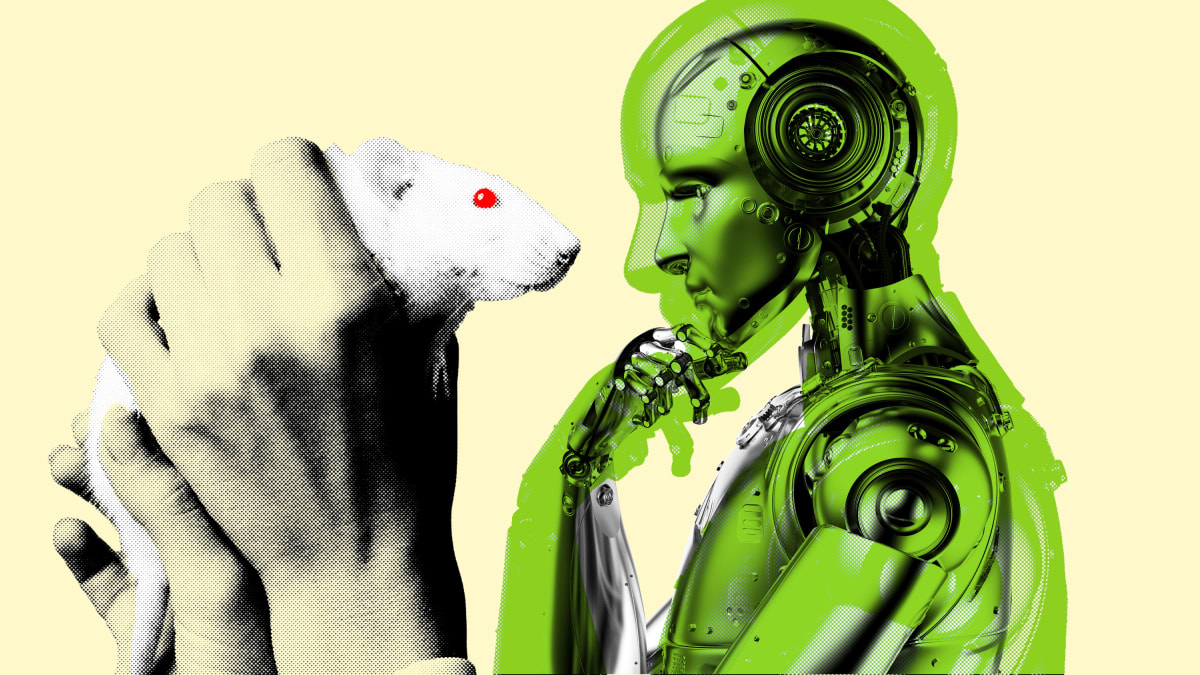

Significant resources are being invested in these problems, yet progress has been slow. Meanwhile, the solutions were already present in the brains of early mammals more than 100 million years ago. Even a small rat clears these hurdles with ease, showing better common sense and motor skills than today's machines.

So, how do animals do it? The brain has two modes of thinking: one fast and automatic, the other slow and deliberate. Daniel Kahneman’s well-known book “Thinking, Fast and Slow” calls them “system 1” and “system 2”; they are also described as habitual versus goal-directed decision-making. The same dichotomy runs through ongoing debates in AI research, philosophy, and neuroscience.

The slower, deliberative mode is notably absent from current AI systems. It is this internal mode that lets us plan by imagining outcomes and integrate new knowledge with what we already know.

Some AI systems do look ahead: Google Maps plots routes, and AlphaZero simulates game moves. But they struggle to adapt outside controlled environments. Rats, on the other hand, handle complex real-world challenges with ease, coping with noisy information, vast decision spaces, and changing internal needs.

Recent large language models have astonished researchers by achieving feats previously thought to require more complex systems. Despite lacking a detailed understanding of the external world, models like GPT-4 show remarkable common-sense reasoning and logical coherence. They epitomize “fast” thinking.

The ultimate goal of AI is to surpass the limitations of the human mind, not merely to replicate it. However, scaling up neural networks without considering how human intelligence gradually evolved risks overlooking aspects of cognition that AI systems need in order to truly understand the world.

Human intelligence evolved over time, adding cognitive faculties layer by layer, a process that present AI systems do not fully replicate. By building these gradual advances into AI development, we can make AI systems safer, more resilient, and better able to deliver on the promises of AI. This thoughtful approach may tip the scales towards a successful outcome in the intriguing, yet potentially daunting, realm of AI that we are just beginning to explore.