On a recent episode of The Excerpt podcast: The introduction of powerful artificial intelligence (AI) into society without adequate regulation or safeguards has sparked widespread concern. While AI shows immense potential in sectors such as healthcare and law enforcement, it also presents significant risks. How concerned should we be? To explore this topic, we are joined by Vince Conitzer, Head of Technical AI Engagement at the Institute for Ethics in AI at the University of Oxford.

The transcript of the podcast follows. Please note that this transcript was automatically generated and then edited for clarity. There may be discrepancies between the audio and the text.

Podcasts: Explore a variety of USA TODAY podcasts, including true crime and in-depth interviews, right here.

Dana Taylor:

Greetings and welcome to The Excerpt. I’m Dana Taylor. Today is Sunday, January 28th, 2024.

The introduction of powerful artificial intelligence into society, with little or no regulation or oversight, has unsettled many people. Its emerging use in fields ranging from healthcare to law enforcement and warfare has already highlighted the significant dangers it poses. How worried should we be? I’m now joined by an expert who can shed light on the potential risks of AI and offer insights into how to mitigate them. Vincent Conitzer is a professor of computer science at Carnegie Mellon University and Professor of Computer Science and Philosophy at the University of Oxford, where he also serves as Head of Technical AI Engagement at the Institute for Ethics in AI. He is also a co-author of the upcoming book “Moral AI and How We Get There,” set to be released on February 8th. Vincent, thank you for being here.

Vincent Conitzer:

I appreciate the invitation.

Dana Taylor:

Let’s start with Oxford’s distinctive approach to ethics in AI and why it matters. How can philosophy contribute to our understanding of AI regulation?

Vincent Conitzer:

AI now touches so many aspects of life that deploying it ethically requires a multidisciplinary approach. My own background is mainly in computer science, and traditional training in that field focuses primarily on the technical side: how to make systems work. But today we also need to think carefully about how we want to integrate AI into society, what limits we want to place on it, and what we actually want it to do. These questions go beyond the technical to encompass societal, legal, and medical dimensions. Philosophy is a natural starting point because of its interdisciplinary nature and because it offers a framework for ethical discussion. At the Institute for Ethics in AI, we advocate for collaboration among experts from diverse fields, such as law, the social sciences, and medicine, to address the multifaceted challenges that AI poses.

Dana Taylor:

While the potential of AI in domains like healthcare, law enforcement, and military applications is undeniable, there are also apprehensions surrounding its implementation in these sectors. Let’s focus on healthcare for a moment. Recent lawsuits have highlighted instances where AI tools allegedly denied life-saving care. What are the dangers associated with relying on AI in healthcare settings?

Vincent Conitzer:

AI in healthcare holds real promise but also calls for caution. Although the technology has shown remarkable potential, it must be evaluated and implemented with meticulous scrutiny. Strong results in controlled studies do not guarantee that a system will perform as well in the real world: differences in how data are collected, biases in the training data, and variations in patient demographics can all significantly affect how well an AI system works. A cautious, rigorous approach is therefore essential to ensure that AI-driven healthcare tools are reliable and serve all patients well.

Dana Taylor:

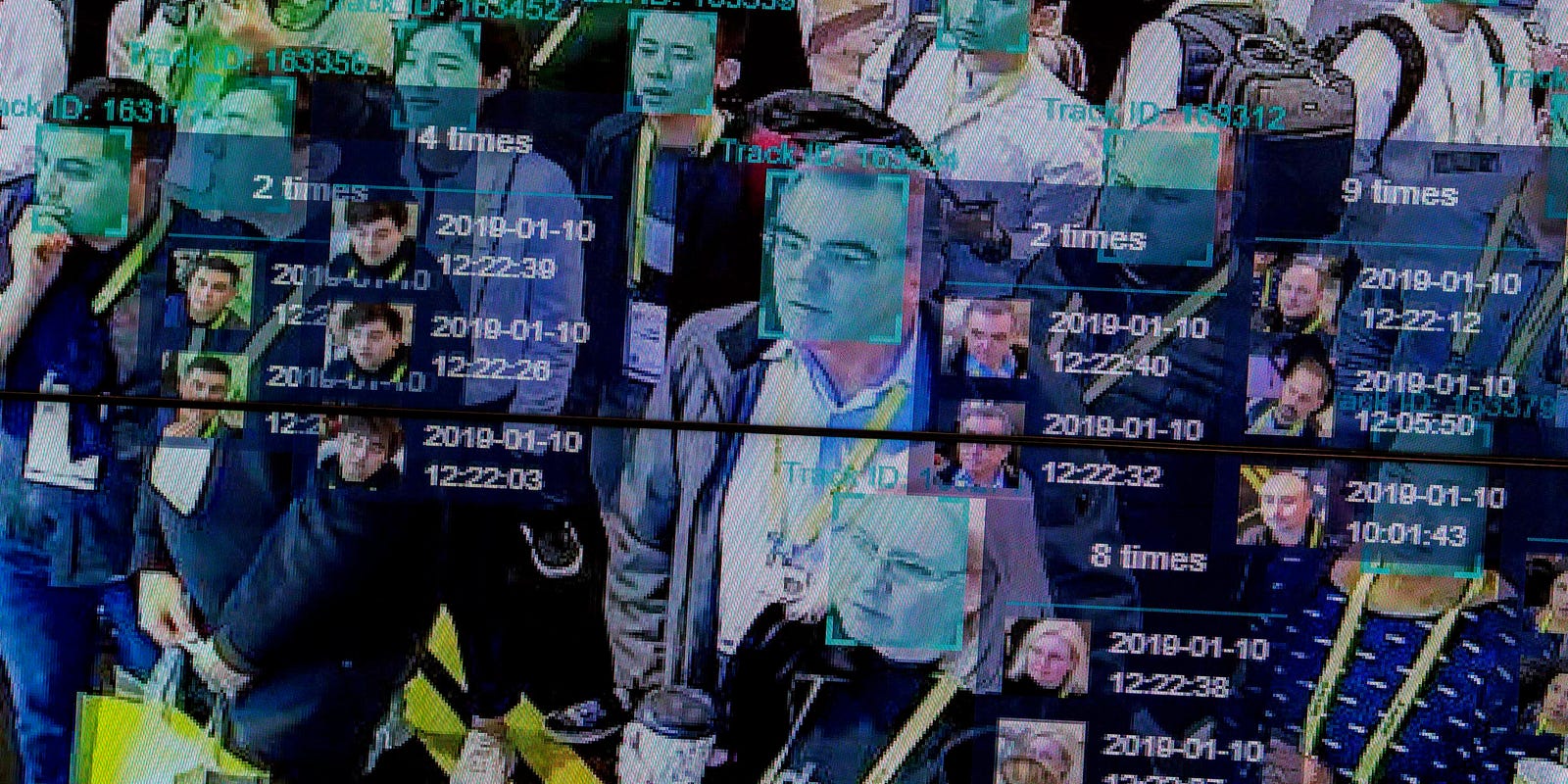

Shifting our focus to AI’s role in law enforcement, facial recognition technology has garnered significant attention. Despite its potential benefits in criminal identification, what ethical and legal challenges does it pose?

Vincent Conitzer:

The deployment of AI, particularly facial recognition technology, in law enforcement has sparked debates surrounding its efficacy and fairness. Studies have revealed inherent biases in facial recognition algorithms, disproportionately affecting individuals with darker skin tones. The ethical implications of such biases are particularly concerning in law enforcement applications, where accuracy and impartiality are paramount. Additionally, emerging practices like generating facial images from DNA samples raise further ethical dilemmas, as they introduce additional layers of uncertainty and potential errors into the identification process.

Dana Taylor:

The use of AI in armed conflict has also raised significant concerns. The development of autonomous weapons capable of making decisions on their own poses complex ethical challenges. What are the key considerations regarding AI’s role in warfare?

Vincent Conitzer:

The advent of autonomous weapons fueled by AI technology has sparked widespread apprehension regarding the ethical ramifications of delegating life-and-death decisions to machines. While the concept of human oversight in autonomous weapon systems offers a semblance of control, the practical implementation raises critical questions about the extent of human involvement and the ethical reasoning embedded within AI algorithms. Striking a balance between human oversight and AI autonomy is crucial to ensuring responsible decision-making in armed conflict scenarios.

Dana Taylor:

AI’s impact on electoral integrity has also come under scrutiny, with instances of AI-generated misinformation influencing public opinion. How can we safeguard elections against AI-driven manipulation?

Vincent Conitzer:

The proliferation of AI-generated misinformation poses a significant threat to electoral integrity worldwide. The emergence of deepfake technologies capable of mimicking political figures and disseminating false narratives necessitates enhanced vigilance and critical evaluation of information sources. As AI continues to advance, distinguishing between authentic and manipulated content becomes increasingly challenging, underscoring the importance of media literacy and skepticism in the face of AI-driven disinformation campaigns.

Dana Taylor:

Fraud carried out through AI-driven phishing attacks presents another pressing challenge. The sophistication and scale of AI-enabled phishing raise concerns about data security and privacy. How can individuals protect themselves against AI-facilitated fraud?

Vincent Conitzer:

AI-powered phishing attacks pose a formidable threat to data security, leveraging advanced algorithms to craft personalized and convincing scams. The scalability and sophistication of AI-driven phishing tactics necessitate heightened awareness and robust cybersecurity measures to safeguard sensitive information. Deepfake technologies further compound the risk of social engineering attacks, emphasizing the need for enhanced digital literacy and vigilance in detecting fraudulent schemes.

Dana Taylor:

While we have delved into the potential risks associated with AI, it is essential to acknowledge the transformative potential of AI in addressing societal challenges and enhancing quality of life. What are your insights on the positive impact of AI in various domains?

Vincent Conitzer:

Despite the risks, AI holds immense promise for transforming fields such as healthcare, environmental conservation, and scientific research. From improving medical diagnostics to advancing materials science, AI has an unparalleled capacity to drive innovation and expand knowledge. However, responsible deployment is paramount to harnessing these benefits while mitigating potential harms, which underscores the need for ethical considerations and regulatory frameworks to guide AI’s development.

Dana Taylor:

Regulation plays a pivotal role in mitigating the risks associated with AI deployment. However, the dynamic and multifaceted nature of AI applications necessitates adaptive and comprehensive regulatory frameworks. How can effective regulation help address the ethical challenges posed by AI?

Vincent Conitzer:

Navigating the regulatory landscape of AI demands a nuanced approach that balances sector-specific regulations with overarching ethical guidelines. Tailored regulations addressing distinct AI applications are essential to ensure compliance and accountability in diverse domains. Simultaneously, a holistic regulatory framework encompassing broader ethical considerations is crucial to address the cross-cutting implications of AI technology. While regulatory efforts show promise in mitigating AI-related risks, sustained attention and resources are essential to foster adaptive and effective governance mechanisms.

Dana Taylor:

Vincent, thank you for sharing your valuable insights on The Excerpt.

Vincent Conitzer:

It was a pleasure to engage in this discussion. Thank you for having me.

Dana Taylor:

Special thanks to our senior producer, Shannon Rae Green, for her contributions to this episode. Our executive producer is Laura Beatty. Share your feedback on this episode by reaching out to us at [email protected]. Thank you for tuning in. I’m Dana Taylor, and remember to join us tomorrow morning for another episode of The Excerpt with Taylor Wilson.