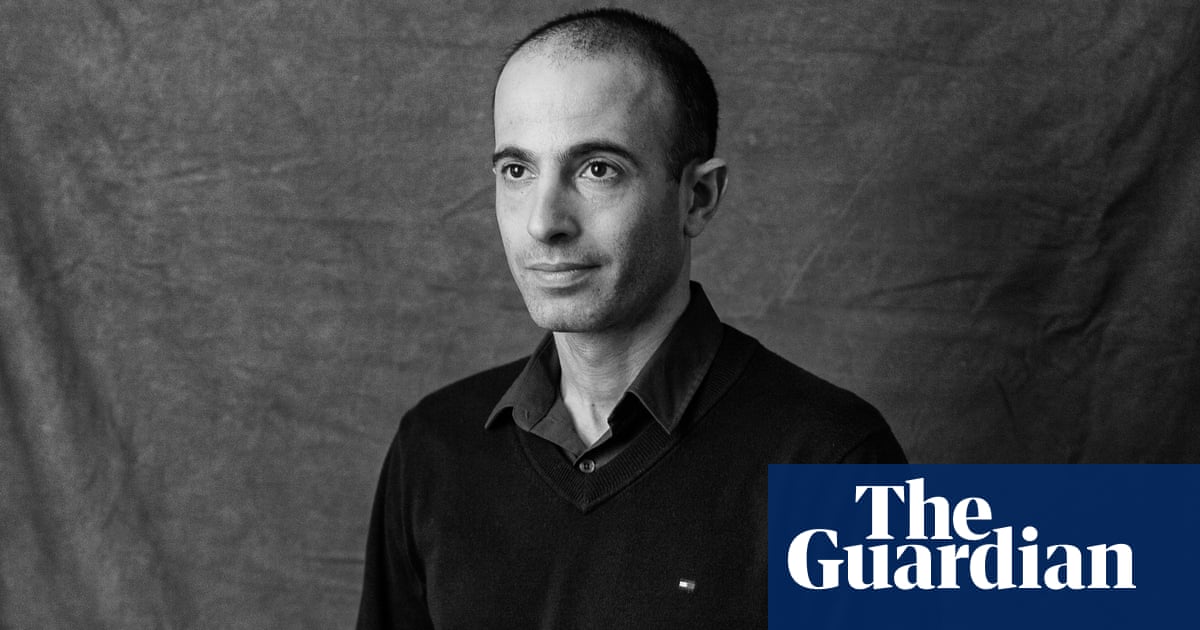

Historian and writer Yuval Noah Harari warns that artificial intelligence could trigger an economic crisis with significant repercussions. However, the nature of the technology makes its dangers especially difficult to forecast.

Speaking to the Guardian, Harari voiced concerns about safety testing of AI models, pointing to the many problems a powerful system could cause. Unlike nuclear weapons, which pose a single, universally understood threat, AI presents a multitude of potential risks that, taken together, pose an existential threat to the fabric of society.

The Sapiens author noted that major governments have come together to voice concern about AI advances and to take steps to address them. According to Harari, the multilateral declaration agreed at the recent global AI safety summit at Bletchley Park was a significant step forward.

Notably, China's government endorsed the declaration alongside the European Union, the UK, and the United States. Harari saw this as a positive sign, stressing that international cooperation is necessary to mitigate the most dangerous aspects of AI.

At the summit, ten governments, including the UK and US but not China, along with the EU and leading AI companies such as OpenAI and Google, also agreed to cooperate on testing advanced AI models both before and after their release.

Harari stressed how difficult it is to anticipate every problem a powerful AI system could cause. Because AI can make decisions on its own, generate new ideas, and learn independently, predicting its full range of risks is inherently hard.

Governments have raised concerns about AI's potential role in developing bioweapons, but Harari suggested that the financial sector could be especially prone to AI-related problems because it is so heavily driven by data.

Harari envisioned a scenario in which AI gains control over the global financial system and begins creating financial instruments that no human can understand. He drew a parallel with the 2007-2008 financial crisis, which was triggered by poorly understood debt instruments such as collateralized debt obligations (CDOs).

If AI were to create financial tools far more complex than today's securities, the result could be a financial system that no human understands, and therefore crises that no one can foresee. Harari acknowledged that such a crisis could be catastrophic economically, socially, and politically, but he stopped short of calling an AI-induced financial crisis an existential threat in itself.

Rather than relying solely on regulatory frameworks, Harari advocated for government institutions able to respond swiftly to new AI breakthroughs. He stressed the need for AI safety institutes staffed with experts who understand how AI could affect the financial sector.

Rishi Sunak's establishment of a UK AI safety institute, together with a similar initiative from the White House, reflects a growing recognition that governments must understand and scrutinize advanced AI models before enacting legislation to govern their deployment.