Experts have long predicted a future in which artificial intelligence blurs the line between reality and fiction. That future is now arriving. A recent incident involving a recording of what seems to be a high school principal making racist comments underscores the risks posed by easily accessible generative AI tools and the difficulty of detecting their use.

An unsettling audio recording surfaced on social media last week, allegedly featuring the voice of a school principal in Baltimore County, Maryland. The clip quickly went viral before drawing attention from local and national media outlets. However, the authenticity of the recording remains unverified, and a union representative has reportedly attributed its creation to AI. Baltimore County Public Schools is reportedly investigating the incident.

Disputes over the authenticity of damaging recordings are not new, nor are spoofed recordings that gain traction. However, such cases have typically involved prominent public figures like Russian President Vladimir Putin or U.S. President Joe Biden, rather than high school principals. Within the past year, for example, robocalls in New Hampshire imitating Biden’s voice attempted to dissuade voters from participating in the state’s primary election. The proliferation of generative AI tools has enabled far more people to create convincing fakes. The expected result is a surge in online deception and the unsettling realization that any piece of media could be counterfeit, a prospect for which society is ill-prepared.

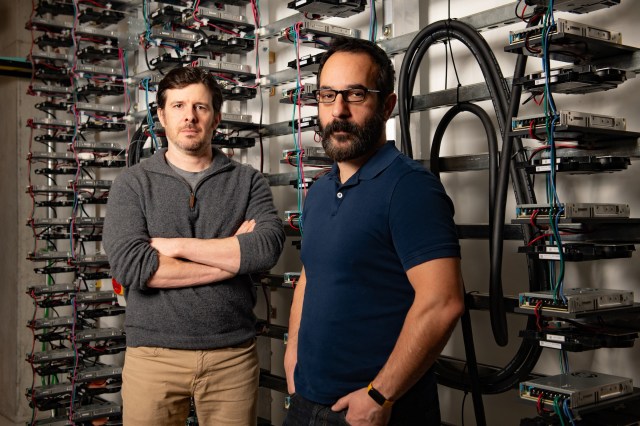

Hany Farid, a computer science professor at the University of California, Berkeley, who specializes in digital forensics and internet research, discussed the incident in an interview with Scientific American. Farid has developed tools for algorithmic detection of manipulated videos, audio, and images.

Opinions on the Baltimore County Public Schools Incident

Farid said he found the incident at Baltimore County Public Schools intriguing. Using specialized tools that are not readily available to the public, he analyzed the audio and concluded that it was likely AI-generated. In the audio’s spectrogram, he detected distinct signs of digital manipulation at several points, suggesting that the recording may have been assembled from multiple clips. While the evidence points toward the audio being inauthentic, Farid emphasized the need for further investigation before reaching a definitive conclusion.

Approaches to Authenticating Audio Recordings

Farid advocated a multidisciplinary investigative approach in which diverse experts analyze the content’s origin, timeline, and potential editing. Examining overt signs of editing, such as abrupt cuts or synthesized sentences, can provide critical insights into the audio’s credibility. Detecting AI-generated audio demands a high level of expertise because generative AI tools keep growing more sophisticated, which makes fakes harder to distinguish from genuine recordings.

Challenges in Detecting Deepfake Audio

Farid highlighted the accessibility and simplicity of producing compelling audio deepfakes, emphasizing the minimal expertise required to manipulate voices using text-to-speech or speech-to-speech techniques. Identifying AI-generated audio remains a formidable task, with only a handful of specialized labs equipped to reliably detect such forgeries.

Legal Implications and Accountability

The evolving landscape of deepfake technology raises concerns about legal frameworks for verifying audio and video evidence in court proceedings. Farid stressed the need for regulatory measures to hold AI companies accountable for the potential harm caused by their services. Raising the possibility of legal action against companies producing deepfakes, he underscored the importance of establishing liability frameworks to safeguard consumers and mitigate the misuse of generative AI technologies.