The images, likely generated by artificial intelligence and circulated among thousands of social media users, faced condemnation from the celebrity’s fans and political figures.

This week, fabricated, sexually explicit images of Taylor Swift, likely created with artificial intelligence, spread rapidly across social media platforms. The images upset viewers and renewed calls for lawmakers to protect women and to crack down on the technology and platforms that spread such content.

One image posted by a user on X was viewed 47 million times before the account was suspended on Thursday. Although X suspended several accounts that had shared the fake images of Ms. Swift, the images continued to circulate on other social media platforms.

Ms. Swift's fans flooded X in protest, while the company said it was actively removing the nonconsensual images. To bury the explicit content and make it harder to find, fans posted related keywords alongside the phrase "Protect Taylor Swift."

Ben Colman, co-founder and CEO of Reality Defender, a cybersecurity firm that specializes in detecting AI-generated content, said he was 90 percent confident that the images were created with a diffusion model, an AI technique that is accessible through more than 100,000 apps and publicly available models.

As the AI industry has grown, companies have rushed to release tools that let users create photos, videos, text, and audio from simple prompts. These tools are popular, but they have also made it easier and cheaper to produce deepfakes, media that depict people saying or doing things they never actually said or did.

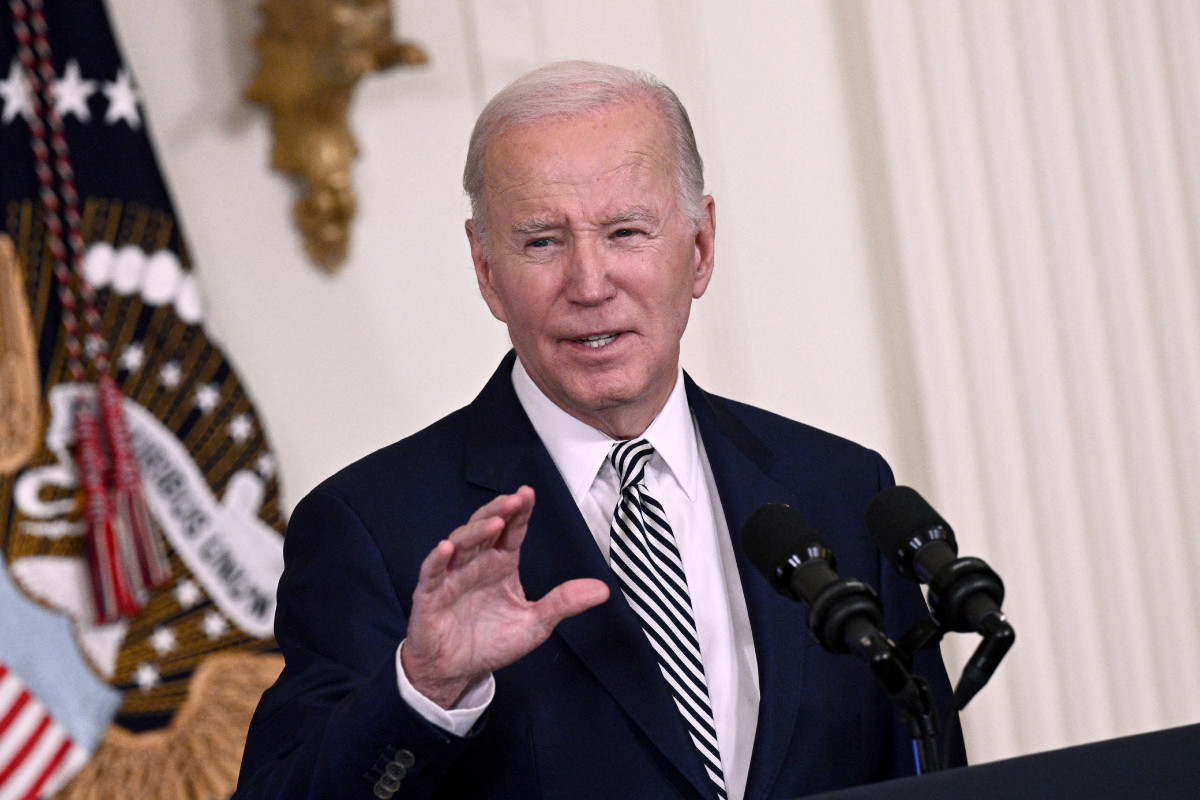

Researchers increasingly fear that deepfakes, which let ordinary internet users create non-consensual nude images or embarrassing portrayals of public figures, have become a powerful force for disinformation. During the New Hampshire primary, AI-generated robocalls imitated President Biden's voice, and Ms. Swift was recently featured in fake advertisements endorsing a product.

Oren Etzioni, a computer science professor at the University of Washington who works on deepfake detection, pointed to the dark, non-consensual side of AI-generated content, particularly pornography. He warned that the proliferation of AI-generated explicit images is a troubling trend.

In a statement, X said it has a strict policy against such content and was actively removing all identified images and taking appropriate action against the accounts responsible for posting them. The company added that it was closely monitoring the situation so it could promptly address any further violations.

Since Elon Musk acquired the service in 2022, X has seen a rise in harmful content, including harassment, disinformation, and hate speech. The platform has loosened its content rules, many staff members who worked on content moderation have left or been let go, and previously banned accounts have been reinstated.

Despite restrictions imposed by AI tool providers to prevent the creation of explicit content, users continue to circumvent these measures. Mr. Etzioni likened this challenge to an ongoing arms race, where for every barrier erected, individuals find ways to bypass it.

The images were first posted on a Telegram account dedicated to generating such content, according to 404 Media, a tech news site. The deepfakes then drew widespread attention after being shared on X and other social media platforms.

Some states have restricted pornographic and political deepfakes, but there is no federal law governing such content. Mr. Colman noted that relying on user reports to combat deepfakes has proven ineffective, because millions of people may already have seen the content before it is flagged.

Tree Paine, Ms. Swift's publicist, did not immediately respond to a request for comment on Thursday.

The spread of the images prompted renewed calls for legislators to act. Representative Joe Morelle, a New York Democrat who has introduced legislation targeting the sharing of such content, called the situation "appalling," stressed how common such incidents are, and urged decisive action.

Senator Mark Warner, a Virginia Democrat and chair of the Senate Intelligence Committee, voiced concern about the use of AI to create non-consensual imagery and called the situation distressing.

Yvette D. Clarke, a Democratic representative from New York, highlighted the increased accessibility and affordability of deepfake creation due to advancements in artificial intelligence, noting that similar incidents are unfortunately not uncommon in today’s digital landscape.