Artificial intelligence (AI) systems can sometimes recognize patterns in images more effectively than the human eye. That raises a practical question: when interpreting X-rays for signs of pneumonia, when should a doctor trust an AI model’s advice, and when should she disregard it?

Researchers from MIT and the MIT-IBM Watson AI Lab suggest that a personalized training approach could help physicians make such decisions. They have developed a system that teaches users how to collaborate effectively with an AI assistant.

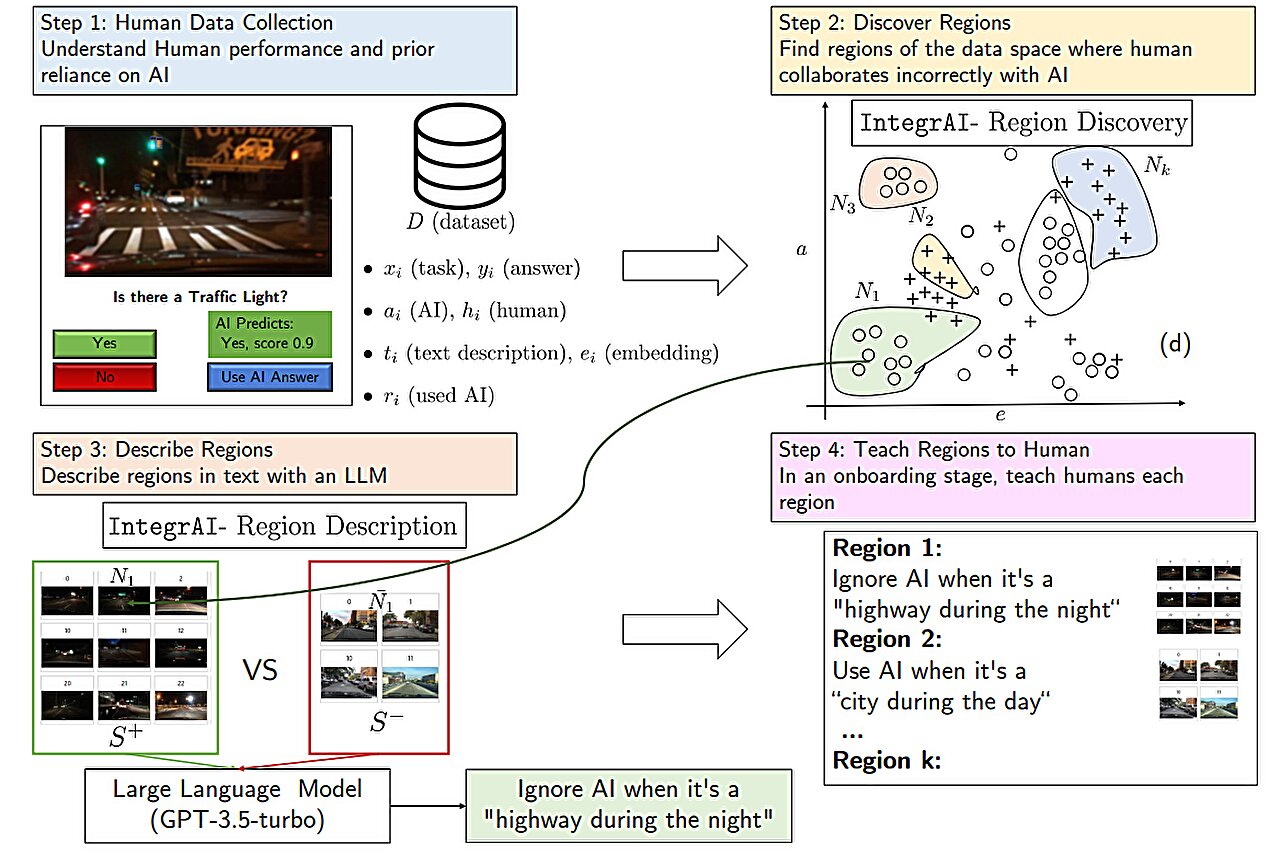

The training method aims to prepare a radiologist for situations where the AI’s advice may be wrong, so that she learns when not to rely on it. Guided by rules for collaborating with the AI that are written in plain language, the radiologist practices working with the AI in training exercises and receives feedback on both her own performance and the AI’s.
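To make the exercise-and-feedback loop concrete, here is a minimal Python sketch. It assumes each exercise bundles an AI prediction, the ground-truth label, and one plain-language rule, and that feedback simply reports whether the user and the AI were each correct. The names `Exercise` and `give_feedback`, and the example rule text, are hypothetical and are not taken from the researchers’ code.

```python
from dataclasses import dataclass


@dataclass
class Exercise:
    """One onboarding exercise (hypothetical structure, for illustration only)."""
    item_id: str
    ai_prediction: str
    true_label: str
    rule: str  # plain-language collaboration rule shown to the user


def give_feedback(ex: Exercise, user_answer: str) -> str:
    """Report whether the user and the AI were each correct, plus the relevant rule."""
    user_ok = user_answer == ex.true_label
    ai_ok = ex.ai_prediction == ex.true_label
    lines = [
        f"Your answer was {'correct' if user_ok else 'incorrect'}.",
        f"The AI's prediction was {'correct' if ai_ok else 'incorrect'}.",
    ]
    # Point out the cases where trusting (or overriding) the AI made the difference.
    if ai_ok and not user_ok:
        lines.append("Here, relying on the AI would have helped.")
    elif user_ok and not ai_ok:
        lines.append("Good call: the AI was wrong on this example.")
    lines.append(f"Relevant rule: {ex.rule}")
    return "\n".join(lines)


if __name__ == "__main__":
    ex = Exercise(
        item_id="xray-001",
        ai_prediction="pneumonia",
        true_label="no pneumonia",
        # Hypothetical rule text, invented for this example.
        rule="Double-check the AI's pneumonia calls on low-contrast images.",
    )
    print(give_feedback(ex, user_answer="pneumonia"))
```

Keeping feedback on the user and on the AI as separate lines mirrors the idea that the trainee should learn about both her own accuracy and the model’s, not just the final outcome.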

The researchers found that this training process improved accuracy by about 5 percent when humans and AI collaborated on an image analysis task. Because the system builds its training from data on how a human and an AI model perform a specific task, it can be adapted to many different scenarios in which humans and AI models work together.

Most tools come with tutorials, yet AI tools are typically deployed without any structured training on how to use them. Hussein Mozannar, a graduate student in the Institute for Data, Systems, and Society, stresses that this gap needs to be addressed systematically, from both a methodological and a behavioral perspective.

The researchers believe this type of training will be a crucial part of education for health care professionals, especially as AI is used to inform treatment decisions. David Sontag, a professor of electrical engineering and computer science at MIT, suggests that medical education and the design of clinical trials may need to be rethought.

The study, authored by Mozannar along with Jimin J. Lee, Dennis Wei, Prasanna Sattigeri, and Subhro Das, will be presented at the Conference on Neural Information Processing Systems (NeurIPS) and is available on the arXiv preprint server.

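The researchers’ training method is designed to evolve as the AI system’s capabilities change and as users’ understanding of the AI develops. Using data on how the human and the AI perform a task, the system identifies situations where the human collaborates incorrectly with the AI, for example by trusting its prediction when it is wrong or ignoring it when it is right. It then uses a large language model to describe each of these situations as a rule in natural language, refines those rules, and builds training exercises from them to improve decision-making accuracy.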
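The region-finding step can be sketched in Python under strong simplifying assumptions. The sketch assumes every logged example has already been assigned to a region (the study discovers these regions from data; here they are simply given), compares the AI’s accuracy with the human’s own accuracy in each region, and flags regions where the human’s actual reliance on the AI falls noticeably short of the better option. The study uses a large language model to phrase each region as a rule; a fixed string template stands in for that step here. All names (`Record`, `find_onboarding_regions`, `min_gain`) and the region labels in the usage example are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Record:
    region: str          # hypothetical precomputed region label
    ai_correct: bool     # was the AI's prediction correct?
    human_correct: bool  # was the human's own (unaided) answer correct?
    relied_on_ai: bool   # did the human adopt the AI's answer?


def find_onboarding_regions(records, min_gain=0.1):
    """Return (region, rule_text) pairs where a different reliance policy would help.

    min_gain is a hypothetical threshold on the accuracy the team is leaving behind.
    """
    by_region = defaultdict(list)
    for r in records:
        by_region[r.region].append(r)

    flagged = []
    for region, rs in sorted(by_region.items()):
        n = len(rs)
        ai_acc = sum(r.ai_correct for r in rs) / n
        human_acc = sum(r.human_correct for r in rs) / n
        # Team accuracy given how the human actually chose to rely (or not) on the AI.
        team_acc = sum(
            r.ai_correct if r.relied_on_ai else r.human_correct for r in rs
        ) / n
        # Flag the region if always following the better source would beat current behavior.
        if max(ai_acc, human_acc) - team_acc >= min_gain:
            action = "trust the AI" if ai_acc > human_acc else "rely on your own judgment"
            # Stand-in for the LLM step: a fixed template instead of generated text.
            flagged.append((region, f"For examples like '{region}', {action}."))
    return flagged


if __name__ == "__main__":
    records = [
        Record("blurry night", ai_correct=True, human_correct=False, relied_on_ai=False),
        Record("blurry night", ai_correct=True, human_correct=False, relied_on_ai=False),
        Record("clear day", ai_correct=True, human_correct=True, relied_on_ai=True),
        Record("clear day", ai_correct=False, human_correct=True, relied_on_ai=True),
    ]
    for region, rule in find_onboarding_regions(records):
        print(region, "->", rule)
```

In this toy data the human ignores a reliable AI on "blurry night" images and over-trusts it on "clear day" images, so both regions are flagged with opposite rules. Each flagged region and its rule could then seed training exercises, in the spirit of the onboarding exercises the article describes.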

The onboarding process significantly improved users’ accuracy on tasks such as identifying traffic lights in images, without slowing them down. It was less effective for answering general knowledge questions, possibly because the AI model used for that task, ChatGPT, accompanied each answer with an explanation that may already have helped users judge when to trust it.

Giving users recommendations without onboarding had the opposite effect: users performed worse and took longer to make decisions. Mozannar emphasizes that recommendations need to be backed by adequate data to be useful, and the team plans further experiments to evaluate the long-term impact of onboarding.