Michigan is set to join a growing effort by states to curb deceptive uses of artificial intelligence and manipulated media through state-level regulation. The move comes as Congress and the Federal Election Commission continue to debate broader rules ahead of the 2024 elections.

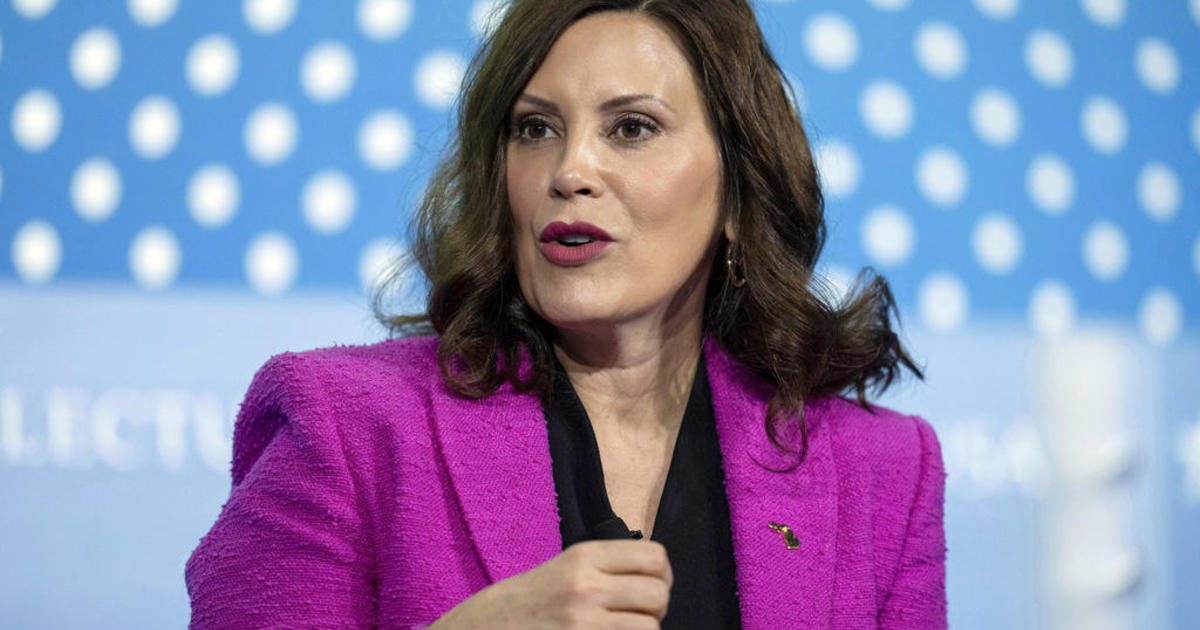

Under legislation expected to be signed by Governor Gretchen Whitmer, state and federal political campaigns will be required to clearly state which political advertisements airing in Michigan were created using artificial intelligence. The legislation will also prohibit the use of AI-generated deepfakes within 90 days of an election unless a separate disclosure identifies the content as manipulated.

Deepfakes are fabricated media that depict people doing or saying things they never did or said. They are produced with generative artificial intelligence, a form of AI that can quickly create convincing images, videos, or audio clips.

There is a growing apprehension that generative AI could be exploited in the upcoming 2024 presidential campaign to deceive voters, impersonate candidates, and subvert elections at an unprecedented scale and pace.

Various candidates and committees involved in the election are already experimenting with this rapidly evolving technology, which enables the creation of realistic fake images, videos, and audio clips within seconds. This technology has become more accessible, efficient, and affordable for public use in recent years.

For instance, in April the Republican National Committee released an entirely AI-generated advertisement imagining the future of the United States if President Joe Biden were reelected. The ad carried a small-print disclaimer noting that it was AI-generated, but it featured realistic, fabricated images of boarded-up storefronts, armed military patrols, and a surge in immigration causing alarm.

In another instance, Never Back Down, a super PAC supporting Republican Governor Ron DeSantis of Florida, used an AI voice-cloning tool in July to imitate the voice of former President Donald Trump in a social media post, making it sound as though he had spoken words he never actually said aloud.

Experts warn that these examples only hint at how campaigns or outside groups could use AI deepfakes in more malicious ways.

Several states, including California, Minnesota, Texas, and Washington, have already enacted laws governing deepfakes in political advertising. Similar legislation has been proposed in Illinois, Kentucky, New Jersey, and New York, according to the advocacy group Public Citizen.

Michigan’s legislation requires any individual, committee, or other entity that distributes an advertisement for a candidate to clearly disclose whether it uses generative AI. In print ads, the disclosure must match the font size of the predominant text; in television ads, it must be prominently displayed for at least four seconds, according to an analysis by the state House Fiscal Agency.

Deepfakes circulated within 90 days of an election must also carry a separate disclaimer informing viewers that the content has been manipulated to depict speech or conduct that did not occur. For videos, the disclaimer must remain clearly visible for the entire duration.

Violating the proposed laws would be a misdemeanor punishable by up to 93 days in prison, a fine of up to $1,000, or both for a first offense. The attorney general or the candidate harmed by the deceptive media could also ask the appropriate circuit court for relief.

While federal lawmakers from both parties have stressed the importance of regulating deepfakes in political advertising, Congress has not yet passed any such legislation. A recent bipartisan Senate bill, co-sponsored by Senators Amy Klobuchar and Josh Hawley, would prohibit “materially deceptive” deepfakes relating to federal candidates, with exceptions for parody and satire.

Michigan Secretary of State Jocelyn Benson took part in a bipartisan discussion on AI and elections in Washington, D.C., in early November, where she called for passage of the federal Protect Elections from Deceptive AI Act proposed by Senators Klobuchar and Hawley. Benson also urged senators to push for similar legislation in their home states.

Benson noted that federal law is limited in its ability to regulate AI at the state and local levels and said federal funding is needed to address the challenges AI poses. That support, she said, would help states hire personnel to handle AI-related issues and teach voters how to identify and respond to deepfakes.

In August, the Federal Election Commission took a procedural step toward potentially regulating AI-generated deepfakes in political advertisements under its existing rules against “fraudulent misrepresentation.” The commission solicited public comment on a petition submitted by Public Citizen but has yet to reach a decision.

Major social media platforms have also introduced guidelines to limit the spread of harmful deepfakes. Meta, the parent company of Facebook and Instagram, announced a policy requiring political ads on its platforms to disclose whether they were created using AI. Google unveiled a similar AI labeling policy in September for political ads shown on YouTube and other Google platforms.