A team of researchers at Nanyang Technological University, Singapore (NTU Singapore) has introduced an innovative computer program known as DIRFA (Diverse yet Realistic Facial Animations), marking a significant breakthrough in the realms of artificial intelligence and multimedia communication.

This AI-driven advancement showcases remarkable capabilities by transforming a standard audio clip and a static facial image into vivid 3D animated videos. These videos redefine the landscape of online media creation by presenting a diverse array of facial expressions, natural head movements, and precise synchronization of mouth movements with the audio content.

Evolution of DIRFA

The core feature of DIRFA lies in its sophisticated algorithm, seamlessly blending audio inputs with visual imagery to generate three-dimensional videos. By meticulously analyzing the tones and patterns of the audio, DIRFA adeptly anticipates and replicates corresponding facial expressions, head gestures, and speech patterns. Consequently, the final output exhibits a high degree of realism, with facial expressions intricately mirroring the nuances of the spoken words.

The development of DIRFA signifies a significant leap forward from previous technologies in this domain, which often grappled with capturing the intricacies of diverse poses and individual expressions.

Unlike conventional methods that struggled to capture the subtleties of human emotions or handle varied head positions effectively, DIRFA offers a more adaptable and practical solution. Its proficiency in capturing a wide spectrum of emotional nuances and adapting to different head orientations sets it apart.

This innovation not only paves the way for enhanced digital media interactions but also provides a glimpse into a future where electronic communication is more expressive and personalized, transcending the realm of mere technological advancement.

Technology and Training Underlying DIRFA

The ability of DIRFA to replicate human-like facial expressions and head movements stems from an extensive training regimen. The NTU Singapore team leveraged over one million audio clips from the VoxCeleb2 Dataset to train the software on a vast dataset.

With inputs from over 6,000 individuals encompassing facial expressions, head movements, and speech patterns, DIRFA has honed its ability to discern and mimic the subtle nuances that define individual expressions and speech characteristics.

Dr. Wu Rongliang, the lead researcher, and Associate Professor Lu Shijian, the co-author, have provided valuable insights into the significance of their contributions to this study.

Associate Professor Lu emphasizes that “the impact of our research could be profound and far-reaching, revolutionizing the realm of media communication by enabling the creation of highly realistic videos of individuals speaking, integrating AI and machine learning techniques.” The videos produced through our system exhibit accurate lip movements, vibrant facial expressions, and natural head poses, solely based on audio inputs and dynamic images, representing a technological advancement building upon prior research efforts.

Dr. Wu Rongliang elaborates on the diverse facets of speech, highlighting its variations in tone, duration, and other parameters that convey rich information about the speaker’s mental state and identity markers. The strategy employed in this study represents a pioneering endeavor in sound representation learning within AI and machine learning, aimed at enhancing performance levels.

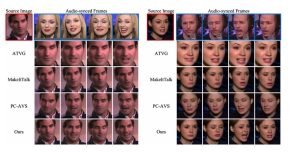

Evaluations of DIRFA have been conducted alongside state-of-the-art audio-driven talking mouth generation methods at NTU Singapore.

Potential Applications

One of the most promising applications of DIRFA lies in the realm of healthcare, particularly in the development of advanced virtual assistants and chatbots. By generating realistic and responsive facial animations, DIRFA has the potential to significantly enhance user experiences on online healthcare platforms, enriching interactions and bridging existing gaps in personalized care and emotional support through virtual mediums.

DIRFA also holds promise in assisting individuals with speech or visual impairments, offering a powerful tool for expressing thoughts and emotions through evocative avatars or online representations. By facilitating improved communication and expression, DIRFA can empower individuals to engage more effectively in the digital realm, bridging the gap between their intentions and expressions.

Future Prospects and Challenges

While DIRFA has made significant strides in producing lifelike facial expressions from audio inputs, challenges persist due to the complexity of individual expressions. Each individual’s speech patterns and facial expressions vary, posing a challenge in accurately capturing these nuances. The DIRFA team acknowledges the need for enhancements, particularly in program flexibility and expression control, to address limitations such as transitioning between different facial expressions seamlessly.

To enhance DIRFA’s relevance and accessibility, the NTU team plans to enrich the dataset with a broader range of tonal sound clips and facial expressions. This expansion is expected to render the visual animations produced by DIRFA more versatile, accurate, and realistic, thereby elevating their quality and authenticity.

The Transformative Potential of DIRFA

DIRFA stands at the forefront of revolutionizing multimedia communication with its groundbreaking approach to synthesizing authentic facial animations from audio inputs. By blurring the boundaries between the digital and physical realms, this system pushes the boundaries of online interaction, enhancing the quality and authenticity of digital representations.

The potential impact of technologies like DIRFA on online communication and visualization is immense and captivating. As these technologies evolve, they promise to offer more engaging, personalized, and emotionally resonant modes of online interaction, heralding a new era of digital expression and connection.