Everyday YouTube creators will soon be able to add original AI-generated music to their Shorts. The feature is being built in collaboration with well-known artists including Charlie Puth, T-Pain, John Legend, and Sia. Called Dream Track for Shorts, this first step in Google's exploration of AI music came out of a partnership between YouTube, Google's DeepMind AI research lab, and a number of prominent artists.

Creators can already add music to their Shorts from an extensive library, a capability that other short-form platforms such as TikTok and Instagram Reels also offer. Dream Track for Shorts aims to set YouTube apart by combining user creativity, artist partnerships, and the familiar experience of describing what you want in a text prompt.

YouTube describes Dream Track as a “fresh creative playground.” Users can generate music ranging from “Upbeat sound music in the style of Charlie Puth” to “Intense jazz-infused R&B with soaring vocals in John Legend’s design” or even “Mid-tempo Reggaeton song about a particular type of automotive energy.” To create a track, a user describes the style they want and selects an artist from a carousel. The interface is designed to be simple, giving users something like a personalized Cameo from an artist without the cost of session musicians or studio time. The roster of participating artists broadens the available styles, and it is expected to grow over time to cover a wider range of musical tastes.

This experimental feature is not yet available in every region, but it is expected to roll out soon in the mobile app under “Try experimental new features,” where users can enable one experiment at a time.

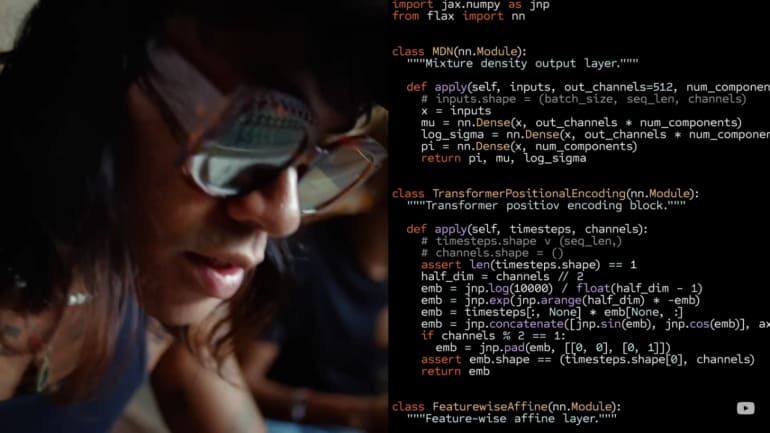

Both Dream Track for Shorts and a set of music AI tools, developed with musicians, songwriters, and producers to support their creative process, are built on Google DeepMind’s Lyria model. According to Google’s AI research lab, earlier AI models struggled to generate complex, convincing high-fidelity music because of how intricate audio is to compose. Lyria, which Google describes as its most advanced music generation model, aims to address this by keeping the music coherent across sections and passages.

On the creative side, the music AI tools are meant to streamline artists’ workflows and spark new ideas. They can turn a sung melody into a simple or elaborate instrumental arrangement, for example converting a hummed melody into a whistle section. These tools have sped up the creative process considerably, with artists such as Ed Sheeran now able to hum a melody into a phone and quickly hear it played back by the London Philharmonic Orchestra.

In August, YouTube and Universal Music Group announced plans to take an “artist-centric” approach to AI in music. They set up a music AI incubator that brings together technologists, artists, songwriters, and producers from a range of genres to encourage creativity and innovation in the field.

The idea is to treat AI as a tool, much like samplers, synthesizers, or drum machines, rather than as a replacement for human talent, a fear that had been widespread in the music industry. Using the technology responsibly, with oversight from AI experts and from ethics-minded people within music labels and artist communities, is crucial to preventing misuse and preserving artistic integrity.