Israel’s military reportedly used an AI tool called Lavender that flagged 37,000 Palestinians as suspected Hamas members.

By Gaby Del Valle, a policy reporter covering immigration, border surveillance technology, and the rise of the New Right.

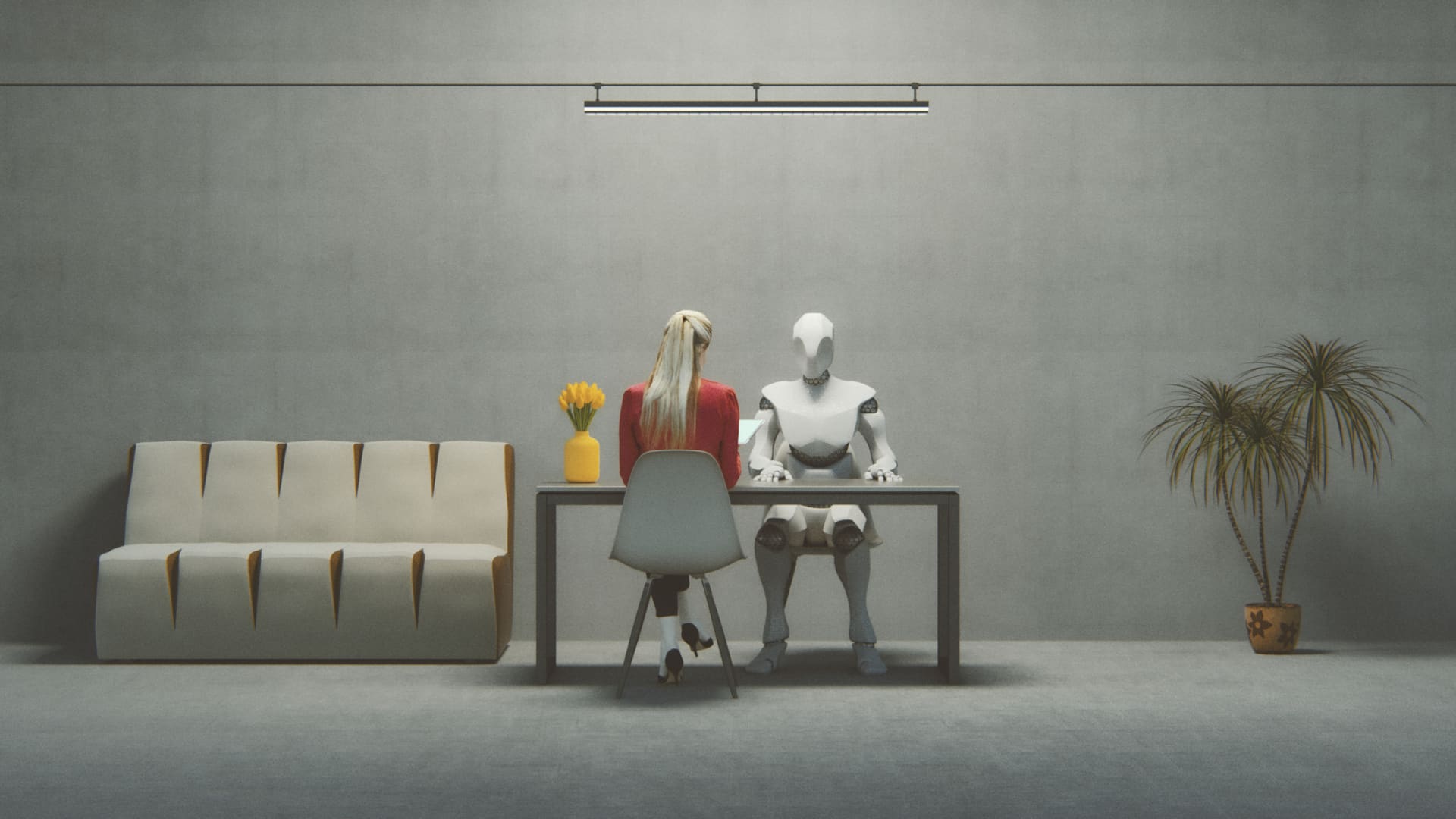

Reporting by +972 Magazine and Local Call says Israel’s military has used an artificial intelligence system called Lavender to speed up target selection in Gaza. According to the reports, the military favored speed over precision, and many civilians were killed as a result.

Lavender was allegedly developed after Hamas’s October 7th attacks. At its peak, the system flagged 37,000 people in Gaza as suspected “Hamas militants” and marked them for assassination.

The Israeli military denied that any kill list exists, but it acknowledged using Lavender as a tool to help analysts identify targets. It said analysts are required to verify each target independently to ensure compliance with international law and IDF directives.

Israeli intelligence officers interviewed for the reports said they often acted as little more than a “rubber stamp” for Lavender’s decisions, giving targets minimal scrutiny before authorizing strikes. The system’s training data reportedly included people only loosely associated with Hamas, which raises concerns that the label “Hamas operative” was applied far too broadly.

Lavender reportedly worked by assessing characteristics associated with known Hamas members, such as communication patterns or frequent changes in personal details. It then rated people in Gaza by how closely they resembled known Hamas operatives, and anyone who scored above a set threshold was marked as a target.

The system reportedly had an accuracy rate of about 90 percent, which means roughly 10 percent of the people it identified as Hamas members had no affiliation with the group. Errors such as mistaken identity caused by shared names or family ties were reportedly treated as statistically acceptable, with fatal consequences for innocent civilians.

Intelligence officers were allegedly given wide latitude on civilian casualties, and directives permitted strikes on even low-level Hamas operatives when civilians nearby would also be killed. Homes were reportedly bombed even when the presence of the intended target had not been confirmed, killing many civilians.

Critics such as Mona Shtaya warn that Lavender is an extension of Israel’s extensive surveillance of Palestinians in Gaza and the West Bank. The possibility that Israeli defense firms could export such technologies raises further ethical concerns.

During its offensive in Gaza, Israel’s military has also used other advanced technologies against Hamas, including facial recognition and systems that select buildings as targets. These tools have been linked to civilian casualties and have fueled debate over the ethical use of surveillance and force in wartime.