- The crypto market was abuzz with excitement over the potential of text-to-video generation, leading to a surge in AI tokens following OpenAI’s unveiling of a demo featuring Sora.

- However, for this technology to reach mainstream adoption, an immense amount of computational power will be necessary. The demand for server-grade H100 GPUs is projected to be staggering, exceeding the annual production capacity of Nvidia or the combined usage of these GPUs by major tech giants like Microsoft (MSFT), Meta (META), and Google (GOOG).

Mainstream text-to-video generation is estimated to require hundreds of thousands of graphics processing units (GPUs), more than Microsoft, Meta, and Google currently use combined.

The initial showcase of OpenAI’s Sora, a text-to-video generator, captivated audiences worldwide and reignited interest in AI tokens, leading to a notable surge in their value post-demonstration.

Subsequently, numerous AI projects within the cryptocurrency space emerged, each pledging to deliver text-to-video and text-to-image capabilities. As a result, the AI token sector now boasts a market capitalization of $25 billion according to CoinGecko data.

AI-generated video production depends on large numbers of GPUs from industry giants such as Nvidia (NVDA) and AMD (AMD), whose high-volume data processing capabilities underpin the AI revolution.

The pivotal question remains: How many GPUs are necessary to propel AI-generated video content into the mainstream? The answer points to a requirement exceeding the GPU inventory possessed by major tech corporations in 2023.

The Need for 720,000 Nvidia H100 GPUs

A recent analysis by Factorial Funds suggests that a substantial fleet of 720,000 top-tier Nvidia H100 GPUs is essential to sustain the creative ecosystem of platforms like TikTok and YouTube.

Factorial Funds’ research indicates that training Sora requires approximately 10,500 powerful GPUs for a month, and that at inference each GPU can generate roughly 5 minutes of video per hour.
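
The two figures above can be turned into rough GPU-hour and throughput numbers. The following is a minimal sketch, not Factorial Funds' own model: it assumes a 30-day month and GPUs running around the clock at full utilization, neither of which is stated in the article, and all function names are illustrative.

```python
import math

# Figures quoted in the article (attributed to Factorial Funds).
TRAINING_GPUS = 10_500           # GPUs used for roughly one month of training
VIDEO_MIN_PER_GPU_HOUR = 5       # minutes of video one GPU generates per hour
FLEET_SIZE = 720_000             # H100 fleet cited for TikTok/YouTube-scale use

# Assumptions made only for this sketch (not stated in the article).
HOURS_PER_MONTH = 30 * 24        # a 30-day month
HOURS_PER_DAY = 24               # every GPU busy around the clock


def training_gpu_hours(gpus=TRAINING_GPUS, hours=HOURS_PER_MONTH):
    """Total GPU-hours consumed by one training run."""
    return gpus * hours


def daily_video_minutes(gpus, rate=VIDEO_MIN_PER_GPU_HOUR, hours=HOURS_PER_DAY):
    """Minutes of video a fleet can generate per day at full utilization."""
    return gpus * rate * hours


def gpus_for_daily_minutes(minutes_per_day, rate=VIDEO_MIN_PER_GPU_HOUR,
                           hours=HOURS_PER_DAY):
    """Smallest fleet that can generate the given minutes of video per day."""
    return math.ceil(minutes_per_day / (rate * hours))


print(f"Training: {training_gpu_hours():,} GPU-hours")
print(f"One GPU: {daily_video_minutes(1)} minutes of video per day")
print(f"Fleet of {FLEET_SIZE:,}: {daily_video_minutes(FLEET_SIZE):,} minutes per day")
```

Under these idealized assumptions a single GPU yields 120 minutes of video per day, so the per-day figure for the full fleet is a ceiling; real utilization would be lower.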

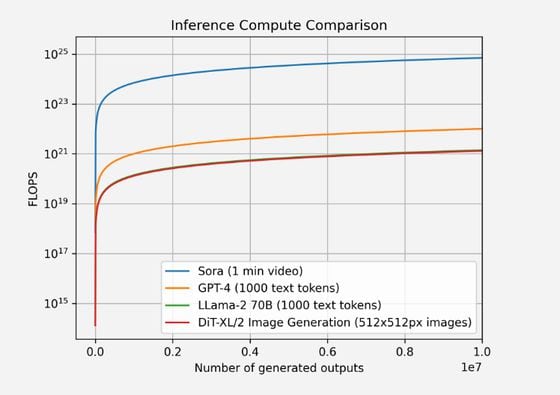

As illustrated in the accompanying chart, the computational demands for training Sora far surpass those of GPT-4 or static image generation.

With widespread adoption, inference is poised to outstrip training in computational load: as more individuals and organizations use models like Sora to generate video, the resources consumed in producing new content (inference) will exceed those needed for the initial model training.

For context, Nvidia shipped 550,000 H100 GPUs in 2023.

Statista data shows that the twelve largest customers for Nvidia’s H100 GPUs collectively hold 650,000 units, with the two largest, Meta and Microsoft, accounting for 300,000 between them.

(Statista)
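
To make the gap concrete, the supply figures quoted above can be compared against the 720,000-GPU estimate. This is a small sketch using only numbers from the article; the variable and function names are illustrative.

```python
# Figures quoted in the article.
REQUIRED_H100S = 720_000
SUPPLY = {
    "Nvidia H100 shipments, 2023": 550_000,
    "Top twelve H100 customers combined": 650_000,
    "Meta and Microsoft combined": 300_000,
}


def shortfall(required, available):
    """Units still missing after the available supply is used (never negative)."""
    return max(required - available, 0)


def coverage(required, available):
    """Fraction of the requirement that the available supply would cover."""
    return available / required


for label, units in SUPPLY.items():
    print(f"{label}: covers {coverage(REQUIRED_H100S, units):.0%}, "
          f"short by {shortfall(REQUIRED_H100S, units):,} GPUs")
```

Even the combined holdings of the twelve largest customers fall 70,000 units short of the estimate, and a full year of 2023 shipments falls 170,000 short.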

Assuming a unit cost of $30,000, the endeavor to materialize Sora’s vision of mainstream AI-driven text-to-video content would entail an investment of $21.6 billion, nearly equivalent to the entire current market capitalization of AI tokens.
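
The arithmetic behind that figure is straightforward to check. The sketch below uses the article's own numbers (720,000 GPUs, a $30,000 unit price, and the $25 billion AI token market capitalization cited from CoinGecko); the names are illustrative, and hardware costs beyond the GPUs themselves are not modeled.

```python
# Figures quoted in the article.
REQUIRED_H100S = 720_000
UNIT_COST_USD = 30_000
AI_TOKEN_MARKET_CAP_USD = 25_000_000_000


def fleet_cost(units, unit_cost):
    """Purchase cost of the GPUs alone, in US dollars."""
    return units * unit_cost


total = fleet_cost(REQUIRED_H100S, UNIT_COST_USD)
share = total / AI_TOKEN_MARKET_CAP_USD

print(f"Fleet cost: ${total / 1e9:.1f} billion")
print(f"Share of AI token market cap: {share:.0%}")
```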

However, this projection hinges on the feasibility of procuring the requisite GPUs.

Diversification in GPU Offerings

While Nvidia stands as a prominent figure in the AI landscape, it’s crucial to acknowledge the presence of other players in the field.

AMD, a perennial competitor to Nvidia, also delivers competitive GPU solutions and has witnessed substantial stock value appreciation, from the $2 range in late 2012 to over $175 presently.

Furthermore, alternative avenues for accessing GPU computational power exist through platforms like Render (RNDR) and Akash Network (AKT), offering distributed GPU computing services. Yet, it’s worth noting that the majority of GPUs on these networks are consumer-grade gaming GPUs, which notably lack the potency of Nvidia’s server-grade H100 GPUs or AMD’s offerings.

In conclusion, the realization of text-to-video potential, as championed by Sora and similar protocols, demands a monumental hardware investment. While the concept holds immense promise and could revolutionize creative processes in industries like Hollywood, widespread adoption remains a distant prospect necessitating further hardware advancements.

Edited by Shaurya Malwa.