Recently, Siri has started trying to describe images received through CarPlay or announced notifications. In typical Siri fashion, the results have been inconsistent.

Despite this, Apple remains committed to advancing its AI technology. In a recently published research paper, Apple’s AI researchers outline a method that could let Siri do more than just identify what is in an image. The exciting part is that they believe their model can match, and in some cases outperform, GPT-4 on these tasks.

In the paper, titled ReALM: Reference Resolution As Language Modeling, Apple introduces a technique that could make a virtual assistant powered by a large language model (LLM) more capable. ReALM takes into account both what is on the user’s screen and which tasks are currently active. Here’s a breakdown of the different types of entities discussed in the paper:

- On-screen Entities: These are entities currently visible on the user’s screen.
- Conversational Entities: These entities are relevant to the ongoing conversation. They could come from a previous user request (e.g., in “Call Mom,” the contact information for Mom is the relevant entity) or be provided by the virtual assistant (e.g., a list of options presented to the user).
- Background Entities: These entities come from background processes that are not necessarily part of what the user sees on screen or of their interaction with the assistant, such as an alarm that starts ringing or music playing in the background.
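The paper’s title frames reference resolution as a language-modeling problem. As a minimal sketch, assuming a made-up plain-text format (not the format Apple actually uses), candidate entities of the three types could be tagged and serialized alongside the user’s request so that a text-only model can pick out the one being referred to. `EntityType`, `Entity`, and `build_prompt` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class EntityType(Enum):
    """The three entity categories described in the article."""
    ON_SCREEN = "on-screen"
    CONVERSATIONAL = "conversational"
    BACKGROUND = "background"


@dataclass
class Entity:
    entity_id: int
    kind: EntityType
    description: str  # hypothetical free-text description of the entity


def build_prompt(query: str, entities: list[Entity]) -> str:
    """Serialize candidate entities and the user's request into plain text.

    The line format here is an illustrative assumption, not ReALM's encoding.
    """
    lines = [f"[{e.entity_id}] ({e.kind.value}) {e.description}" for e in entities]
    lines.append(f"User: {query}")
    lines.append("Which entity IDs does the user refer to?")
    return "\n".join(lines)


if __name__ == "__main__":
    # Hypothetical candidates, one of each type.
    candidates = [
        Entity(1, EntityType.ON_SCREEN, "phone number on a business web page"),
        Entity(2, EntityType.CONVERSATIONAL, "contact card for Mom"),
        Entity(3, EntityType.BACKGROUND, "alarm currently ringing"),
    ]
    print(build_prompt("Call Mom", candidates))
```

The resulting text would then be passed to a language model, which answers with the ID of the referenced entity; turning every entity into text is what lets a text-only model reason about on-screen content at all.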

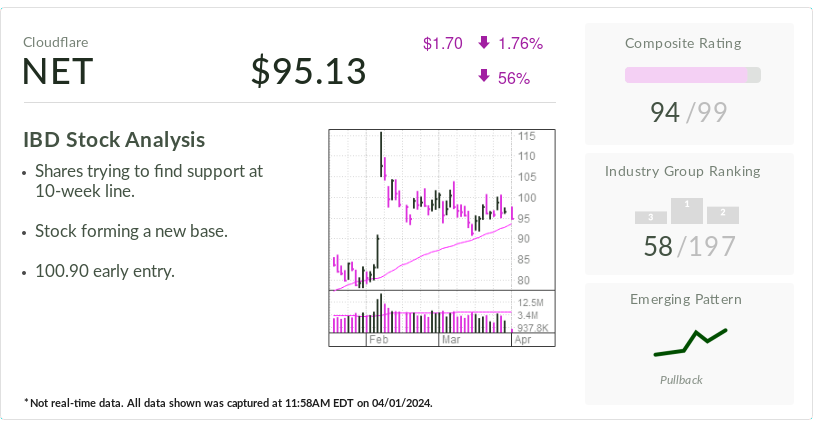

If successful, this approach could pave the way for a smarter, more context-aware Siri. Apple appears confident in its work. The study compares the performance of Apple’s model against OpenAI’s GPT-3.5 and GPT-4:

In addition to our model, we benchmarked against GPT-3.5 and GPT-4 from OpenAI, both equipped with in-context learning capabilities. While GPT-3.5 operates solely on text inputs, GPT-4 can contextualize images, leading to enhanced performance in tasks like on-screen reference resolution.

How does Apple’s model fare in comparison?

Our model showcases significant improvements across various reference types, with even the smallest version achieving absolute gains of over 5% on on-screen references compared with an existing system. The smallest model performs comparably to GPT-4, and our larger models substantially outperform it.

Impressive performance gains indeed. The report concludes:

We have demonstrated that ReALM surpasses previous approaches and performs roughly as well as the current state-of-the-art language model, GPT-4, despite having far fewer parameters, even for on-screen references and despite operating purely in the textual domain. It also outperforms GPT-4 on domain-specific user requests, making it a compelling choice for a practical, on-device reference resolution system that does not compromise on performance.

For Apple, achieving on-device efficiency without sacrificing performance is a key focus. Siri’s future looks promising, perhaps starting with iOS 18 and the WWDC 2024 event on June 10.