The administration has reported the successful completion of the tasks set out in President Biden’s Executive Order on AI within the 150-day timeframe.

Today, Vice President Kamala Harris announced that the White House Office of Management and Budget (OMB) has introduced a comprehensive government-wide policy to address the risks associated with artificial intelligence (AI) and harness its benefits. This initiative fulfills a crucial requirement of President Biden’s groundbreaking AI Executive Order, which outlined extensive directives to enhance AI safety and security, safeguard Americans’ rights, promote competition and innovation, and bolster U.S. leadership globally. Federal agencies have confirmed the fulfillment of all the tasks assigned within the 150-day period stipulated by the Executive Order, building upon their prior completion of the 90-day tasks.

The strategic approach adopted by Federal departments and agencies aligns with the Biden-Harris Administration’s commitment to positioning America as a leader in trustworthy AI innovation. Recently, OMB announced that the President’s Budget prioritizes investments in agencies’ capabilities to foster, test, secure, and integrate cutting-edge AI applications across the Federal Government effectively.

In accordance with the Executive Order, OMB’s new policy outlines the following key measures:

Addressing AI Risks

Central to the President’s vision is the prioritization of the well-being of individuals and communities. Federal agencies bear a unique responsibility to identify and mitigate AI risks, and the public must be able to trust that those agencies will protect its rights and safety.

By December 1, 2024, Federal agencies must implement concrete safeguards when using AI in ways that could impact Americans’ rights or safety. These safeguards entail a series of mandated actions to reliably assess, test, and monitor the effects of AI on the public, mitigate the risks of algorithmic discrimination, and provide transparency into how the government uses AI. They apply to a wide range of AI applications, including employment, housing, healthcare, and education.

For example, by adopting these safeguards, agencies can ensure that:

- Travelers can opt out of TSA facial recognition without delays or compromising their airport experience.

- Human oversight is maintained in evaluating the impact of AI tools in the Federal healthcare system to prevent disparities in care access.

- Individuals affected by consequential decisions made by AI-driven fraud detection systems have recourse to seek a remedy for any harms incurred due to errors by those systems.

If an agency cannot apply these safeguards, it must stop using the AI system, unless agency leadership can justify why discontinuing it would increase overall risks to safety or rights or would significantly impede essential agency operations.

OMB’s policy encourages agencies to engage with federal employee unions and adhere to the forthcoming Department of Labor guidelines on mitigating potential AI-related risks to employees, safeguarding the federal workforce amidst the government’s AI adoption. The Department is leading by example, collaborating with federal employees and labor unions to develop these guidelines and govern AI usage effectively.

Federal agencies also receive guidance on managing risks specific to AI procurement. Procuring AI solutions presents distinct challenges, necessitating safeguards for fair competition, data privacy, and transparency. Later this year, OMB plans to take action to ensure that agencies’ AI contracts align with its policy and protect the public from AI-related risks. Through the Request for Information (RFI) released today, OMB is soliciting public feedback on how private sector entities supporting the Federal Government can adhere to best practices and standards.

Enhancing AI Transparency

The policy released today requires Federal agencies to improve the transparency of their AI use by:

- Publishing expanded annual inventories of their AI use cases, detailing their risk management approaches and the impact of these use cases on rights and safety.

- Reporting metrics about the agency’s AI use cases that are withheld from the public inventory because of their sensitivity.

- Notifying the public of any AI exemptions from OMB policy provisions through waivers and justifying such exemptions.

- Disclosing government-owned AI code, models, and data where public or government operations are not jeopardized.

OMB has also made available a comprehensive draft directive that outlines the specifics of this transparency requirement.

Promoting Responsible AI Innovation

OMB’s policy aims to foster responsible AI innovation within Federal agencies. AI technology presents significant opportunities for addressing pressing societal challenges, such as the climate crisis, public health, and public safety. Various agencies have already begun using AI to improve customer experiences and are piloting AI applications such as chatbots.

Cultivating the AI Workforce

People are at the core of responsible AI deployment to serve the public effectively. Under OMB’s guidance, agencies are urged to expand and enhance their AI talent pool to drive innovation, governance, and risk management. The Biden-Harris Administration has committed to recruiting 100 AI professionals by Summer 2024 through the National AI Talent Surge established by Executive Order 14110. Additionally, a career fair for AI roles across the Federal Government is scheduled for April 18 to bolster retention and underscore the significance of AI talent within the Federal workforce.

Strengthening AI Governance

To ensure accountability and oversight of AI use across the Federal Government, OMB’s policy requires Federal agencies to:

- Appoint Chief AI Officers to coordinate AI usage within their agencies. OMB and the Office of Science and Technology Policy have been convening these officials in a Chief AI Officer Council since December to align efforts and prepare for OMB’s guidance.

- Establish AI Governance Boards chaired by the Deputy Secretary or equivalent to oversee and govern AI deployment within the agency. Several agencies, including the Departments of Defense, Veterans Affairs, Housing and Urban Development, and State, have already set up these governance bodies, with all CFO Act agencies required to do so by May 27, 2024.

The Administration has unveiled additional initiatives to promote responsible AI use in government, such as issuing an RFI on Responsible Procurement of AI in Government to inform future OMB actions. The expanded reporting on the 2024 Federal AI Use Case Inventory aims to enhance public awareness of the Federal Government’s AI applications.

With these measures, the Administration underscores its commitment to fostering the safe, secure, and ethical use of AI. These initiatives complement the Administration’s efforts outlined in the Blueprint for an AI Bill of Rights and the NIST AI Risk Management Framework, emphasizing public interest responsibility, responsible AI innovation, and risk management protocols.

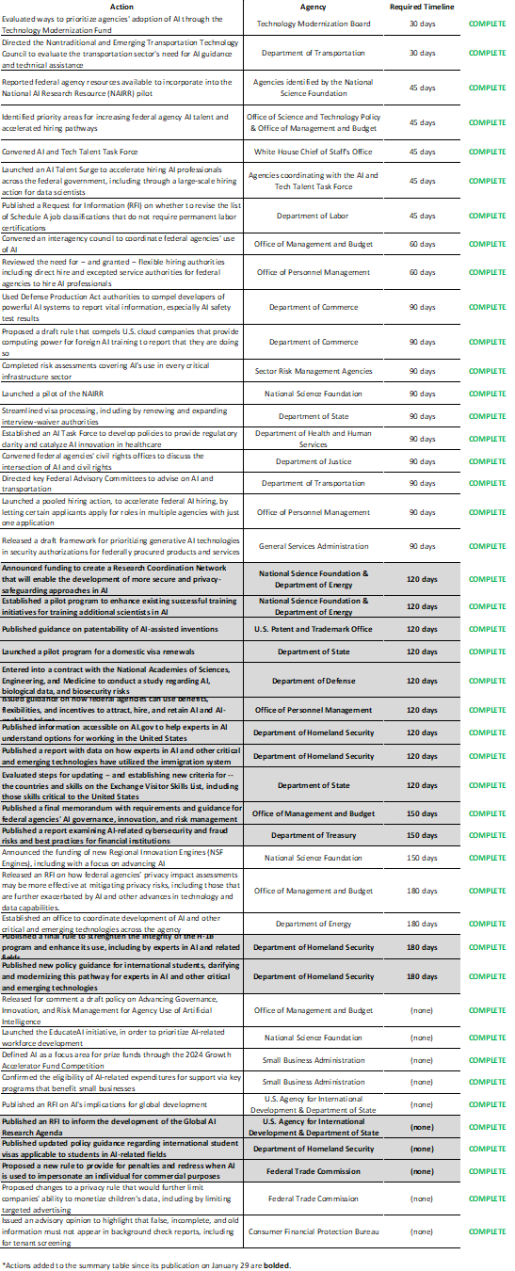

As Executive Order 14110 reaches its 150-day milestone, the table below provides an updated summary of the tasks completed by federal agencies in response to the Executive Order.