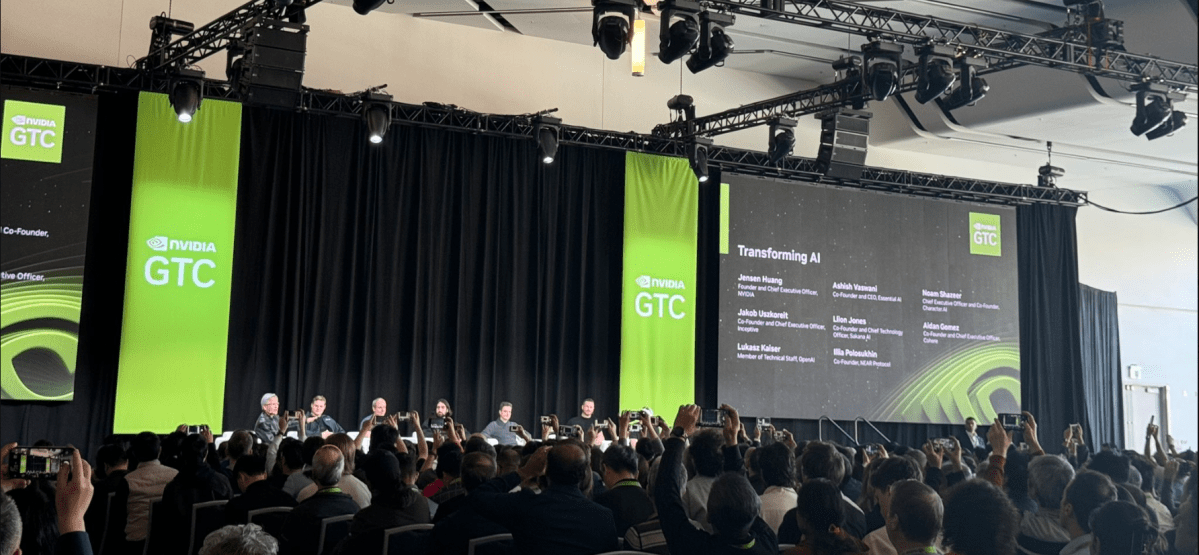

Seven of the eight authors of the influential paper “Attention Is All You Need,” which introduced Transformers, gathered as a group for the first time to talk with Nvidia CEO Jensen Huang at a packed press conference today.

The group comprised Noam Shazeer, co-founder and CEO of Character.ai; Aidan Gomez, co-founder and CEO of Cohere; Ashish Vaswani, co-founder and CEO of Essential AI; Llion Jones, co-founder and CTO of Sakana AI; Illia Polosukhin, co-founder of NEAR Protocol; Jakob Uszkoreit, co-founder and CEO of Inceptive; and Lukasz Kaiser, a member of the technical staff at OpenAI. Niki Parmar, co-founder of Essential AI, was unable to attend.

The Transformer, a groundbreaking neural network architecture for natural language processing that captured word context and meaning more effectively than its predecessors, the recurrent neural network and the long short-term memory network, was the brainchild of an eight-person team at Google Brain in 2017. The architecture not only laid the foundation for large language models such as GPT-4 and ChatGPT but has also been applied beyond language, in projects such as OpenAI’s Codex and DeepMind’s AlphaFold.

Gomez voices the need for innovation beyond Transformers

The creators of Transformers are now considering where AI models go next. Gomez, of Cohere, said that “the world needs something better than Transformers” and expressed hope for a successor that can elevate performance to new heights. He asked the group what innovations they see ahead, stressing the importance of moving beyond the current state of the art, which he perceives as too reminiscent of the past.

Gomez elaborated in a post-panel discussion with VentureBeat, stressing the need for innovation in AI models. He said several aspects of the Transformer architecture should be reassessed, citing its memory handling and certain architectural components. He argued that better parameterization and weight sharing could make models significantly more efficient and scalable.

Striving for unmistakable progress in AI evolution

Jones, of Sakana AI, said that any successor to Transformers must be not just better but clearly and demonstrably better. Clear progress of that kind is needed to move the industry forward, he said, noting that the field still relies on the original model despite its potential technical limitations.

Gomez agreed, pointing to the role of both technical advancement and community engagement in bringing a new architecture to the forefront. Moving from one dominant architecture to another, he said, takes excitement and momentum within the community, so a successor must be compelling enough to get people engaged in making the shift.