The original version of this story appeared in Quanta Magazine.

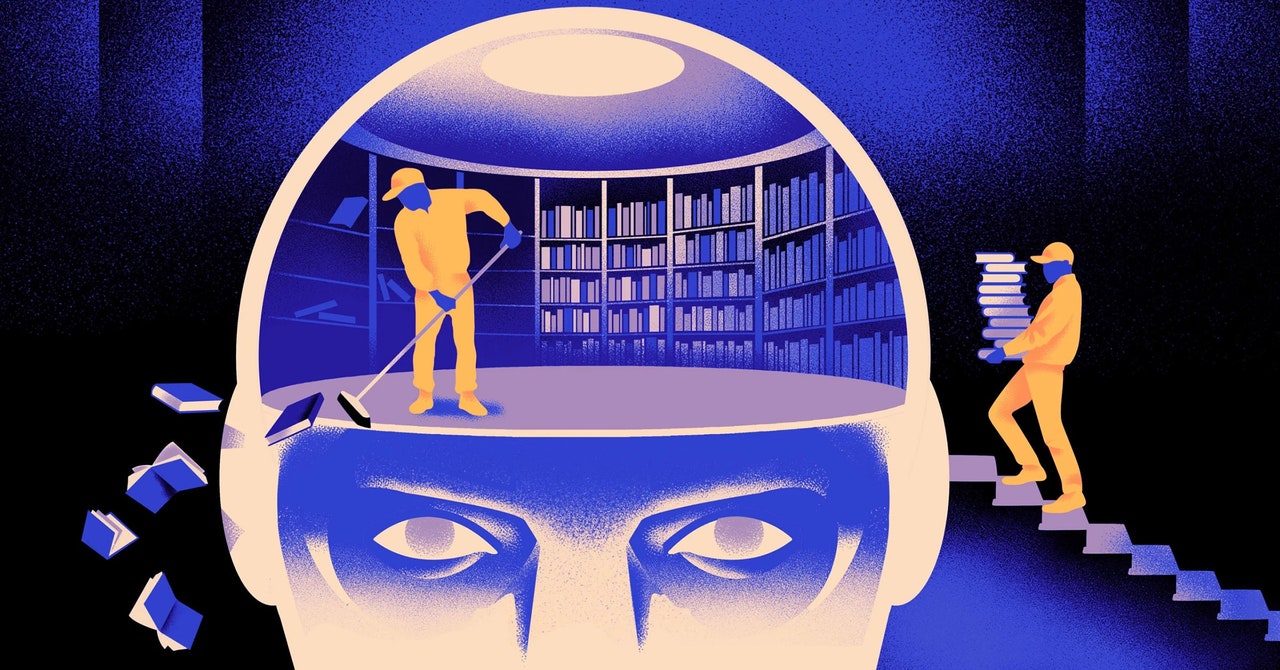

A team of computer scientists has created a nimbler, more flexible type of machine learning model. Its key feature is that it periodically forgets what it has learned. This new approach won't replace the huge models that underpin the biggest applications, but it could reveal more about how these programs understand language.

Jea Kwon, an AI engineer at the Institute for Basic Science in South Korea, called the new research “a significant advancement in the field.”

Today's AI language engines are mostly powered by artificial neural networks. Each “neuron” in the network is a mathematical function that receives signals from other neurons, runs some calculations, and sends signals on through multiple layers of neurons. At first the flow of information is more or less random, but through training, the connections between neurons improve as the network adapts to the training data. If an AI researcher wants to build a bilingual model, for example, they train it on a large amount of text in both languages. This adjusts the connections between neurons so that the model can relate text in one language to the equivalent words in the other.
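To make the idea of a “neuron” concrete, here is a minimal Python sketch that is not taken from the research. One artificial neuron computes a weighted sum of its inputs, adds a bias, and squashes the result with a sigmoid function. Training nudges the weights (the connections) by gradient descent until the outputs match the data. The toy task (logical OR), the `Neuron` class, the learning rate, and the number of epochs are all illustrative assumptions.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Squash any number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


class Neuron:
    """One artificial neuron: weighted sum of inputs plus a bias, through a sigmoid."""

    def __init__(self, n_inputs: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        # Connections start out random, before any training.
        self.weights = [rng.uniform(-1, 1) for _ in range(n_inputs)]
        self.bias = rng.uniform(-1, 1)

    def forward(self, inputs: list[float]) -> float:
        total = sum(w * x for w, x in zip(self.weights, inputs)) + self.bias
        return sigmoid(total)

    def train_step(self, inputs: list[float], target: float, lr: float = 1.0) -> None:
        """Adjust each connection to reduce the squared error on one example."""
        out = self.forward(inputs)
        # Gradient of 0.5 * (out - target)^2 with respect to the weighted sum.
        grad = (out - target) * out * (1.0 - out)
        self.weights = [w - lr * grad * x for w, x in zip(self.weights, inputs)]
        self.bias -= lr * grad


# Toy training data (hypothetical): the logical OR of two inputs.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 1.0)]

neuron = Neuron(n_inputs=2)
for epoch in range(5000):
    for x, y in DATA:
        neuron.train_step(x, y)

for x, y in DATA:
    print(x, round(neuron.forward(x), 2), "target", y)
```

A real language model stacks millions of such units in many layers, but the principle is the same: training repeatedly adjusts connection strengths to fit the data.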

But this training process takes a lot of computing power, and if the model doesn't work very well, or if the user's needs change later on, it's hard to adapt. Mikel Artetxe, a coauthor of the new research and founder of the AI startup Reka, gave an example: suppose a model has been trained on 100 languages but doesn't cover the one you need. You could start over from scratch, but that is far from ideal.

Artetxe and his colleagues tried to get around these limitations. In earlier work, they trained a neural network in one language, then erased what it knew about the building blocks of words, called tokens. These are stored in the first layer of the network, known as the embedding layer. They then retrained the model on a second language, which filled the embedding layer with new tokens from that language.
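The erase-and-retrain idea can be sketched with a toy model in Python. This is a hypothetical illustration, not the researchers' code: the “model” is just an embedding table (one vector per token) feeding a tiny shared “body” that predicts whether a fruit is sweet. After training on English words, the embedding table is thrown away and rebuilt for Spanish words. Keeping the body frozen during retraining is a simplifying assumption of this sketch, as are the names `ToyModel` and `reset_embeddings`, the vector size, and the vocabulary.

```python
import math
import random

DIM = 4  # size of each token's embedding vector (arbitrary toy value)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class ToyModel:
    """Hypothetical toy: embedding layer (token -> vector) plus a linear 'body'."""

    def __init__(self, vocab: list[str], seed: int = 0) -> None:
        self.rng = random.Random(seed)
        self.body_w = [self.rng.uniform(-0.5, 0.5) for _ in range(DIM)]
        self.body_b = 0.0
        self.reset_embeddings(vocab)

    def reset_embeddings(self, vocab: list[str]) -> None:
        """Erase the embedding layer: a fresh random vector for every token."""
        self.embed = {t: [self.rng.uniform(-0.5, 0.5) for _ in range(DIM)] for t in vocab}

    def predict(self, tok: str) -> float:
        e = self.embed[tok]
        return sigmoid(sum(w * x for w, x in zip(self.body_w, e)) + self.body_b)

    def train(self, data, passes: int, lr: float = 0.5, train_body: bool = True) -> None:
        for _ in range(passes):
            for tok, label in data:
                e = self.embed[tok]
                g = self.predict(tok) - label  # cross-entropy gradient w.r.t. the logit
                # Compute the embedding update from the current (old) body weights.
                new_e = [x - lr * g * w for x, w in zip(e, self.body_w)]
                if train_body:
                    self.body_w = [w - lr * g * x for w, x in zip(self.body_w, e)]
                    self.body_b -= lr * g
                self.embed[tok] = new_e


# Label 1 = sweet, 0 = sour (toy data).
english = [("apple", 1), ("lemon", 0), ("mango", 1), ("lime", 0)]
spanish = [("manzana", 1), ("limón", 0), ("fresa", 1), ("lima", 0)]

model = ToyModel([t for t, _ in english])
model.train(english, passes=200)

# Erase the embedding layer and swap in the second language's tokens.
model.reset_embeddings([t for t, _ in spanish])
body_before = list(model.body_w)
model.train(spanish, passes=200, train_body=False)  # deeper layer stays frozen

for tok, label in spanish:
    print(tok, round(model.predict(tok), 2), "label", label)
```

Only the new token vectors change during retraining; the body, which learned in English what separates sweet from sour, is reused unchanged. Real models have many layers and millions of parameters, so this shows only the shape of the procedure.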

Even though the new embeddings were mismatched with the rest of the model, which had been trained on the first language, the retraining worked: the model could learn and process the new language. The researchers reasoned that while the embedding layer stored information specific to the words of a language, the deeper layers of the network stored more abstract information about the concepts behind human languages, and this helped the model learn the second language.

Yihong Chen, the lead author of the recent study, pointed out that speakers of different languages share the same underlying concepts: “We live in the same world. We conceptualize the same things with different words.” This kind of high-level reasoning lets the model understand that “an apple is something sweet and juicy, instead of just a word.”

Although this forgetting approach was an effective way to add a new language to a model that had already been trained, the retraining was still demanding: it required a lot of linguistic data and processing power. Chen suggested a tweak. Instead of training, erasing the embedding layer, and then retraining, they should periodically reset the embedding layer during the initial round of training. According to Artetxe, this gets the whole model used to being reset, which makes it easier to extend the model to another language later.
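Chen's tweak, resetting the embedding layer at regular intervals during the first round of training, can be sketched with a toy embedding-plus-body model in Python. The `pretrain` function, its `reset_every` parameter, the reset schedule, and the model itself are hypothetical choices for illustration; the sketch does not reproduce the researchers' RoBERTa setup or their results.

```python
import math
import random

DIM = 4  # toy embedding size (arbitrary)


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def pretrain(data, passes, reset_every=None, lr=0.5, seed=0):
    """Train a toy embedding table plus a shared linear 'body'.

    If reset_every is set, the embedding table is re-randomized every
    `reset_every` passes (periodic forgetting). The body is never reset.
    Returns a prediction function and the number of resets performed.
    """
    rng = random.Random(seed)
    vocab = [tok for tok, _ in data]

    def fresh_embeddings():
        # The "forgetting" step: a new random vector for every token.
        return {tok: [rng.uniform(-0.5, 0.5) for _ in range(DIM)] for tok in vocab}

    embed = fresh_embeddings()
    body_w = [rng.uniform(-0.5, 0.5) for _ in range(DIM)]
    body_b = 0.0
    resets = 0

    for p in range(1, passes + 1):
        for tok, label in data:
            e = embed[tok]
            pred = sigmoid(sum(w * x for w, x in zip(body_w, e)) + body_b)
            g = pred - label  # cross-entropy gradient w.r.t. the logit
            # Update embedding and body together, both from the old values.
            embed[tok] = [x - lr * g * w for x, w in zip(e, body_w)]
            body_w = [w - lr * g * x for w, x in zip(body_w, e)]
            body_b -= lr * g
        # Reset on schedule, but not after the final pass.
        if reset_every and p % reset_every == 0 and p < passes:
            embed = fresh_embeddings()
            resets += 1

    def predict(tok):
        e = embed[tok]
        return sigmoid(sum(w * x for w, x in zip(body_w, e)) + body_b)

    return predict, resets


data = [("apple", 1), ("lemon", 0), ("mango", 1), ("lime", 0)]
predict, resets = pretrain(data, passes=200, reset_every=50)
print("resets:", resets)
for tok, label in data:
    print(tok, round(predict(tok), 2), "label", label)
```

The body keeps learning across resets while the token vectors are repeatedly wiped, which is the mechanism described above. Whether this makes later adaptation easier is the empirical claim of the research; a toy this small cannot demonstrate it.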

The researchers applied their periodic-forgetting technique to a widely used language model called RoBERTa and compared it with the same model trained the standard way, without forgetting. The forgetting model did slightly worse than the conventional one, scoring 85.1 versus 86.1 on a common measure of language accuracy. The team then retrained both models on other languages using much smaller datasets of only 5 million tokens, rather than the 70 billion tokens used in the initial training. The standard model's accuracy fell to an average of 53.3, while the forgetting model's fell only to 62.7.

The forgetting model also held up much better when the team imposed computational limits during retraining. When the researchers cut the number of training steps from 125,000 to 5,000, the forgetting model's accuracy fell only to an average of 57.8, while the standard model's plunged to 37.2, no better than random guessing.
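The results in the last two paragraphs can be compared with a little arithmetic. This Python snippet uses only the scores and sizes quoted in the article; the setting labels and the `forgetting_advantage` helper are our own.

```python
# Average accuracy scores as quoted in the article; setting labels are our own.
SCORES = {
    "original training": {"standard": 86.1, "forgetting": 85.1},
    "retrained on 5M tokens": {"standard": 53.3, "forgetting": 62.7},
    "retrained in 5,000 steps": {"standard": 37.2, "forgetting": 57.8},
}


def forgetting_advantage(setting: str) -> float:
    """Points by which the forgetting model beats (positive) or trails (negative) the standard one."""
    s = SCORES[setting]
    return round(s["forgetting"] - s["standard"], 1)


data_shrink = 70_000_000_000 // 5_000_000  # times less data used for retraining
step_shrink = 125_000 // 5_000             # times fewer training steps

for setting in SCORES:
    print(f"{setting}: {forgetting_advantage(setting):+.1f} points")
print(f"data cut {data_shrink:,}x, steps cut {step_shrink}x")
# original training: -1.0 points
# retrained on 5M tokens: +9.4 points
# retrained in 5,000 steps: +20.6 points
# data cut 14,000x, steps cut 25x
```

Put this way, the forgetting model gives up 1 point under normal training but leads by 9.4 points with 14,000 times less retraining data and by 20.6 points with 25 times fewer retraining steps.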

The team concluded that periodic forgetting seems to make the model better at learning languages in general. Evgenii Nikishin, a researcher at Mila, noted that because the model keeps forgetting and relearning during training, teaching it something new later becomes easier. This suggests that when language models understand a language, they do so at a deeper level than the meanings of individual words.

The approach resembles how human memory works. Benjamin Levy, a neuroscientist at the University of San Francisco, noted that human memory tends to retain the gist of experiences rather than their details. Building adaptive forgetting into AI models, so that they work more like humans, may be one way to make them more flexible.

Artetxe hopes that more flexible forgetting language models could help bring the latest AI advances to more languages. AI models handle Spanish and English well because those languages have abundant training material, but they struggle with languages like Basque, which is spoken in northeastern Spain. Adapting existing models to languages like Basque could help close that gap.

Chen anticipates a future with many AI language models coexisting, each tailored to a different domain. In her view, a foundation model that can quickly adapt to new domains would foster a more diverse ecosystem of language models.

Yihong Chen helped demonstrate that a machine learning model that selectively forgets certain knowledge can effectively learn new languages. Courtesy of Yihong Chen