Eighteen countries have endorsed an agreement on AI safety, founded on the principle that AI should be secure by design.

The Guidelines for Secure AI System Development, led by the United Kingdom’s National Cyber Security Centre (NCSC) in partnership with the U.S. Cybersecurity and Infrastructure Security Agency (CISA), are said to be the first of their kind to be agreed globally.

Aimed primarily at providers of AI systems that use models hosted by an organization or external application programming interfaces (APIs), the guidelines are intended to help developers build cybersecurity in from the outset and throughout the development process, as an essential precondition of AI system safety.

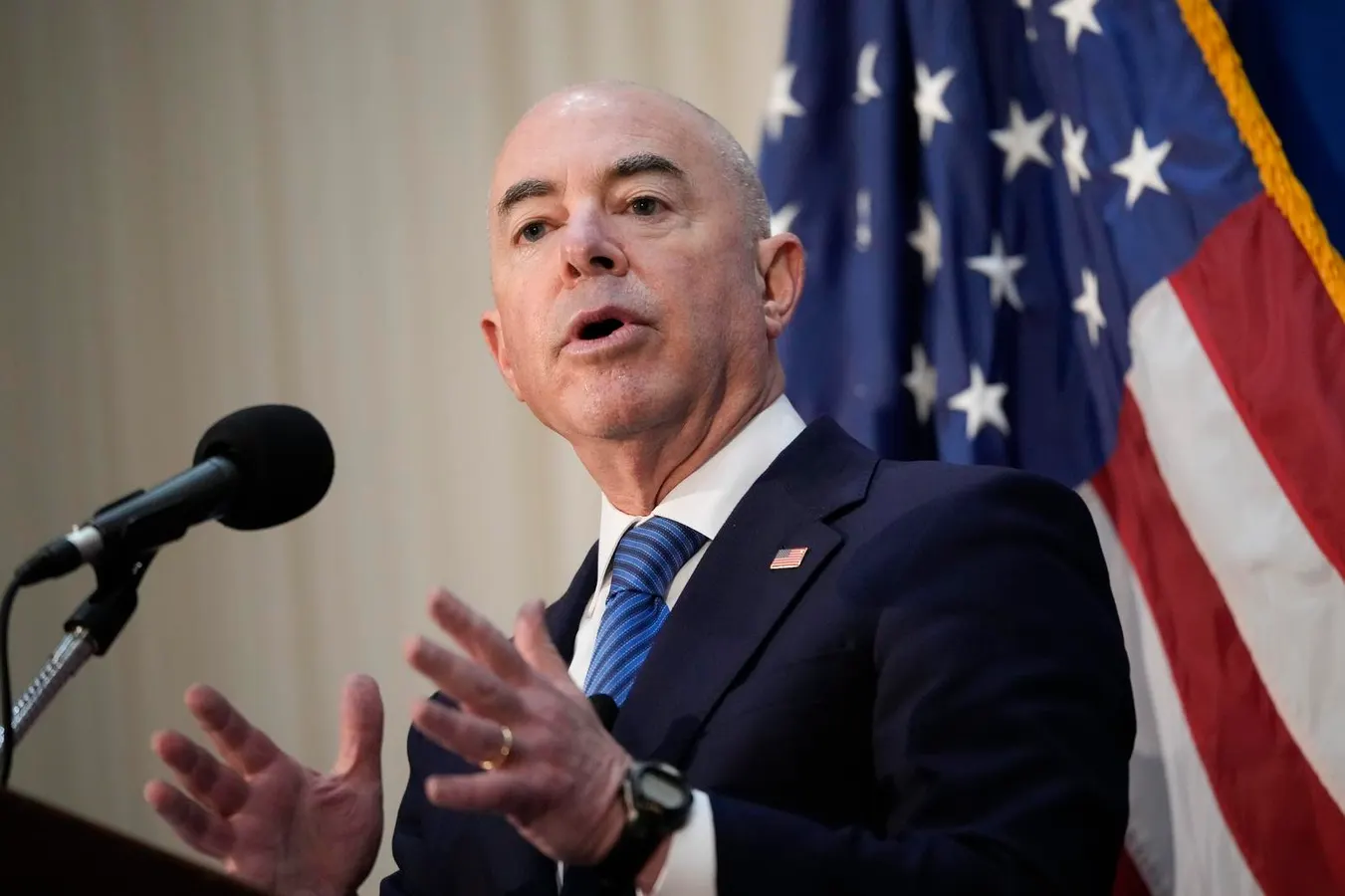

Alejandro Mayorkas, the U.S. secretary of homeland security, commended the guidelines jointly issued by CISA, the NCSC, and other international partners as a common-sense path to putting cybersecurity at the heart of designing, developing, deploying, and operating AI.

By incorporating “secure by design” principles, this collaborative initiative represents a significant agreement through which developers can protect customers at every stage of design and development.

The guidelines cover secure development, including supply chain security, documentation, and asset and technical debt management, as well as secure design, including understanding risks, threat modeling, and the trade-offs involved in system and model design.

For secure operation and maintenance, the guidelines address logging and monitoring, update management, and information sharing. Secure deployment covers protecting infrastructure and models from compromise, threat, or loss, developing incident management processes, and releasing systems responsibly.

The guidelines borrow heavily from the NCSC’s Secure development and deployment guidance, NIST’s Secure Software Development Framework, and the secure by design principles published by CISA, the NCSC, and other international cyber agencies. They have been endorsed by countries including Australia, Canada, Chile, Czechia, Estonia, France, Germany, Israel, Italy, New Zealand, Nigeria, Norway, Poland, South Korea, Singapore, and the United Kingdom. China, a prominent player in AI development, is not among the signatories.

The U.K. is keen to capitalize on the recent AI safety summit held at Bletchley Park. However, the guidelines, much like the discussions at that event, are long on general principles and short on specifics.

Other recent initiatives include CISA’s Roadmap for Artificial Intelligence, released earlier this month and designed to strengthen AI systems against cyber threats, and President Biden’s executive order last month directing the government to promote the adoption of global AI security standards.

According to CISA Director Jen Easterly, the publication of the Guidelines for Secure AI System Development marks a significant step forward in the collective commitment to ensuring the development and integration of AI capabilities that are secure by design.

“In the midst of our shared technological evolution, there has never been a more crucial moment for domestic and international cooperation to advance the principles of secure by design and cultivate resilient frameworks for the sustainable progress of AI systems globally.”